criminosis

JanusGraph

•Created by criminosis on 7/10/2024 in #questions

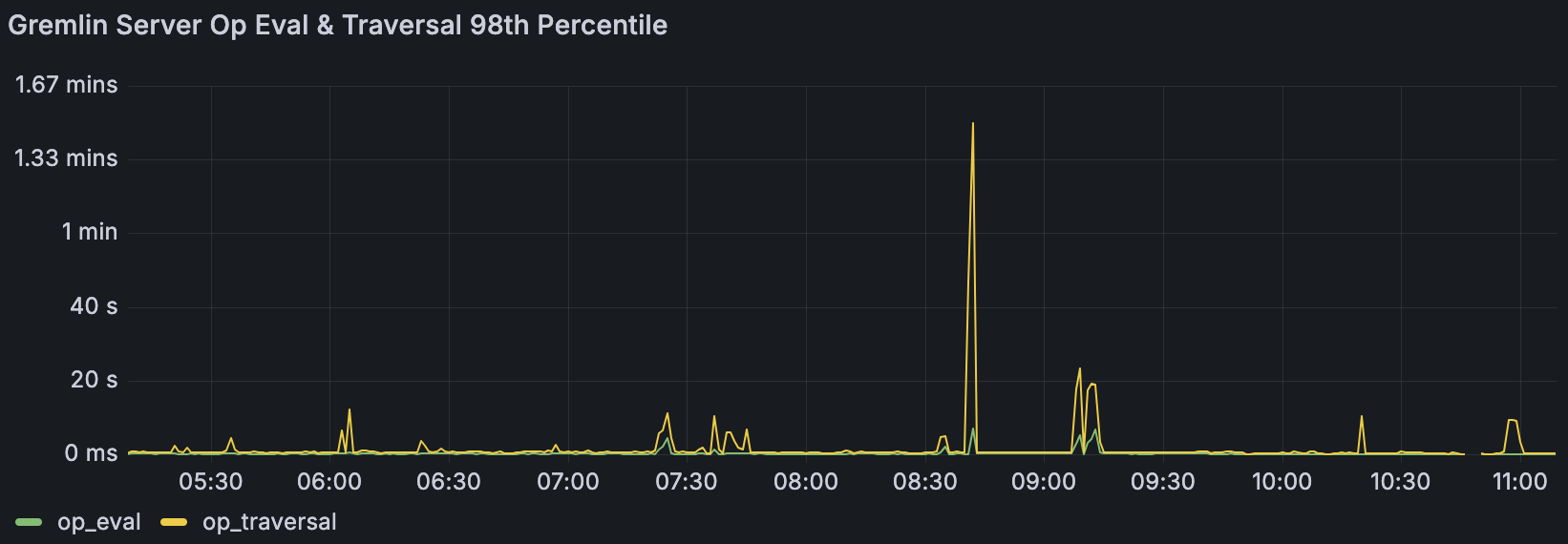

MergeV "get or create" performance asymmetry

So I'm working on adding the mergeV step among others to the Rust gremlin driver. As part of that I took a pause and did a performance comparison to the "traditional" way of doing it.

So the Rust driver is submitting bytecode that's effectively doing:

"Traditional/Reference":

^ Given a batch of 10k vertices to write, it would do this for a chunk of 10 vertices in a single mutation traversal, using 10 connections in parallel to split up the batch until all 10k were written. It's well known that very long traversals don't perform well, and my own trials found that going above 50 vertices in a single traversal would cause timeouts for my use case, so I've generally been doing 10 and calling it good.
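The chunk-and-fan-out pattern described here can be sketched in Python as follows. This is a minimal illustration, not the Rust driver's code: `submit_chunk` and `fake_submit` are hypothetical stand-ins for whatever sends one mutation traversal over one connection.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Yield consecutive slices of `items`, each holding at most `size` entries."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def write_batch(vertices, submit_chunk, chunk_size=10, connections=10):
    """Split the batch into chunks and submit them on `connections` worker threads."""
    with ThreadPoolExecutor(max_workers=connections) as pool:
        return list(pool.map(submit_chunk, chunked(vertices, chunk_size)))


def fake_submit(chunk):
    # Hypothetical stand-in: a real version would send one traversal per chunk.
    return len(chunk)


results = write_batch(list(range(10_000)), fake_submit)
```

With these defaults, 10k vertices become 1,000 network calls; raising `chunk_size` to 200 would cut that to 50 calls.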

But this puts a ceiling on the amount of work a single network call can do (10 vertices' worth), hence why I started trying out mergeV() to stack more info into a single call without making the traversal prohibitively long.

And then the "mergeV()" way:

I would run the mergeV call with chunks of 200 vertices in each call.

6 replies

JanusGraph

•Created by criminosis on 5/24/2024 in #questions

Comma Separated Config Options Values Via Environment Variable?

Hey @Bo & @porunov I was trying out the retry logic added by my PR (https://github.com/JanusGraph/janusgraph/pull/4409) by setting environment variables in my docker compose like so:

janusgraph.index.some_index_name_here.elasticsearch.retry-error-codes=408,429

Much like was done in the unit test I wrote (https://github.com/JanusGraph/janusgraph/blob/487e10ca276678862fd8fb369d6d17188703ba67/janusgraph-es/src/test/java/org/janusgraph/diskstorage/es/rest/RestClientSetupTest.java#L240). But to my surprise it doesn't seem to be received well during startup:

janusgraph | Caused by: java.lang.NumberFormatException: For input string: "408,429"
janusgraph | at java.base/java.lang.NumberFormatException.forInputString(Unknown Source) ~[?:?]
.....
janusgraph | at org.janusgraph.diskstorage.es.rest.RestClientSetup.connect(RestClientSetup.java:83) ~[janusgraph-es-1.1.0-20240427-134121.4bddfb4.jar:?]

The surprise to me being it's using the same String[] parsing logic as other commands, which got me curious if others didn't work either. So I tried a more mundane one like

janusgraph.index.some_index_name_here.hostname=elasticsearch,elasticsearch

And it too doesn't seem to get parsed correctly:

janusgraph | Caused by: java.net.UnknownHostException: elasticsearch,elasticsearch
janusgraph | at java.base/java.net.InetAddress$CachedAddresses.get(Unknown Source) ~[?:?]
....
janusgraph | at org.elasticsearch.client.RestClient.performRequest(RestClient.java:288) ~[elasticsearch-rest-client-8.10.4.jar:8.10.4]
janusgraph | at org.janusgraph.diskstorage.es.rest.RestElasticSearchClient.clusterHealthRequest(RestElasticSearchClient.java:171) ~[janusgraph-es-1.1.0-20240427-134121.4bddfb4.jar:?]

So just wondered is there a special syntax that's intended here?
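Both stack traces are consistent with the whole value arriving as one unsplit string. The Python sketch below only reproduces that symptom. It is not JanusGraph's parsing code, and both helper names are made up for illustration.

```python
def parse_as_single_int(raw):
    """Treat the whole value as one integer (the behaviour the first trace suggests)."""
    return int(raw)


def parse_as_int_list(raw):
    """Split on commas first, then convert each piece (the behaviour that was expected)."""
    return [int(part.strip()) for part in raw.split(",") if part.strip()]


raw = "408,429"

try:
    parse_as_single_int(raw)
    single_ok = True
except ValueError:
    # Python's analogue of the NumberFormatException in the log above.
    single_ok = False

codes = parse_as_int_list(raw)
```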

33 replies

JanusGraph

•Created by criminosis on 4/25/2024 in #questions

Phantom Unique Data / Data Too Large?

I've been chasing down a weird writing bug for our graph writer, but I feel like I've stumbled into conflicting realities in my graph and wanted to share in case I'm missing something larger. For context the environment in play is my local development environment, all running inside docker compose (host machine is MacOS, on the off chance it matters).

- JanusGraph 1.0.0

- Cassandra 4

- Elasticsearch 8

I'm writing vertices into a graph. I've got a property whose uniqueness is enforced via a composite index with unique() enabled. I don't have locking turned on. I know that's documented (https://docs.janusgraph.org/schema/index-management/index-performance/#index-uniqueness), but with a single local Cassandra I was thinking it wouldn't be required since there are no other instances to sync with. Maybe that's the root of the whole issue.

The vertices also use custom vertex ids derived off this property. My thought was this way knowing the property I could go ahead and issue traversals against the vertex id instead of having to detour to an index, but still leverage the index when it was more convenient.

Trying to rerun some data for processing I'm having a violation of the aforementioned composite unique index, but I'm puzzled as to why.

Using gDotV I'm able to determine the following:

1. Querying based on the property that should use the unique index returns the vertex as expected with the expected custom id. However the properties off the vertex are empty like so when shown in gDotV: "properties": [],

2. Running just a query for the custom id as in g.V('id_goes_here') paradoxically seems to not return the vertex.

So this leaves me in a weird state that seems to be implying:

- The properties of the vertex are empty, but the unique index is still populated with the property, even when the vertex itself is not

- I'm not able to find the vertex with its vertex id

13 replies

JanusGraph

•Created by criminosis on 4/19/2024 in #questions

Mixed Index (ElasticSearch) Backpressure Ignored?

I understand that JG views writes to mixed indices as "best effort" and documents (https://docs.janusgraph.org/operations/recovery/#transaction-failure) that failures in transactions with regard to mixed indices are left to a separate periodic cleanup process after enabling JG's WAL:

If the primary persistence into the storage backend succeeds but secondary persistence into the indexing backends or the logging system fail, the transaction is still considered to be successful because the storage backend is the authoritative source of the graph. ... In addition, a separate process must be setup that reads the log to identify partially failed transaction and repair any inconsistencies caused. ....

But I wanted to inquire if 429 Too Many Requests could be omitted from this.

7 replies

JanusGraph

•Created by criminosis on 2/27/2024 in #questions

Custom Vertex IDs and Serialized Graph

Just wondering if anyone has tried to use custom vertex ids and restore a serialized graph?

g.io("/tmp/foo.kryo").write().iterate()

Doing the converse with a read() then errors with "must provide vertex id".

Looking at the serialized file it seems the vertex ids are present, but presumably aren't being propagated during the read?

6 replies

JanusGraph

•Created by criminosis on 2/7/2024 in #questions

Do Custom Vertex IDs Guarantee Single Instance of That Vertex?

Previously I was using a composite index with a unique constraint with locking to try to enforce uniqueness for new vertices, but switching to using that property as a custom vertex ID has a noticeable performance boost.

Just wondering if we're still guaranteed vertex uniqueness that locking previously would (try to) enforce?

I figured it does, assuming that custom vertex id is what's getting serialized to the backend. If so I'm guessing there literally wouldn't be a place for a doppelgänger to exist, but figured I'd ask since it wasn't explicitly stated in the docs after switching to custom vertex ids.

5 replies

Apache TinkerPop

•Created by criminosis on 2/5/2024 in #questions

Iterating over responses

I've got a query akin to this in a Python application using gremlin-python:

some_property is indexed by a mixed index served by ElasticSearch behind JanusGraph, with at least for the moment about 1 million entries. I'm still building up my dataset so foo will actually return about 100k of the million, but future additions will change that.

If I do the query as written above it times out, presumably it's trying to send back all 100k at once? If I do a limit of like 100, it seems like I get all 100 at once (of course changing t.next() to instead being a for loop to observe all 100).

In the Tinkerpop docs there's mention of a server side setting resultIterationBatchSize with a default of 64. I'd expect it to just send back the first part of the result set as a batch of 64, and I only print 1 of them, discarding the rest.

The Gremlin-Python section explicitly calls out a client side batch setting:

But I'd expect that to matter only if you want to override the server side's default of 64?

Ultimately what I want to do is request some large result set, but only hold it incrementally in batches on the client side, without having to hold the entire result set in memory there.

7 replies
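The client-side goal in this post (hold only one batch at a time) can be sketched with a plain Python generator. This assumes the result source is a lazy iterator. Whether a gremlin-python traversal actually streams that way is exactly the open question here, so `fake_results` is only a stand-in.

```python
from itertools import islice


def in_batches(results, batch_size):
    """Yield lists of up to `batch_size` items, pulling from `results` lazily."""
    iterator = iter(results)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Stand-in for a streamed result set; only one batch is materialised at a time.
fake_results = (f"vertex-{i}" for i in range(150))
sizes = [len(batch) for batch in in_batches(fake_results, 64)]
```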

Apache TinkerPop

•Created by criminosis on 1/26/2024 in #questions

Documentation states there should be a mid-traversal .E() step?

Just wondering if I'm missing something, or if the docs are mistaken. It's possible to do a mid-traversal .V() step. But there seems to be a possible copy-paste error in the TinkerPop docs, which assert that a similar power exists for an .E() step?

https://tinkerpop.apache.org/docs/current/reference/#e-step

Trying to execute a mid-traversal E() step, at least against JanusGraph from gDotV appears to not register as a valid step, on the off chance that's meaningful context.

Looking around I found an old post from 2020, I believe from @spmallette, that seems to confirm a mid-traversal E() step is not intended to exist despite the docs implying the contrary. That got me thinking the docs may have been unintentionally copy-pasted from the V() documentation.

https://groups.google.com/g/gremlin-users/c/xVzQRLcgQk4/m/L_5uSjSCAgAJ

I was hoping to leverage one in order to switch mid-traversal to a different edge amongst a batch and leverage a composite index over edges. At least for the first edge, that starts off with g.E().has('edge_label', 'edge_property', 'edge_value').where(outV().has(... and then later pivots to another .E() step, like you can do with a .V().

Doing g.V().has('out_v_label', 'out_v_property', 'out_v_property_value').outE('edge_label').has('edge_property', 'edge_value') in comparison is significantly slower. Local testing seems to be 6ms for the g.E()... traversal compared to 121ms for the g.V()... pathway.

The vertex in question has about 21k edges coming off it, so it's not surprising that going from the vertex (whose out_v_property is also indexed) and checking for a match among the properties of 21k edges is significantly slower than just "starting" on the matching edge based on a property that's indexed directly.

5 replies

JanusGraph

•Created by criminosis on 11/30/2023 in #questions

Janusgraph Tokenizer & Solr

I recently encountered what I believe is an incompatibility caused by the JanusGraph tokenizer being applied to queries before their submission to Solr. It appears this is only done for Solr, not for Elasticsearch, and moreover only for one particular predicate. Has anyone else bumped into this?

Here's a link to my post on the mailing list that gives more detail and links to the code in question:

https://lists.lfaidata.foundation/g/janusgraph-users/message/6760

5 replies

JanusGraph

•Created by criminosis on 11/14/2023 in #questions

Splitting Backing ElasticSearch Index To Increase Primary Shards As JG Mixed Index Grows

Has anyone had to resize an ElasticSearch index that's backing a JanusGraph Mixed Index?

Configuration wise it seems you're only able to convey to JanusGraph a singular primary shard & replica count when it creates an ES Index.

I'm projecting to eventually have a couple Mixed Indices exceed what will be reasonable for a single primary shard in the backing ES Index (given the rule of thumb of 10-50GB or 200M documents). So as a configuration default it makes sense to leave it as 1 for the other Mixed Indices.

Just wondering if anyone else has tackled this for heftier mixed indices. In my particular use case it's largely being inflated due to wanting to leverage the full-text search over text documents that are themselves stored as vertex properties in my graph.

I think what I'd need to do would be:

1. Set the backing ES indices as read only (so make sure any JG uses at that time aren't going to try to mutate, maybe best to just turn JG off)

2. Invoke the ES split API, giving the post split indices a novel name

3. After the split completes, drop the original ES Index and assign the post split ES indices an alias of the original ES index's name. Probably would want JG definitely off for this.

4. Turn JG back on
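As a rough sketch, steps 1 to 3 above map onto Elasticsearch REST calls roughly as follows. The Python below only builds the requests and sends nothing. The index names are hypothetical, and the paths and bodies should be checked against the documentation for the ES version in use.

```python
def build_split_plan(index, target, shards):
    """Return (method, path, body) tuples for the split procedure; no request is sent."""
    return [
        # Step 1: block writes on the source index so it can be split.
        ("PUT", f"/{index}/_settings", {"settings": {"index.blocks.write": True}}),
        # Step 2: split into a new index with more primary shards.
        ("POST", f"/{index}/_split/{target}", {"settings": {"index.number_of_shards": shards}}),
        # Step 3a: drop the original index once the split has completed.
        ("DELETE", f"/{index}", None),
        # Step 3b: alias the new index under the original name.
        ("POST", "/_aliases", {"actions": [{"add": {"index": target, "alias": index}}]}),
    ]


# Hypothetical names: "janusgraph_docs" stands in for the index JG created.
plan = build_split_plan("janusgraph_docs", "janusgraph_docs_split", 4)
```

The split API requires the target shard count to be a multiple of the source's, so going from 1 primary shard to 4 fits that constraint.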

Ideally this problem would be handled using ILM, with an alias that JG expects and a policy in place that automatically rolls the indices as they grow. But only the latest index instance is allowed to receive writes, which I think will cause problems if JG tries to mutate a document in a prior ES index: JG doesn't know anything about the index rolling, so it won't be able to send the mutate command to the particular instance through the Index API.

But it got me wondering whether silently converting the name that JG presumably expects to be an actual index into an alias, without its awareness, would also cause issues?

2 replies

Apache TinkerPop

•Created by criminosis on 8/16/2023 in #questions

VertexProgram filter graph before termination

I have a VertexProgram that operates on vertices of type A and B.

B vertices are "below" A vertices.

The VertexProgram aggregates stuff about the underlying B vertices into their common parent A vertex.

I've successfully done the aggregation, but now I want to filter the A vertices that don't pass a predicate about the aggregated property they've accumulated based on messages from their underlying B vertices.

I was thinking of having a follow-up state machine for the VertexProgram like the ShortestPath vertex program does when it does its aggregations to filter any vertices that aren't of label A or are label A but fail the predicate check upon their aggregated value.

I tried doing this via .drop() after returning true from the Feature's requiresVertexRemoval(). However it seems SparkGraphComputer doesn't support this feature.

I've been able to flatten the identifiers of A vertices using a follow-up map reduce job chained with the VertexProgram but was just wondering if maybe there's something else I'm missing?

Being able to return a filtered view of the graph following a VertexProgram's execution would be nice without having to flatten IDs via a trailing MapReduce job writing a Set to the Memory object.

5 replies

JanusGraph

•Created by criminosis on 7/3/2023 in #questions

Case Insensitive TextRegex?

Is there a way to convey case insensitivity to a textRegex regex without doing a per-character enumeration of casing?

E.g. I'd normally make a regex like "(?i)foobar" (or pass to an explicit flags parameter in the regex builder) to match FOOBAR, Foobar, etc. But it seems like I have to do textRegex("[Ff][Oo][Oo][Bb][Aa][Rr]") to proxy that functionality?

7 replies
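If the per-character workaround in this post turns out to be necessary, the pattern can at least be generated rather than typed by hand. A minimal Python sketch follows, with a hypothetical helper name. Non-letter characters are escaped using Python's rules, which may need adapting to the regex dialect of the index backend.

```python
import re


def case_insensitive_pattern(literal):
    """Expand a literal into a regex with one [Xx] character class per cased letter."""
    parts = []
    for ch in literal:
        lower, upper = ch.lower(), ch.upper()
        if lower != upper and len(lower) == 1 and len(upper) == 1:
            parts.append(f"[{upper}{lower}]")
        else:
            # Digits, punctuation and other uncased characters are matched literally.
            parts.append(re.escape(ch))
    return "".join(parts)


pattern = case_insensitive_pattern("foobar")
```

The resulting string is what would be passed to textRegex in place of the hand-written character classes.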