Gary, el Pingüino Artefacto

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 5/2/2025 in #help

Weird TypeScript error in a monorepo (pnpm with Turborepo)

Argument of type 'PgTableWithColumns<{ name: "users"; schema: undefined; columns: { id: PgColumn<{ name: string; tableName: "users"; dataType: "string"; columnType: "PgUUID"; data: string; driverParam: string; notNull: true; hasDefault: true; ... 6 more ...; generated: undefined; }, {}, {}>; ... 8 more ...; accessCodeSentTo: PgColumn...' is not assignable to parameter of type 'SQL<unknown> | Subquery<string, Record<string, unknown>> | PgViewBase<string, boolean, ColumnsSelection> | PgTable<TableConfig>'.

Type 'PgTableWithColumns<{ name: "users"; schema: undefined; columns: { id: PgColumn<{ name: string; tableName: "users"; dataType: "string"; columnType: "PgUUID"; data: string; driverParam: string; notNull: true; hasDefault: true; ... 6 more ...; generated: undefined; }, {}, {}>; ... 8 more ...; accessCodeSentTo: PgColumn...' is not assignable to type 'PgTable<TableConfig>'.

The types of '.config.columns' are incompatible between these types.

Type '{ id: PgColumn<{ name: string; tableName: "users"; dataType: "string"; columnType: "PgUUID"; data: string; driverParam: string; notNull: true; hasDefault: true; isPrimaryKey: true; isAutoincrement: false; ... 4 more ...; generated: undefined; }, {}, {}>; ... 8 more ...; accessCodeSentTo: PgColumn<...>; }' is not assignable to type 'Record<string, PgColumn<ColumnBaseConfig<ColumnDataType, string>, {}, {}>>'.

Property 'id' is incompatible with index signature.

Type 'PgColumn<{ name: string; tableName: "users"; dataType: "string"; columnType: "PgUUID"; data: string; driverParam: string; notNull: true; hasDefault: true; isPrimaryKey: true; isAutoincrement: false; hasRuntimeDefault: false; enumValues: undefined; baseColumn: never; identity: undefined; generated: undefined; }, {}, ...' is not assignable to type 'PgColumn<ColumnBaseConfig<ColumnDataType, string>, {}, {}>'.

The types of 'table..config.columns' are incompatible between these types.

I cut it off here because of Discord's message length limit.

3 replies

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 5/2/2025 in #help

drizzle-kit generate fails when package.json has "type": "module"

Hi, I have Drizzle set up in a monorepo and the package.json has "type": "module", so the imports look like ".../../some-file.js". The generate command fails 😦

cd packages/databases && pnpm generate

@repo/[email protected] generate <fake>\packages\databases
drizzle-kit generate --config ./src/config.ts

Reading config file '<fake>\packages\databases\src\config.ts'
Error: Cannot find module './files-model.js'
Require stack:
- <fake>\packages\databases\src\models\tenants-model.ts
- <fake>\node_modules.pnpm\[email protected]\node_modules\drizzle-kit\bin.cjs
    at Function.<anonymous> (node:internal/modules/cjs/loader:1249:15)
    at Module._resolveFilename (<fake>\node_modules.pnpm\[email protected]\node_modules\drizzle-kit\bin.cjs:16751:40)
    at Function._load (node:internal/modules/cjs/loader:1075:27)
    at TracingChannel.traceSync (node:diagnostics_channel:315:14)
    at wrapModuleLoad (node:internal/modules/cjs/loader:218:24)
    at Module.require (node:internal/modules/cjs/loader:1340:12)
    at require (node:internal/modules/helpers:141:16)
    at Object.<anonymous> (<fake>\packages\databases\src\models\tenants-model.ts:6:32)
    at Module._compile (node:internal/modules/cjs/loader:1546:14)
    at Module._compile (<fake>\node_modules.pnpm\[email protected]\node_modules\drizzle-kit\bin.cjs:14260:30) {
  code: 'MODULE_NOT_FOUND',
  requireStack: [
    '<fake>\packages\databases\src\models\tenants-model.ts',
    '<fake>\node_modules\.pnpm\[email protected]\node_modules\drizzle-kit\bin.cjs'
  ]
}

1 reply

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 4/26/2025 in #help

Suggestion for the Caching API

Hi, I was playing a bit with the Upstash cache and it's very useful for catalog tables such as categories, tags, and countries. For filtered queries, though, it would be great to cache by a column: for example, cache queries on the posts table by userId and then invalidate them by that userId.

Maybe this is already possible and I missed it.

4 replies
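The column-scoped caching idea in this suggestion can be illustrated independently of any library. The following is a minimal in-memory sketch, assuming a hypothetical `TaggedCache` class and a hypothetical `posts:userId:<id>` tag format; neither is part of Drizzle's or Upstash's API. Each cached query result is stored under its query key and also indexed by tag, so invalidating one user's tag drops every cached query for that user while leaving other users' entries intact.

```typescript
// Hypothetical tag-based cache (NOT Drizzle's or Upstash's API).
// Entries are stored by query key and indexed under one or more tags.
class TaggedCache<V> {
  private readonly entries = new Map<string, V>();
  private readonly keysByTag = new Map<string, Set<string>>();

  set(key: string, value: V, tags: string[]): void {
    this.entries.set(key, value);
    for (const tag of tags) {
      const keys = this.keysByTag.get(tag) ?? new Set<string>();
      keys.add(key);
      this.keysByTag.set(tag, keys);
    }
  }

  get(key: string): V | undefined {
    return this.entries.get(key);
  }

  // Drop every entry that was stored under the given tag.
  invalidateTag(tag: string): void {
    const keys = this.keysByTag.get(tag);
    if (keys === undefined) return;
    keys.forEach((key) => this.entries.delete(key));
    this.keysByTag.delete(tag);
  }
}

// Hypothetical tag naming scheme: one tag per (table, column, value).
const userTag = (userId: string): string => `posts:userId:${userId}`;

const cache = new TaggedCache<string[]>();
cache.set("posts?userId=1&page=1", ["post-a", "post-b"], [userTag("1")]);
cache.set("posts?userId=1&page=2", ["post-c"], [userTag("1")]);
cache.set("posts?userId=2&page=1", ["post-d"], [userTag("2")]);

// After user 1 creates or edits a post, invalidate only that user's queries.
cache.invalidateTag(userTag("1"));
```

A shared store such as Redis could hold the same two maps (key to value, tag to set of keys); that layout is an assumption for illustration, not a description of any existing integration.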

Cloudflare Developers

•Created by Gary, el Pingüino Artefacto on 3/23/2025 in #workers-help

Caching for Workers

Hi, I set up a simple custom Worker that allows access to public files but denies access to user-uploaded files.

But every request invokes the Worker. I'm trying to cache each file for 1 hour to avoid the invocation, but it doesn't seem to work. Enabling public access via a custom domain isn't an option because I don't want user-uploaded files to be accessible.

Thanks 🙂

7 replies

Typing nested controllers

Hi, how can I get this param typed?

I know this is not recommended (https://hono.dev/docs/guides/best-practices), but I have 1000+ nested controllers that I need to refactor.

9 replies

Theo's Typesafe Cult

•Created by Gary, el Pingüino Artefacto on 2/24/2025 in #questions

Migrating from a Create Next App project to Turborepo

Hi, does anyone have a thorough guide or video on migrating to Turborepo? I'm new to Turborepo and kind of lost. The blog posts I read just move the entire project folder into an app, and I'm not sure that's right. What happens to the Prettier, ESLint, and other config files then?

4 replies

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 2/19/2025 in #help

mapWith not getting called on extras

Hi

1 reply

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 1/3/2025 in #help

OpenTelemetry?

Hi, is there a way to enable OpenTelemetry in Drizzle? I found some comments in this Discord but couldn't figure out how.

2 replies

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 11/28/2024 in #help

Recommended way for managing Postgres functions

Hi, I need to create several Postgres functions, and they are likely to change many times over the coming days and weeks.

I read that the recommended way is to create an empty migration file and add the SQL there. That means every change would require a new empty migration file.

I was thinking about keeping them in a directory of raw .sql files and creating them every time the project starts. That way, no migration file is needed.

Is this a good idea, or should I go with the empty migration?

1 reply
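The startup approach described in this post can be sketched as follows, assuming the .sql files have already been read into memory (for example with `node:fs`) and that `exec` is whatever the app uses to run raw SQL. `orderSqlFiles` and `applySqlFunctions` are hypothetical helpers, not Drizzle APIs. Because the files run on every start, each definition must be idempotent, which is why the sketch rejects files that do not use `CREATE OR REPLACE FUNCTION`.

```typescript
// Hypothetical helpers (not part of Drizzle): apply Postgres function
// definitions from raw .sql files every time the app starts.

// Runs one raw SQL string; wire this to your database driver.
type ExecSql = (sql: string) => Promise<void>;

// Validates the files and returns their SQL ordered by file name, so that
// numeric prefixes ("001_", "002_") control dependency order.
function orderSqlFiles(files: Record<string, string>): string[] {
  return Object.keys(files)
    .sort()
    .map((name) => {
      const sql = files[name];
      // Re-running on every start is only safe if each definition is idempotent.
      if (!/create\s+or\s+replace\s+function/i.test(sql)) {
        throw new Error(`${name} must use CREATE OR REPLACE FUNCTION`);
      }
      return sql;
    });
}

// Executes the definitions one after another.
async function applySqlFunctions(
  files: Record<string, string>,
  exec: ExecSql,
): Promise<void> {
  for (const sql of orderSqlFiles(files)) {
    await exec(sql);
  }
}

// Example input; in a real app these strings would come from a directory.
const files: Record<string, string> = {
  "002_count_posts.sql":
    "CREATE OR REPLACE FUNCTION count_posts() RETURNS bigint AS $$ SELECT count(*) FROM posts $$ LANGUAGE sql;",
  "001_now_utc.sql":
    "CREATE OR REPLACE FUNCTION now_utc() RETURNS timestamp AS $$ SELECT now() AT TIME ZONE 'utc' $$ LANGUAGE sql;",
};
const ordered = orderSqlFiles(files);
```

One caveat with this design: in PostgreSQL, `CREATE OR REPLACE FUNCTION` cannot change an existing function's return type, and changing the argument types creates a new overload rather than replacing the old one, so those changes still need an explicit `DROP FUNCTION`.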

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 11/16/2024 in #help

Branded IDs?

Hi, is it possible to map a primary key to a branded type from Effect?

9 replies
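For context, branding in TypeScript attaches a compile-time-only tag to a primitive type so that, for example, a user ID cannot be passed where a post ID is expected. The sketch below uses plain TypeScript rather than Effect's `Brand` module, and `toUserId` and `getUser` are hypothetical names. On the Drizzle side, column builders offer `.$type<T>()` to override a column's inferred TypeScript type, which is presumably where such a brand would be attached.

```typescript
// Plain-TypeScript branding (not Effect's `Brand` module): the brand exists
// only in the type system and is erased at runtime.
declare const brand: unique symbol;
type Brand<T, Name extends string> = T & { readonly [brand]: Name };

type UserId = Brand<string, "UserId">;
type PostId = Brand<string, "PostId">;

const UUID_RE =
  /^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/i;

// Hypothetical constructor: validate once at the boundary, then cast.
function toUserId(raw: string): UserId {
  if (!UUID_RE.test(raw)) throw new Error(`not a UUID: ${raw}`);
  return raw as UserId;
}

// Hypothetical consumer that only accepts user IDs.
function getUser(id: UserId): string {
  return `user:${id}`;
}

const id = toUserId("3f1c2b9a-1d2e-4f60-9a7b-0c1d2e3f4a5b");
getUser(id); // compiles
// getUser("3f1c2b9a-1d2e-4f60-9a7b-0c1d2e3f4a5b" as PostId);
// ^ would not compile: PostId is not assignable to UserId
```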

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 11/1/2024 in #help

Conditional batch on Neon Batch API?

Hi, is it possible to do something like:

3 replies

Drizzle Team

•Created by Gary, el Pingüino Artefacto on 10/14/2024 in #help

Applying Drizzle migrations on Vercel with Hono

Hi, I'm trying to apply the migrations in the directory like this:

I'm using Next.js, deployed on Vercel, with a Hono API. The route path is /api/hono/tenants/create.

But every time I get this error:

Error: Can't find meta/_journal.json file
    at /var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:42887
    at qp (/var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:43323)
    at /var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:44022
    at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
    at async o (/var/task/.next/server/chunks/636.js:67:10412)
    at async Function.P [as begin] (/var/task/.next/server/chunks/636.js:67:9981)
    at async qf (/var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:43460)

I have tried absolute paths, relative paths, path.join, etc.

Thanks for the help 😄

2 replies

Theo's Typesafe Cult

•Created by Gary, el Pingüino Artefacto on 10/14/2024 in #questions

Applying Drizzle migrations with Hono

Hi, I'm trying to apply the migrations in the directory like this:

I'm using Next.js, deployed on Vercel, with a Hono API. The route path is /api/hono/tenants/create.

But every time I get this error:

Error: Can't find meta/_journal.json file
    at /var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:42887
    at qp (/var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:43323)
    at /var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:44022
    at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
    at async o (/var/task/.next/server/chunks/636.js:67:10412)
    at async Function.P [as begin] (/var/task/.next/server/chunks/636.js:67:9981)
    at async qf (/var/task/.next/server/app/api/hono/[[...route]]/route.js:2467:43460)

I have tried absolute paths, relative paths, path.join, etc.

Thanks for the help 😄

3 replies

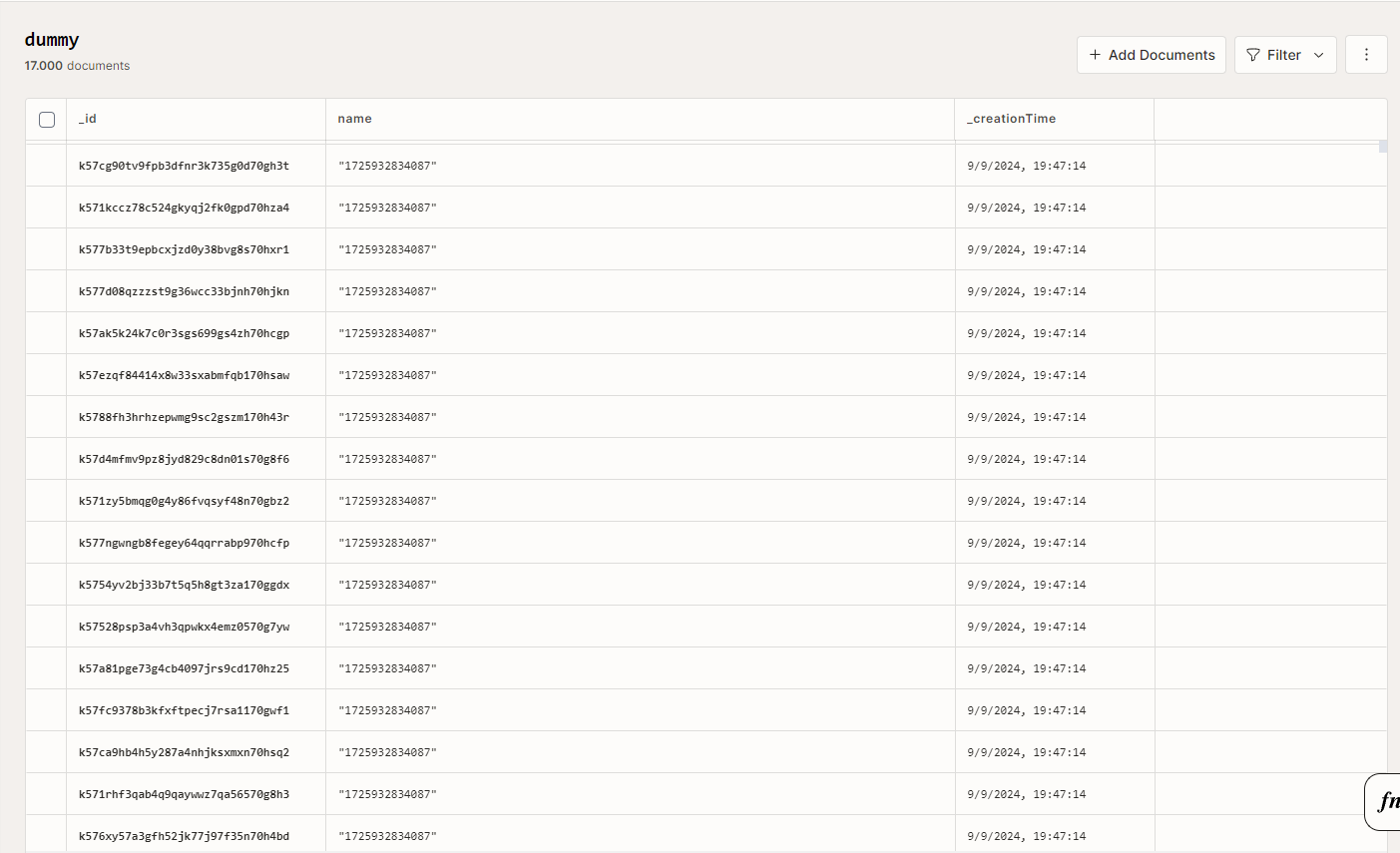

Convex Community

•Created by Gary, el Pingüino Artefacto on 9/10/2024 in #support-community

How do I aggregate more than 16K documents?

21 replies

Convex Community

•Created by Gary, el Pingüino Artefacto on 9/8/2024 in #support-community

Is the filter helper limited to 16,384 scanned documents?

2 replies

Convex Community

•Created by Gary, el Pingüino Artefacto on 8/28/2024 in #support-community

Spamming function calls when streaming OpenAI responses

Hi, I was looking at the convex-ai-chat repo and found this:

https://github.com/get-convex/convex-ai-chat/blob/main/convex/serve.ts#L70

Isn't a function triggered every time a new token gets streamed?

2 replies
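The concern in this question is write amplification: if every streamed token triggers a database update, a long answer produces hundreds of mutations. One common mitigation is to buffer tokens and write only after enough new text has accumulated. The following is a minimal sketch with a hypothetical `TokenBatcher` class and `flush` callback; it does not claim to reflect what convex-ai-chat actually does.

```typescript
// Hypothetical batching helper: accumulate streamed tokens and call `flush`
// with the full text so far only when at least `minNewChars` new characters
// have arrived since the last flush, plus once more when the stream ends.
class TokenBatcher {
  private text = "";
  private lastFlushedLength = 0;
  private readonly flush: (fullText: string) => void;
  private readonly minNewChars: number;

  constructor(flush: (fullText: string) => void, minNewChars = 64) {
    this.flush = flush;
    this.minNewChars = minNewChars;
  }

  push(token: string): void {
    this.text += token;
    if (this.text.length - this.lastFlushedLength >= this.minNewChars) {
      this.doFlush();
    }
  }

  // Write whatever has not been flushed yet.
  end(): void {
    if (this.text.length > this.lastFlushedLength) {
      this.doFlush();
    }
  }

  private doFlush(): void {
    this.lastFlushedLength = this.text.length;
    this.flush(this.text);
  }
}

// Example: 5 tokens produce 2 writes instead of 5.
const writes: string[] = [];
const batcher = new TokenBatcher((fullText) => {
  writes.push(fullText);
}, 10);
for (const token of ["Hello", ", ", "world", "! How", " are you?"]) {
  batcher.push(token);
}
batcher.end();
```

A time-based threshold (for example, flushing at most every few hundred milliseconds) is an equally reasonable choice; the character threshold here just keeps the sketch deterministic.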

Theo's Typesafe Cult

•Created by Gary, el Pingüino Artefacto on 8/9/2024 in #questions

Extending React Markdown with any component

Hi, I'm trying to add charts to Markdown. Currently I'm using Mermaid for some basic charts, but I want to implement my own.

For example:

This Markdown code renders a pie chart using Mermaid.

How can I implement something to render my own react components?

I know Mermaid is open source and I could look into its code, but I wanted to ask first whether someone knows an easier way.

Also, it would be great to be able to render any React component, because that would unlock some cool functionality.

2 replies