IcyyDicy

Theo's Typesafe Cult

•Created by IcyyDicy on 1/6/2025 in #questions

Architecting a task queue

10 replies

Gotcha, thanks!

whoops, I guess I shouldn't do replies in threads

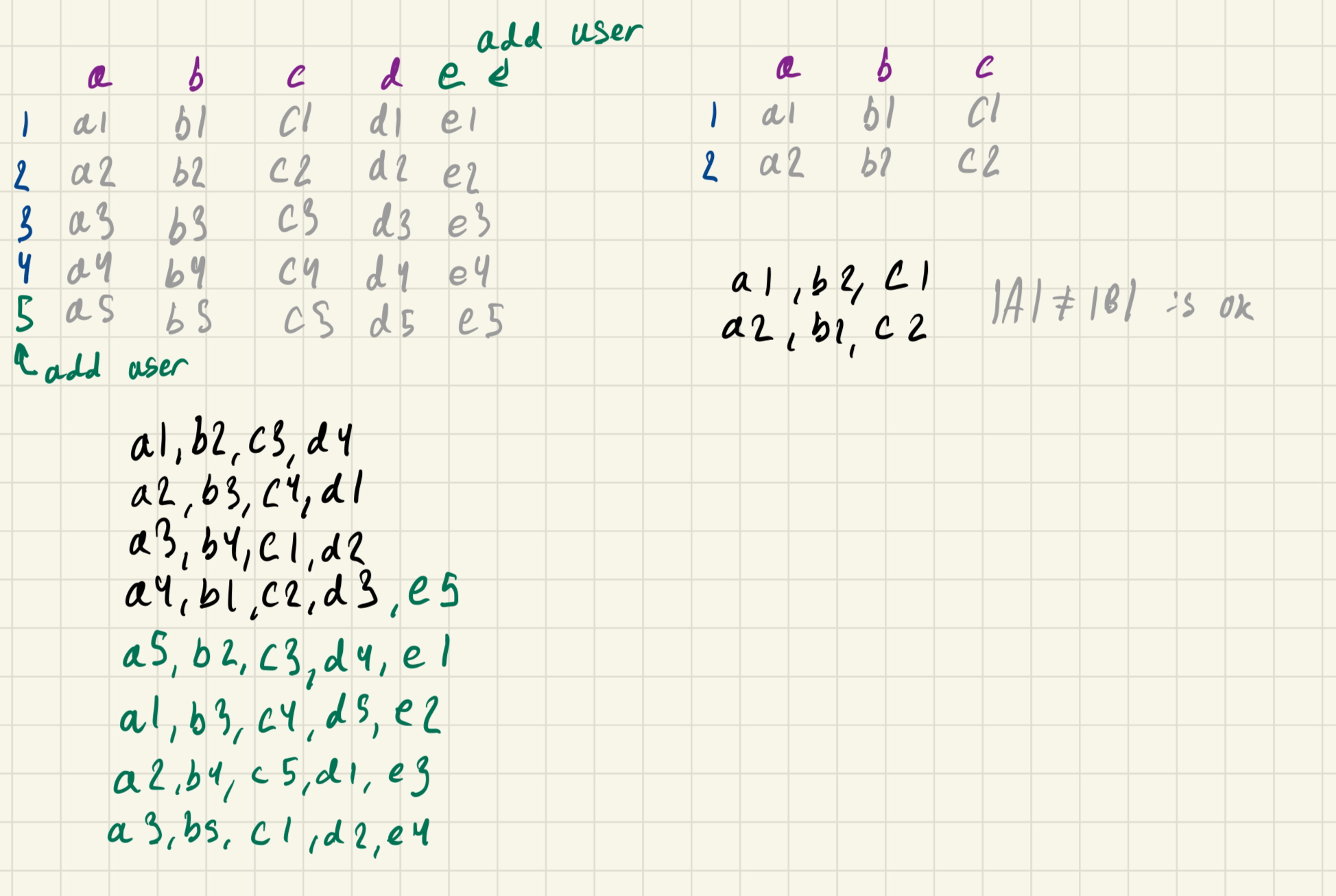

- How many people: Likely about 30 people

- Threading: I'm most comfortable with JS/TS, Python, and Java, and I'm getting up to speed with C++. I haven't written any threaded code myself yet, but I've seen some examples of what it would look like.

- Why multiple machines: Since we're aiming to hold this event in person and need a reasonable turnaround time for any new user/system prompts submitted, I assumed that a single machine would not be able to keep up with that many prompts.

number of user prompts * number of system prompts is quite ambitious 😅

No concrete plans yet on how to split up who submits which type of prompt, but if one half of the people submit user prompts and the other half submit system prompts, we'd get 15 * 15 = 225 total LLM chats. If we ask people to pair up, we can get that down to ~49 LLM chats (roughly 7 * 7).
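A rough sketch of that arithmetic in Python, assuming an even split between user and system prompts. `total_chats` and `group_size` are hypothetical names made up for illustration, not part of any actual plan:

```python
def total_chats(participants: int, group_size: int = 1) -> int:
    """Count user-prompt x system-prompt pairings under an even split.

    Assumption: teams are divided evenly between the two prompt types,
    and any leftover team is ignored. If the leftover team joined one
    side instead, the paired case would be 7 * 8 = 56 rather than 49.
    """
    teams = participants // group_size
    user_prompts = teams // 2
    system_prompts = teams // 2
    return user_prompts * system_prompts


print(total_chats(30))                # 15 * 15 = 225
print(total_chats(30, group_size=2))  # 15 teams -> 7 * 7 = 49
```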

I don't think most people would want to wait for the results of their prompts against all of the other prompts, so I think a reasonable guess at the throughput would be 2-4 results per user/system prompt per minute (30 to 60 LLM chats per minute, or 15 to 30 chats per minute if people pair up).
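To sanity-check those throughput numbers, here is a small Python sketch. The helper names are hypothetical, and the 10 seconds per chat is purely a placeholder assumption, not a measured figure; the real value would depend on the model and hardware:

```python
import math


def chats_per_minute(participants: int, results_per_prompt: int,
                     group_size: int = 1) -> float:
    """Target throughput, assuming half of the teams submit each prompt type."""
    prompts_per_side = participants / group_size / 2
    return prompts_per_side * results_per_prompt


def workers_needed(target_per_minute: float, seconds_per_chat: float) -> int:
    """Concurrent workers required if each chat takes seconds_per_chat.

    One worker finishes 60 / seconds_per_chat chats per minute.
    """
    return math.ceil(target_per_minute * seconds_per_chat / 60)


print(chats_per_minute(30, 2))                # 30.0
print(chats_per_minute(30, 4))                # 60.0
print(chats_per_minute(30, 2, group_size=2))  # 15.0
print(chats_per_minute(30, 4, group_size=2))  # 30.0

# Placeholder assumption: 10 s per chat (not a measured number).
print(workers_needed(60, seconds_per_chat=10))  # 10
```

If one machine can only run a chat or two at a time, a worker count like that is roughly why I assumed multiple machines would be needed.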