Architecting a task queue

Heyo! So me and some university buddies want to host an LLM prompt engineering event where people try to steal or prevent the theft of the password in the hidden system prompt. "The vision" is to have everyone's user prompts go up against everyone's system prompts and have everyone iterate to get better and better prompts.

To boil this down, after some person submits a new system prompt, the system should queue up LLM tasks to run that system prompt against other people's user prompts. Same thing but vice versa for any new user prompts. Of course, it should drop any queued tasks that don't have the latest prompts.

We're planning on architecting this using three parts:

- The front end that will take in the two types of prompts and display how they worked (NextJS)

- The back end to match up system prompts and user prompts and send them out to available LLM workers (Plain old Node + Express)

- Self hosted LLM workers (Multiple, separate computers!) (LocalAI , The computer lab has the compute available already and we don't want to pay for chatgpt tokens :p )

My question is, what would be a good way to glue all of this together? I'm not too worried about implementing some sort of self-cleaning priority queue, but more about how do I get all of these parts talking to each other nicely.

Should I just have the front end send the prompts to the back end and occasionally ping it for results? Or should I have the front end push things to something like a postgres database, have it watch the DB for results, while the back end also looks for changes in the database, and manages the queue based on the changes it sees? Or is there something completely different that people use for use cases like this?

Thanks for reading my ramblings, any help will be greatly appreciated!

Solution: Jump to solution

Jump to solution

Just to wrap this thread up in case someone comes across it in the future, I ended up choosing this:

tldr: No queues and a postgres DB as the glue

- just dump user and system prompts into a postgres database from the frontend and keep track of the latest of each prompt for that user...

7 Replies

I'll ask the obvious questions:

- How many people are you expecting to use this?

- Assuming the prior answer isn't "oh god, this is overwhelming", do you know any languages that can use threads?

- Is there a reason why you desire this on multiple machines?

I ask these questions to get you think if you actually need all this infrastructure. If you can keep all this confined to one binary, it will save you a shit ton of headache.

- How many people: Likely about 30 people

- Threading: I'm most comfortable with JS/TS, Python, Java, and I'm getting C++ up to speed. I haven't done anything with threading myself yet but I have seen some code on how it would be like to do.

- Why multiple machines: As we're aiming to have this event in-person and we need to have a somewhat reasonable result time for any new user/system prompts submitted, I assumed that a single a single machine would not be able to keep up with that many prompts.

number of user prompts * number of system prompts is quite ambitious 😅

No concrete plans on how to split up who submits what type of prompt yet, but if we were to have one half of people submit user prompts and the other half system prompts, we'd get 15 * 15 = 225 total LLM chats. If we ask for people to pair up we can get that down to ~49 LLM chats.

I don't think most would want to wait to get the results of their prompts against all of the other prompts so I think a reasonable guess at the throughput would be 2-4 results per user/system prompt per minute (30 to 60 LLM chats per minute, 15 to 30 chats per minute if people pair up)Couldn't find command 'many people: Likely about 30 people

- Threading: I'm most comfortable with JS/TS, Python, Java, and I'm getting C++ up to speed. I haven't done anything with threading myself yet but I have seen some code on how it would be like to do.

- Why multiple machines: As we're aiming to have this event in-person and we need to have a somewhat reasonable result time for any new user/system prompts submitted, I assumed that a single a single machine would not be able to keep up with that many prompts.

number of user prompts * number of system prompts is quite ambitious 😅

No concrete plans on how to split up who submits what type of prompt yet, but if we were to have one half of people submit user prompts and the other half system prompts, we'd get 15 * 15 = 225 total LLM chats. If we ask for people to pair up we can get that down to ~49 LLM chats.

I don't think most would want to wait to get the results of their prompts against all of the other prompts so I think a reasonable guess at the throughput would be 2-4 results per user/system prompt per minute (30 to 60 LLM chats per minute, 15 to 30 chats per minute if people pair up)'whoops, I guess I shouldn't do replies in threads

Funny bot

I would still run some tests first w/ resource utilization and if need be, I'd just throw a worker behind a queue and be done w/ it.

Gotcha, thanks!

Solution

Just to wrap this thread up in case someone comes across it in the future, I ended up choosing this:

tldr: No queues and a postgres DB as the glue

- just dump user and system prompts into a postgres database from the frontend and keep track of the latest of each prompt for that user

- backend periodically gets all of the users and the prompts they have from the database

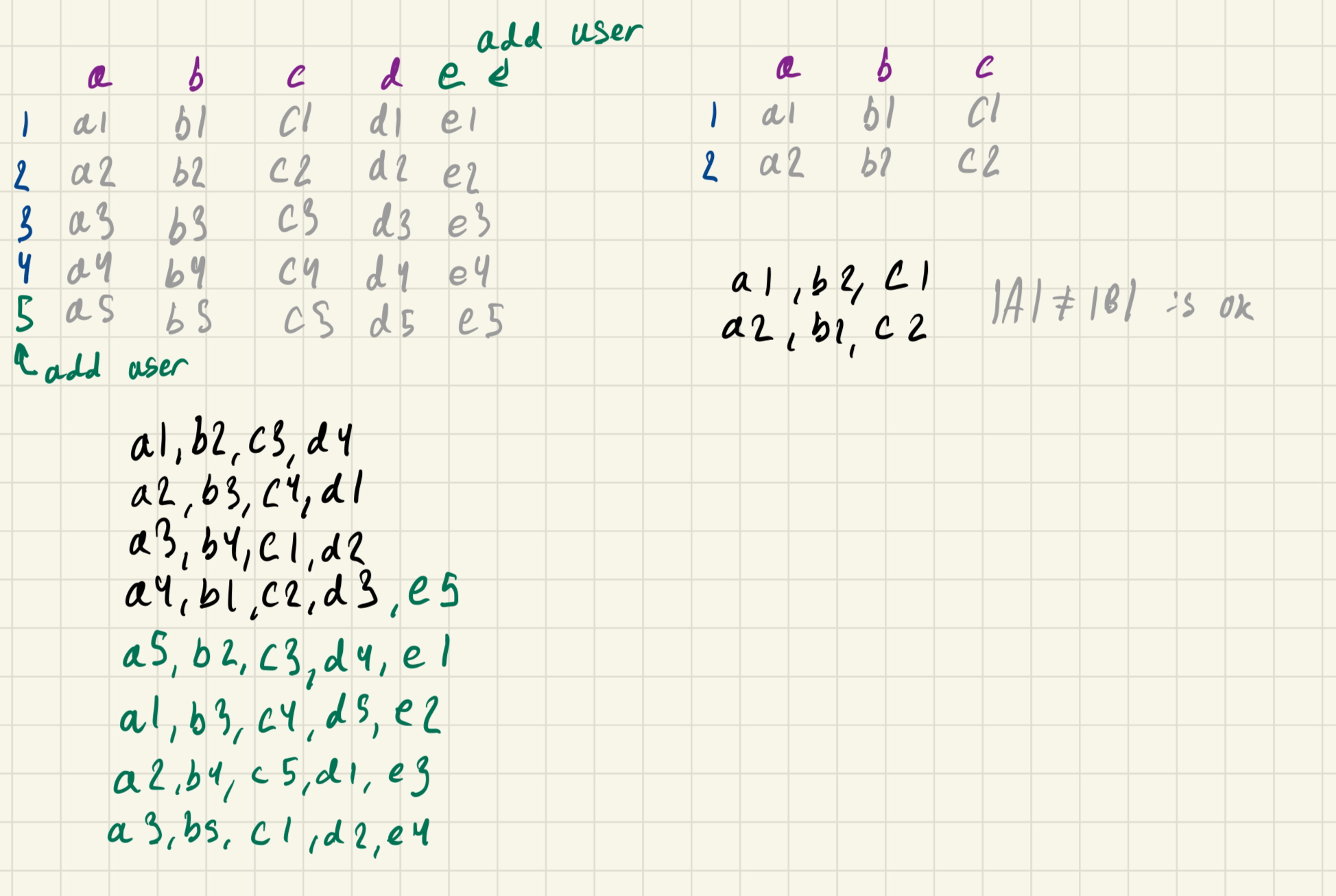

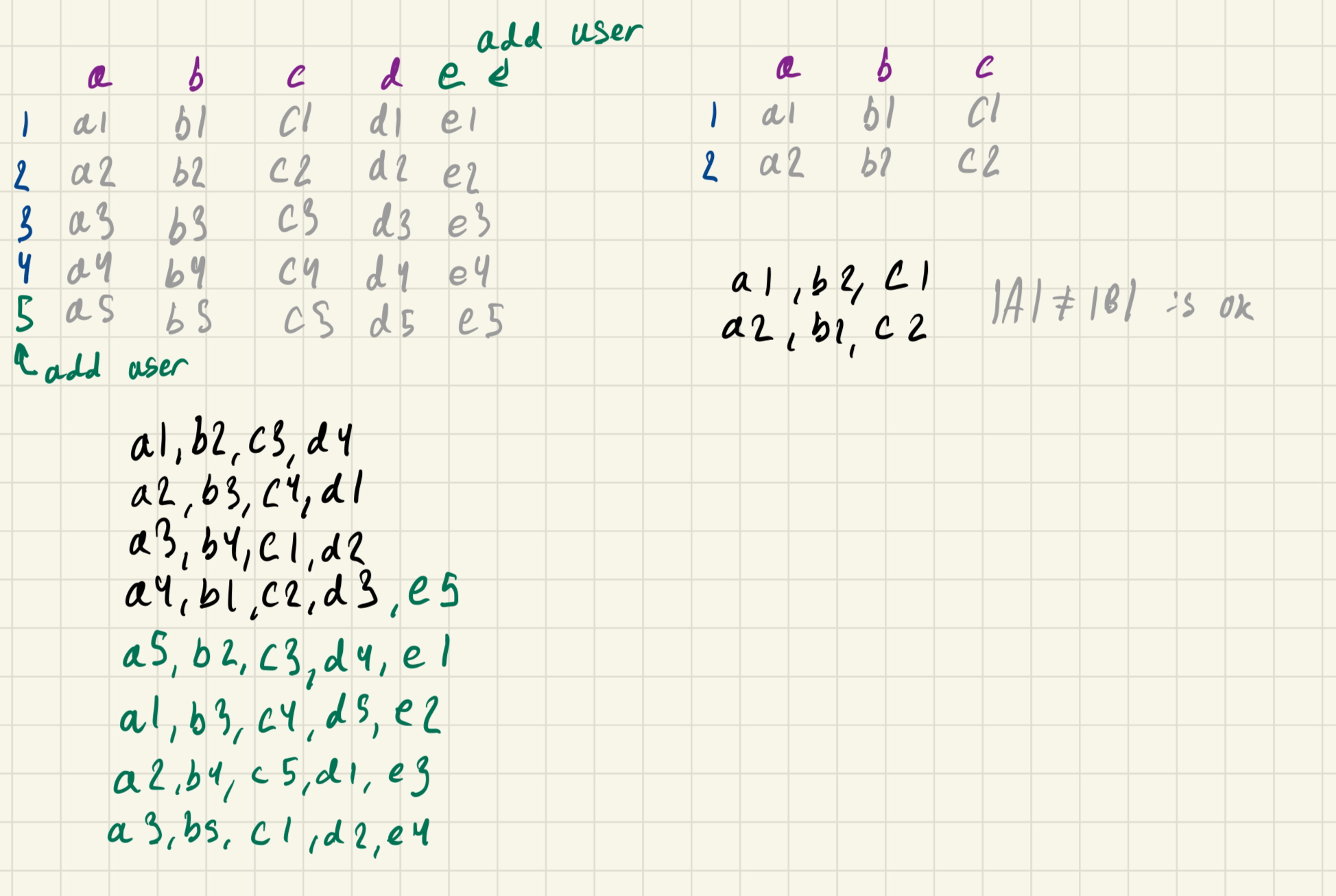

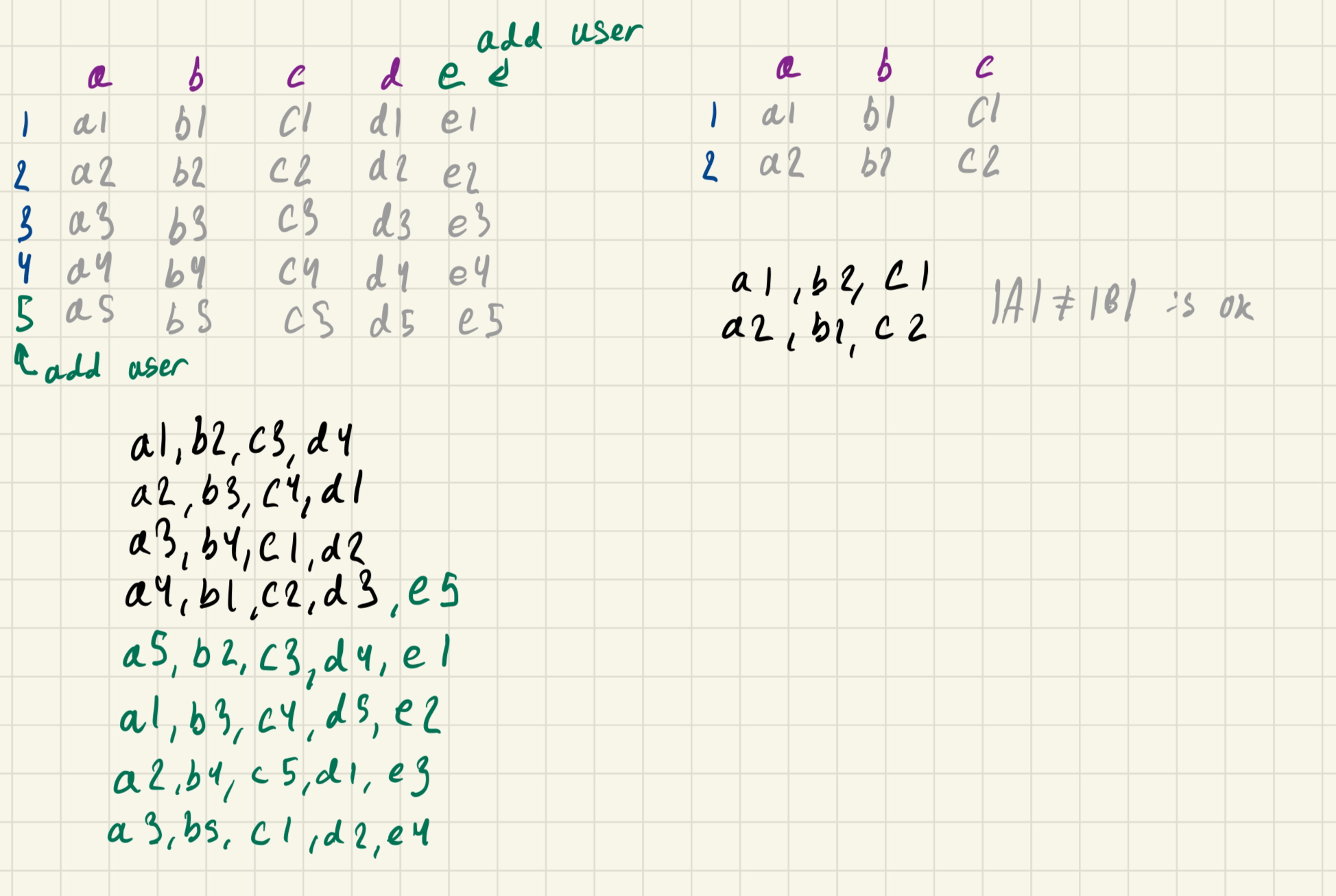

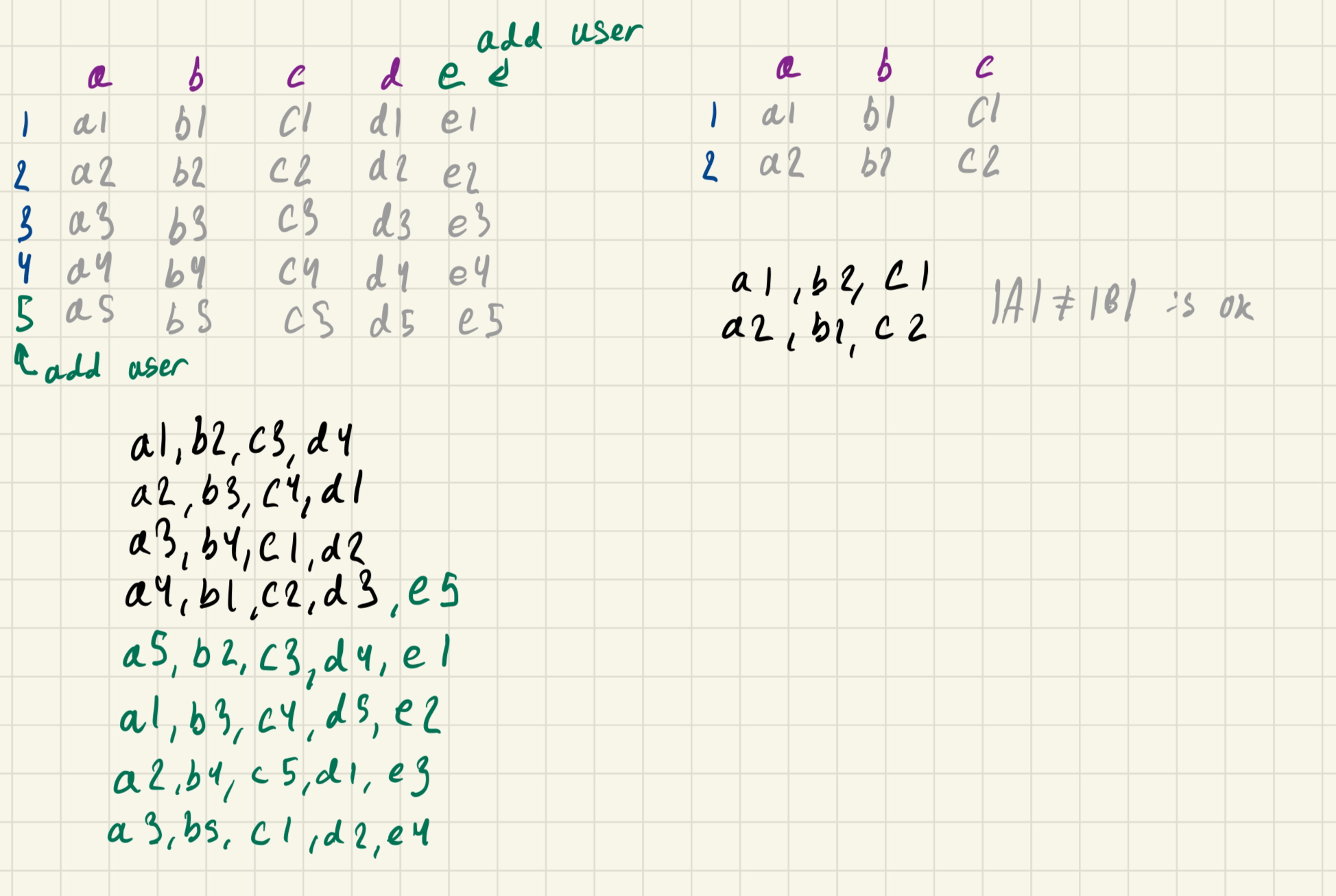

- If we think of all the combinations of user and system prompts as a matrix we can easily pair them up with a diagonal, so everyone's prompts get paired up equally (so we don't have one person's prompt getting all the attention while someone else's gets ignored)

- once we're done processing that diagonal just move everything one down and wrap around as needed

- If someone joins mid event, extend the diagonal we already have

- If someone didn't put in one of their prompts yet (the matrix isn't square) we just wrap around that diagonal and things still work

- We also store results after pairing up the prompts so we would skip a combination if we already have its result