List of SIMD bug

The following simple code doesn't work, is this because of memory? I find the documentation is lacking, it only says SIMD are restricted to powers of 2, which I compy with...

46 Replies

Looks like a bug. Could you please file an issue on Github?

sure

Thanks

To be clear, I don’t think this code should work, it’s just maybe the compiler can fail more gracefully

It looks like it's trying to constant fold the loop.

Thanks for the responsiveness! it isn't clear to me from the docs why the memory is not enough for SIMD (while for a pointer it is enough)

This is blowing up at compile time, not runtime.

My guess is that a bunch of copies of the matrices are being made as part of optimizations, and you're overflowing some limit somewhere.

so it wasn't my fault? surprising...

This also has the issue:

Even with the optimizer turned off.

I don’t think people should use simd like this though

It doesn't matter, the compiler is blowing up in a bad way here.

The requested size is

0xffffffffffff8000It does. Compiler passes have certain assumptions made wrt the thing it tries to do

Or put it another way, a upper limit should be set

this makes sense, but if the docs don't set what the intended purpose of SIMD is, you'll get more people like me

If I run that via 2's complement, it comes to 32768 (0x8000), which is a reasonable arena size.

SIMD should be able to at least kind-of handle.

Some of this is on Modular for advertising SIMD as equivalent to

np.array.If it generates bad code, then no

but SIMD is not array

it suppose to be a type you can roughly pass by reg, which chris said somewhere here

I mean it shouldn't crash, not that it should generate optimal code for this.

Probably an "oversized SIMD" lint is in order.

I mean it shouldn't even try to generate code for this. the passes that give you the most performance are usually also the passes that have very very bad asymptotic behaviour. So certain artifitial bound needs to be put on the problem size

My guess is just the compiler engineer just got lazy and roll with though assumptions

Kinda like how they handled UB

Another example: our

InlineArray type will always unroll the v in a check

the compile time will blow up and possibly end up in OOM or something similarReally what needs to happen is for

@register_passable to let you specify some formula for when it isn't any more, such as @register_passable(< simdbitwidth() * 4).We still did it because we don't know how to control unroll factor in the stdlib

There is a point past which you blow your icache and unrolling is no longer wanted.

I'm not even talking about the quality of generated code, just the fact that we are trying to generate code in this scenario is already bad

I think the simd problem first and foremost is this case. We won't reach the point to even talk about generated code, the IR is already too big to process

We effectively want an "@unroll_count()".

If you have enough microarchitectural information in sys.info, you can get the rest from there.

To help with the

InlineArray problem, yes. But for SIMD, i don't know what's needed. Any pass can be the place where the bloat happnsWhat I'm considering is that, given branch predictors are generally pretty good, there aren't many reasons to unroll more than 16x the number of vector ALUs in a CPU.

What I know is that we used to have

@unroll(n) where n is a unroll factorFor a BIG cpu, I don't see more than 4 vector ALUs happening.

I think that mapped to unroll_count inside of LLVM.

You can always do it manually, but it's not great.

And makes the code messy.

That's very fair. And I was confused in the beginning as well. Would you please also mention that in the issue? Thanks a lot!

However, the fact that large SIMD instances run out of memory even without loops is an issue.

Which is exactly why we moved away from it, IIUC. such a count is a hint to LLVM, but i think they want to do it entirely or almost entirely deterministically in Mojo itself. I think you can find recode of this in the change log

That is perfectly good.

But, missing the feature is not great.

We will have that back some day in the future I believe

I think Mojo needs something like FlexSIMD which doesn't have the "power of 2" restriction and uses kernels that self-tune based on compile-time info to process in blocks.

That can get treated like numpy arrays instead of mapping directly to hardware.

I don't think that's supported by LLVM though?

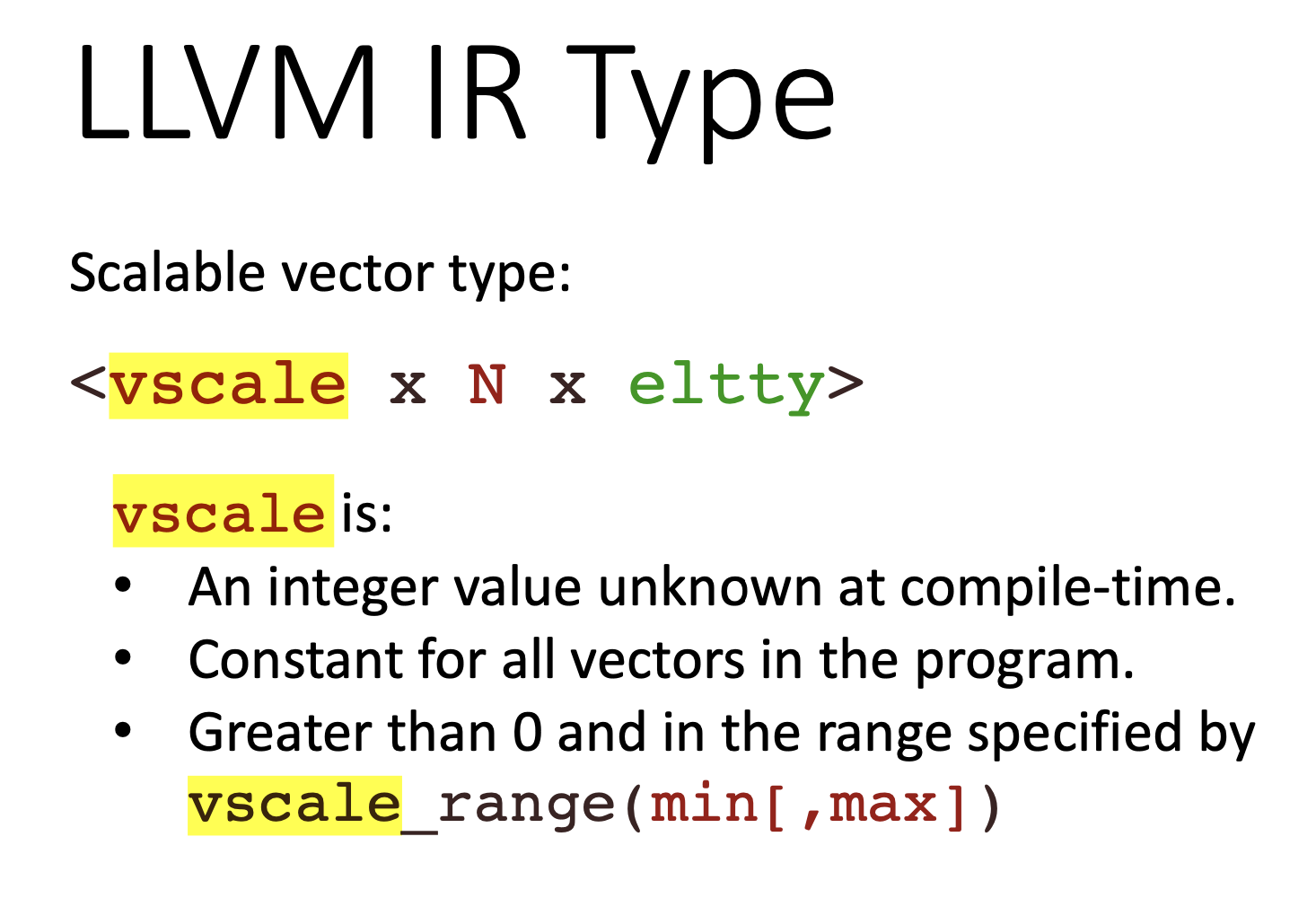

SIMD lowers to

<n x type> which is incapable of representing thatan equivalent to np ndarray would be very nice, ndbuffer is not too intuitive

FlexSIMD would be drain loops.

It's effectively going to be an

InlineArray[N, Scalar[dtype]], aligned to whatever is needed by the platform,

Then you write drain loops for everything.

This means no support for some of the more exotic operations like Galois Field Affine Transformations, but the basics should work fine.I was talking about the LLVM type. Just checked and my claim was wrong though, llvm seems to support

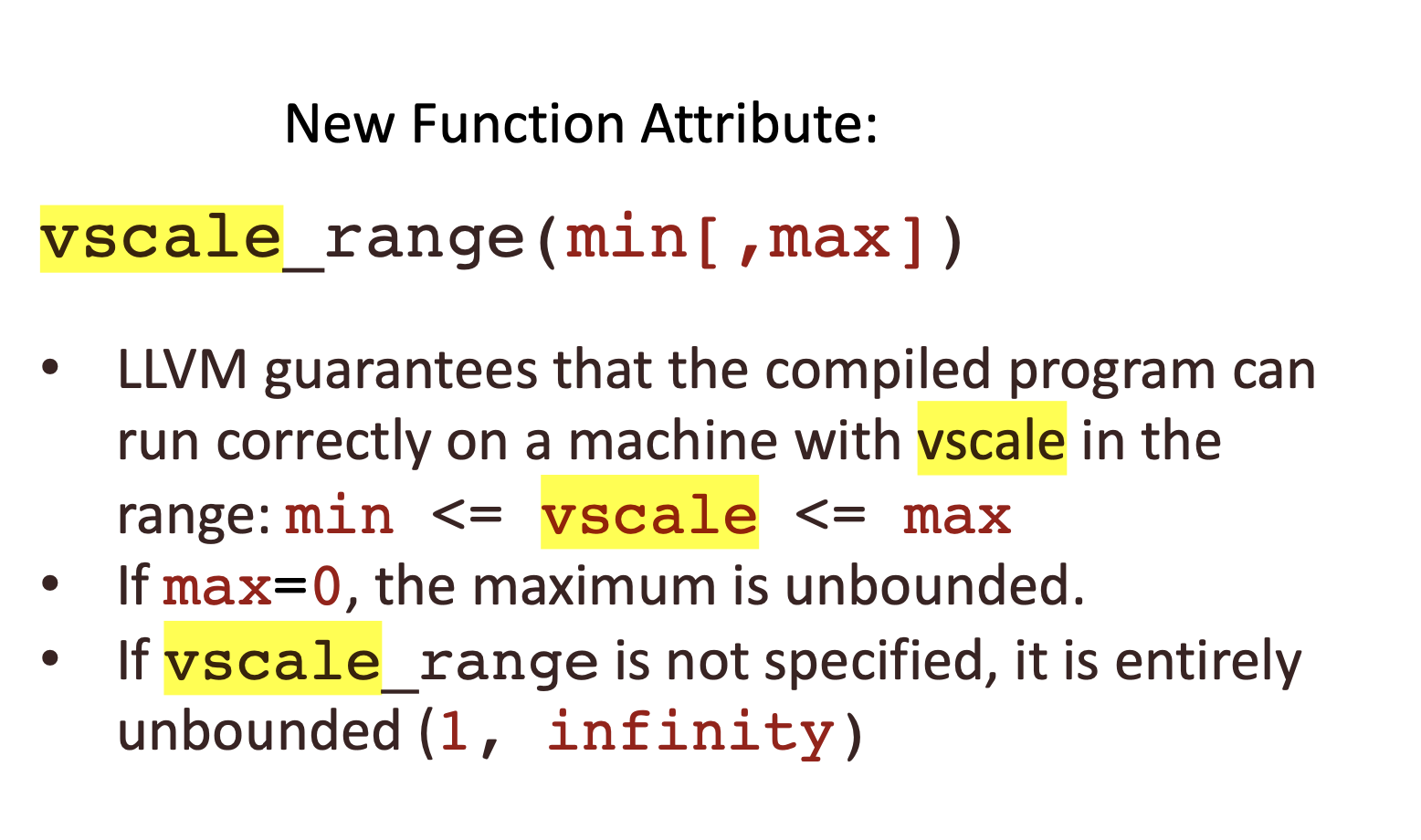

<vscale x n x i32> types where vscale is not burned into the IRiirc vscale is part of the architecture.

That's the vector width component of SVE and RSICVV.

https://discord.com/channels/1087530497313357884/1296578473493659688 I'll drop this new question here

Look like it's "just a number"

Is arm

vscale_range(1, 16)?

That looks a lot like information from the vector size register that ARM and RISCV have.

yea, since it has to lower to something concrete after ISel

But having this type representable gives me hope that we can support it in Mojo without much fuss

That is good, and something I've been after, but that still won't handle the "I have a massive array I want to do operations with" case.

If Mojo continue to advertise SIMD = np.array, people will expect to load large columnar datasets into a SIMD.

Yea, we should improve the doc

I am not sure about my answer but this what I think:

one variable of SIMD is like a hardware vector that you can use to do single instruction on multiple data, and the maximum value for one SIMD is upper bounded.

you can use check the value for your architecture using:

from sys.info import simdwidthof

alias simd_width = simdwidthofDType.int32

reagrding your code:

yes we can do somthing like SIMDtype=DType.float32, size=size. with size>>simd_width, but I think it's somthing that mojo handle without we know. "abstraction"

regarding your Matrix:

you can look at the matrix_mul_mojo_example from there webiste and look how they utilize the SIMD using the vectorize built in optimization.

also look at the parallelize to multiple processors....