❔ What's the fastest way to write this data to a file? (C# Console App.)

I need to write an int[][] to disk. Each int[] has 2 values, and the full int[][] has 100mil entries. What would be the fastest method of writing this to disk?

35 Replies

StreamWriter for sure, this amount of data should be streamed

Perhaps there are some better, lower-level ways, but I'm unaware of them

if each int[] has 2 values can it be a tuple instead? then you could at least get it all in one contiguous block of memory, though IO will probably be the bottleneck regardless

Shall i just combine them like such?

sw.WriteLine(xY[0] + ", " + xY[1]);

if you want this to be fast you shouldn't even be touching strings

I thought so from testing 🤣, I just don't know any other way to combine them with a separator.

the format is simple and consistent so you could just dump the bytes out into a file directly

well, you didn't specify any output format requirements so that will affect what solutions you can use

Ah my bad 😅.

I would prefer that each int[] is written on a separate line, and each value is separated by at least 1 non-number character.

Ex:

then just get rid of the string concatenation and write each part of the line individually with streamwriter calls

so ?

like

obviously test to see which is actually faster, but this should avoid some string allocations
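The code from this message didn't survive, so here is a minimal sketch of the idea, not the original snippet. `WritePairs`, `pairs.txt` and the sample data are made-up names for illustration. It skips the concatenated line string by writing each piece separately; `Write(int)` may still format each number to a short string internally, so measure before trusting it.

```csharp
using System.IO;

// Hypothetical helper: writes one "x,y" line per entry without building
// a concatenated string for each line.
static void WritePairs(string path, int[][] data)
{
    using var writer = new StreamWriter(path);
    foreach (int[] pair in data)
    {
        writer.Write(pair[0]);  // formatted with the current culture
        writer.Write(',');      // single-char separator, no string concat
        writer.Write(pair[1]);
        writer.Write('\n');
    }
}

// Small stand-in for the real 100-million-entry array.
int[][] sample = { new[] { -8000, 8000 }, new[] { 12, -34 } };
WritePairs("pairs.txt", sample);
```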

gets me

f.WriteLine($"{data[i][0]},{data[i][1]}"); gets 00:00:12.6447007

(these are very unscientific benchmarks)

Yeah, run it through BenchmarkDotNet to get info about allocations and all that jazz

realistically disk write speed is gonna be more of a bottleneck than any micro-optimization here

Ah it worked in <1m 🙂 thank you!

Hahaha

Oh

those must be small ints, my output file with random numbers is about 2GB

They are x/y coords. min is -8000 for both, max is +8000
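A rough back-of-the-envelope for why the file sizes differ, assuming ASCII digits, a one-character separator and a one-byte "\n" line ending (with "\r\n" add one byte per line). `MaxLineBytes` is a made-up helper name. These are upper bounds, since most values have fewer digits than the extremes.

```csharp
using System;
using System.Globalization;

const long entries = 100_000_000;

// Longest possible "x,y\n" line for a coordinate range, in bytes.
static long MaxLineBytes(int min, int max)
{
    int longest = Math.Max(
        min.ToString(CultureInfo.InvariantCulture).Length,
        max.ToString(CultureInfo.InvariantCulture).Length);
    return longest + 1 + longest + 1; // value, separator, value, newline
}

long coordText = entries * MaxLineBytes(-8000, 8000);              // 12 bytes per line
long fullText = entries * MaxLineBytes(int.MinValue, int.MaxValue); // 24 bytes per line
long binary = entries * 2 * sizeof(int);                            // 8 bytes per entry

Console.WriteLine($"text, -8000..8000: at most {coordText:N0} bytes");
Console.WriteLine($"text, full int range: at most {fullText:N0} bytes");
Console.WriteLine($"raw binary: {binary:N0} bytes");
```

So the x/y coords top out around 1.2 GB as text, arbitrary ints around 2.4 GB, and raw binary is a flat 0.8 GB either way.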

i wonder if parallelization would make it faster

SSD should be able to do 2.5GB/s write

can you even seek and write to the same file in parallel?

i guess it wouldn't even apply here since the line length is variable

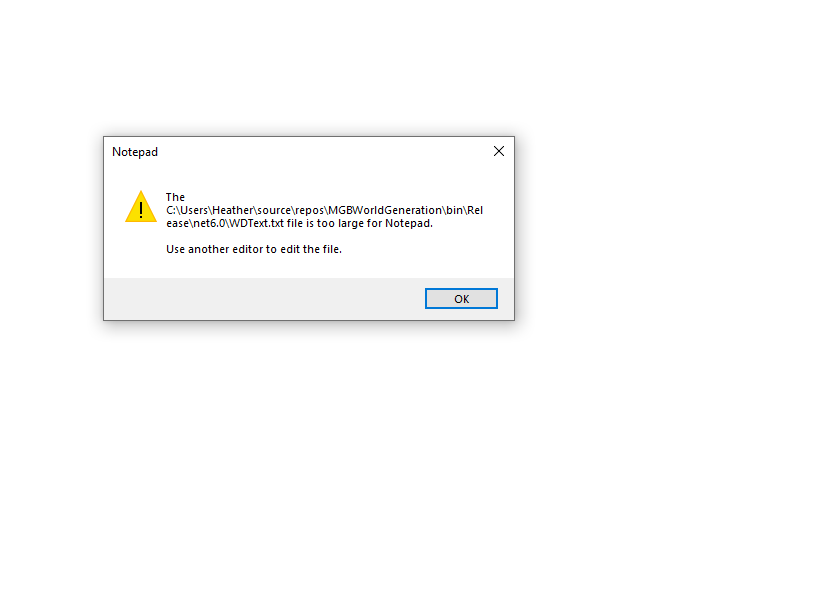

https://github.com/RolandPheasant/TailBlazer this is made for browsing logs, but also great for opening any text file that's too big for anything else. Can open tens of gigs of .txt files no problem

Ah ty, i was just using VSC and it crashed 🤣

I don't think you can write to the same file from multiple threads

2 seconds

abandon text, embrace binary

if you used an array of tuples you could just dump the whole block of memory into a file

assuming you don't have any concerns about endianness

which knocks it down to 0.5 seconds on my machine and pretty closely matches the theoretical max write speed of my SSD

so the question is, is having it in text format (and a jagged array) worth it taking 5-20 times as long?
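The original code block is missing here, so this is only a sketch of what "dump the whole block of memory" could look like, not the code that was benchmarked. It swaps the tuple for a small hypothetical `Point` struct with sequential layout so the byte layout is predictable, and uses `MemoryMarshal.AsBytes` so no `fixed`/`unsafe` is needed. `Dump`, `Load` and `points.bin` are made-up names. Bytes land in the machine's native endianness, as noted above.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

// Hypothetical helper: writes the array's memory to disk as-is.
static void Dump(string path, Point[] points)
{
    // Reinterpret the array as raw bytes without copying it.
    ReadOnlySpan<byte> bytes = MemoryMarshal.AsBytes(points.AsSpan());
    using var file = new FileStream(path, FileMode.Create, FileAccess.Write);
    file.Write(bytes);
}

// Hypothetical helper: reads the file back into an array of Point.
static Point[] Load(string path)
{
    byte[] bytes = File.ReadAllBytes(path);
    return MemoryMarshal.Cast<byte, Point>(bytes.AsSpan()).ToArray();
}

// Small stand-in for the real 100-million-entry array.
var points = new[] { new Point(-8000, 8000), new Point(12, -34) };
Dump("points.bin", points);
Point[] roundTrip = Load("points.bin");
Console.WriteLine($"{roundTrip[1].X},{roundTrip[1].Y}");

// Two ints, 8 bytes per entry, fields stored in declaration order.
[StructLayout(LayoutKind.Sequential)]
struct Point
{
    public int X;
    public int Y;
    public Point(int x, int y) { X = x; Y = y; }
}
```

The catch is that this only works on a flat array of structs. A jagged int[][] is 100 million separate little arrays on the heap, so there is no single block to dump.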

Sure you can

However you need to be very careful to not corrupt the hell out of the file
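For what it's worth, a hedged sketch of one way to do it on .NET 6 or later: with fixed-size binary records every chunk knows its own byte offset, so each thread can write a non-overlapping region through `RandomAccess.Write`. This doesn't carry over to the text format, where line length varies. `ParallelDump`, the flat x,y,x,y layout and the chunk count are assumptions for illustration, and whether this beats a single sequential write is something to measure rather than assume.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;
using System.Threading.Tasks;
using Microsoft.Win32.SafeHandles;

// Hypothetical helper: splits the array into chunks and writes each chunk
// at its own offset, so no two writes ever touch the same bytes.
static void ParallelDump(string path, int[] values, int chunkCount)
{
    long totalBytes = (long)values.Length * sizeof(int);
    using SafeFileHandle handle = File.OpenHandle(
        path, FileMode.Create, FileAccess.Write, FileShare.None,
        FileOptions.None, preallocationSize: totalBytes);

    int chunkSize = (values.Length + chunkCount - 1) / chunkCount;
    Parallel.For(0, chunkCount, chunk =>
    {
        int start = chunk * chunkSize;
        if (start >= values.Length) return; // nothing left for this chunk
        int length = Math.Min(chunkSize, values.Length - start);

        ReadOnlySpan<byte> bytes =
            MemoryMarshal.AsBytes(values.AsSpan(start, length));
        // The file offset is derived from the element index alone.
        RandomAccess.Write(handle, bytes, (long)start * sizeof(int));
    });
}

// Flat x,y,x,y,... layout standing in for the real data.
int[] flat = { -8000, 8000, 12, -34, 7, 7 };
ParallelDump("parallel.bin", flat, chunkCount: 2);
```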

stop this immediately

no

gotta go fast

actually i made a typo there didn't i

at least make it safe

i don't need no new fangled fancy memory apis

lol wtf is this

the same thing jimmacle did in that first code block

without using fixed/unsafe

i don't want to say that you should throw a database at it ... but you could throw a database at it 👀

like good luck loading the file or altering data in it

Auto flush to false, make buffer large enough, ???, flush, profit
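Spelled out, that could look something like the sketch below. The 1 MiB buffer is an arbitrary illustrative size, not a tuned value, and `WriteBuffered` and `buffered.txt` are made-up names. `AutoFlush` is already false by default on a `StreamWriter` you create yourself, so the assignment is only there to make the intent explicit.

```csharp
using System.IO;
using System.Text;

// Hypothetical helper: text output through a large StreamWriter buffer.
static void WriteBuffered(string path, int[][] data)
{
    const int bufferSize = 1 << 20; // 1 MiB, illustrative only

    using var writer = new StreamWriter(
        path, false, new UTF8Encoding(false), bufferSize);
    writer.AutoFlush = false; // the default; buffer fills before hitting disk

    foreach (int[] pair in data)
    {
        writer.Write(pair[0]);
        writer.Write(',');
        writer.Write(pair[1]);
        writer.Write('\n');
    }

    writer.Flush(); // explicit final flush; Dispose would also flush
}

// Small stand-in for the real 100-million-entry array.
int[][] sample = { new[] { -8000, 8000 }, new[] { 12, -34 } };
WriteBuffered("buffered.txt", sample);
```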

batch to thousand queries and pump that in

bulkinsert
