KV 2.0 Beta Sign Up

Hi all. I apologize for neglecting this channel for a while. We've been busy for the past little while on KV 2.0 and we're getting ready to start early beta testing if you're interested. There are some architectural changes that we're going to be describing later this year in a blog post but the high level summary of the benefits is:

1. Eventual consistency is a lot better bounded than it is today. We've observed worst case ~days for stale reads but now it should be closer to hours and we're working on cutting that down even further.

2. Read-after-write consistency should be nominally much closer to 1 minute or less globally even when you specify a long cacheTtl.

3. There may be some TTFB improvements. Please post feedback in this thread if you do see any changes, as I'm curious what people are seeing on their end.

This is still an early implementation so there are lots of improvements still planned. My hope is that over the next few months we're able to reduce the read-after-write consistency delay to tens of seconds. This is also a first step in terms of substantially improving TTFB (I won't spoil the surprise just yet about what number I'm targeting).

Just to be clear. Everyone is going to get this. This is purely about having an opportunity to provide feedback early - much easier for us to investigate issues when there's only a handful of accounts on this :).

Please register interest by filling out https://forms.gle/d1yrVjxpQNFSYYYf6

KV 2.0 will be rolling out over the next month or so. Submit interest to get into an early beta before we turn this on globally.

149 Replies

Ahoy!

Re what TTFB improvements you might see.

You might actually see some TTFB improvements for cached reads scaling with the size of your object (small objects maybe not so much, larger objects more). The overall effort we're working on this year will indeed be focused on really cutting down the TTFB for cached reads more than cold reads (there's not much we can do with truly cold reads).

Certain kinds of cold reads will see a speedup but overall I wouldn't expect that significant a difference. The biggest benefit is that you can specify a long cache TTL but a write will still invalidate it globally within a bounded amount of time. This means you only need to set a shorter TTL if you have strict security reasons (e.g. caching a login token).
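As a rough illustration of the long-TTL pattern described above, a Worker-side read might look like the sketch below. `cacheTtl` is the existing KV read option; the `KVReader` interface, the `readHot` helper, and the one-day value are stand-ins made up for this example, and the 60-second floor is the minimum mentioned later in this thread.

```typescript
// Minimal stand-in for the read side of a KV namespace binding
// (assumption: only the parts this sketch needs are modeled).
interface KVReader {
  get(key: string, options?: { cacheTtl?: number }): Promise<string | null>;
}

const MIN_CACHE_TTL_SECONDS = 60;     // minimum cacheTtl, per this thread
const ONE_DAY_SECONDS = 24 * 60 * 60; // hypothetical "long" TTL

// Clamp a requested TTL so it never goes below the 60 s minimum.
function clampCacheTtl(requestedSeconds: number): number {
  return Math.max(MIN_CACHE_TTL_SECONDS, Math.floor(requestedSeconds));
}

// Read a key with a long edge-cache TTL. Under the behavior described above,
// a write elsewhere should still invalidate the cached copy within a bounded time.
function readHot(
  kv: KVReader,
  key: string,
  ttlSeconds: number = ONE_DAY_SECONDS
): Promise<string | null> {
  return kv.get(key, { cacheTtl: clampCacheTtl(ttlSeconds) });
}
```

In a real Worker the `kv` argument would be the namespace binding from `env`; a shorter TTL would only be passed for cases like the login-token example.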

We're also going to be making some changes to convert latency "tail failures" to be availability issues instead. We see long tail latencies sometimes take 5 minutes (like 5-6 9s). We'll be adding much stricter timeouts. So overall aggregate cold reads TTFBs should come down but it's not necessarily going to be much comfort.

Again, you might see cold & hot read TTFB improvements. We just don't yet have any data and I am kind of curious what the new baseline would look like for end users (we'll have a better sense of impact once we shift over substantial amounts of traffic).

Any plans to add bulk ops support for bindings?

Bulk put definitely not. Is bulk delete at all interesting?

On bulk put, is that for reduced pricing for bulk puts, or regular puts in a single op?

Yes. I think it's kind of silly that people put the cache API in front of KV and I want to make sure that we fix the need to do that. I'm not yet sure what shape that will take though / if we'll do anything about it.

My understanding is that bulk puts are a convenience. You still get charged per object you throw into that JSON

And it's also partially to work around rate limiting using api.cloudflarestorage.com but there are architectural improvements we can make there to remove rate limiting altogether.

That’s fine. It’s just when you are putting a few hundred small values, it still seems a little inefficient to only do 6 puts at once

Can you please clarify? The bulk API today supports 10k objects to write...

Yeah, it just seems like a hack, when you are already in a Worker and have the binding there

So you're asking for a convenience binding that does:

for you?
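The code snippet in the message above did not survive in this export. Purely as a guess at the kind of helper being discussed, here is a sketch that writes many entries through a binding in batches of 6 concurrent puts (the figure mentioned earlier in the thread). `KVWriter`, `chunk`, `putAll`, and the `concurrency` parameter are hypothetical names, not an actual KV API.

```typescript
// Minimal stand-in for the write side of a KV namespace binding (assumption).
interface KVWriter {
  put(key: string, value: string): Promise<void>;
}

// Split items into consecutive chunks of at most `size` elements.
function chunk<T>(items: T[], size: number): T[][] {
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    out.push(items.slice(i, i + size));
  }
  return out;
}

// Write all entries, keeping at most `concurrency` puts in flight at a time.
async function putAll(
  kv: KVWriter,
  entries: [string, string][],
  concurrency = 6
): Promise<void> {
  for (const batch of chunk(entries, concurrency)) {
    await Promise.all(batch.map(([key, value]) => kv.put(key, value)));
  }
}
```

Each put in such a helper would still be a separate operation per object, in line with the billing note above.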

Never mind, I think I messed up my thinking

I combined two threads of thought in my head

I think it was something along the line of being able to have a Value that references another key. So basically being able to create an alias or symlink

Yeah. People have asked for symlinks on R2 as well.

It doesn’t even have to be a free read. Just that you don’t need two round trips to get a single value

I understand. The tricky part is figuring out how to define the semantics. e.g. what's the maximum traversal depth if someone does:

upload A

symlink B -> A

delete A

symlink A -> C

I think a depth of 1 probably knocks out the vast majority of use-cases.
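To make the depth question concrete, here is a toy in-memory model of alias resolution with a configurable maximum depth. Nothing here is a KV feature: the `Entry` shape, the `resolve` function, and the `Map`-backed store are all hypothetical, and the sketch assumes `C` exists as a real value.

```typescript
// A stored entry is either a real value or an alias pointing at another key.
type Entry =
  | { kind: "value"; data: string }
  | { kind: "alias"; target: string };

// Follow aliases starting at `key`, giving up after `maxDepth` hops.
// Returns the resolved data, or null if the chain is broken or too deep.
function resolve(store: Map<string, Entry>, key: string, maxDepth = 1): string | null {
  let current = store.get(key);
  for (let hops = 0; current !== undefined; hops++) {
    if (current.kind === "value") return current.data;
    if (hops >= maxDepth) return null; // another hop would exceed the limit
    current = store.get(current.target);
  }
  return null; // dangling alias or missing key
}

// The sequence from the thread: upload A, symlink B -> A, delete A, symlink A -> C.
const store = new Map<string, Entry>();
store.set("A", { kind: "value", data: "payload" });
store.set("B", { kind: "alias", target: "A" });
store.delete("A");
store.set("A", { kind: "alias", target: "C" });
store.set("C", { kind: "value", data: "other" }); // assumption: C is a real value
```

With `maxDepth` at 1, reading `A` resolves to C's value, while reading `B` returns `null` because `B -> A -> C` needs two hops. Whether that should be an error, a miss, or a deeper traversal is exactly the semantic question raised above.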

@beelzabub Not sure if this is something KV 2.0 would allow for, but it would be nice to have larger metadata for an entry. Alternatively having the option to return values when listing would work as well (though I don't see this being feasible when values can be up to 25 MB).

> it would be nice to have larger metadata for an entry

What's the metadata size limit you need?

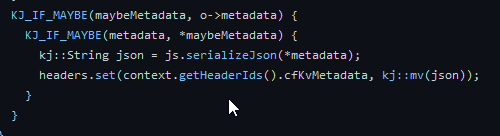

> return values when listing

I don't think that fits with KV's operational model at this time unfortunately. You can hide the value within the metadata though. One thing I do see a lot of is people putting `JSON.stringify(metadata)` into the metadata field. Don't do that. You can just give the `metadata` a JS object which saves on double-encoding.

What exactly does `metadata` do with the input behind the scenes? The types are `any | null` so I can understand confusion from users

IIRC it just calls `JSON.stringify` on it

> What's the metadata size limit you need?

As large as possible. The point is to use metadata instead of value to store the data which allows calling `list()` to retrieve many entries at a time.

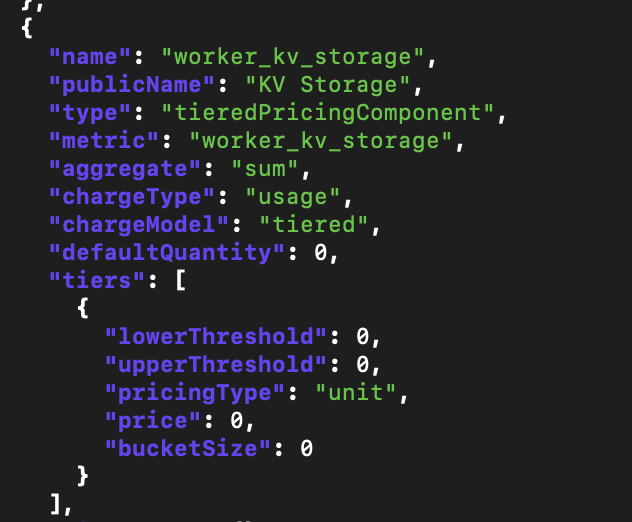

Current limit is 1024 bytes. Raising it to 8 or 16 KiB would do a lot.

Ok. I’ll keep noodling on it to think about what we can change to enable that
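A small sketch of the advice above: pass the metadata as a plain object and check its serialized size against the 1024-byte limit before writing. The `metadata` put option is the existing one discussed here; `KVWithMetadata`, `utf8Length`, `fitsInMetadata`, and `putWithMetadata` are hypothetical helpers, and measuring the limit on the JSON-serialized form is an assumption.

```typescript
// Stand-in for the part of a KV binding used here (assumption: simplified types).
interface KVWithMetadata {
  put(key: string, value: string, options?: { metadata?: unknown }): Promise<void>;
}

const METADATA_LIMIT_BYTES = 1024; // current limit quoted in this thread

// UTF-8 byte length of a string, computed without runtime-specific helpers.
function utf8Length(text: string): number {
  let bytes = 0;
  for (let i = 0; i < text.length; i++) {
    const code = text.charCodeAt(i);
    if (code < 0x80) bytes += 1;
    else if (code < 0x800) bytes += 2;
    else if (code >= 0xd800 && code <= 0xdbff) { bytes += 4; i++; } // surrogate pair
    else bytes += 3;
  }
  return bytes;
}

// True if the metadata, serialized once as JSON, fits under the limit.
function fitsInMetadata(metadata: Record<string, unknown>): boolean {
  return utf8Length(JSON.stringify(metadata)) <= METADATA_LIMIT_BYTES;
}

// Pass the object itself; stringifying it first would encode it twice.
function putWithMetadata(
  kv: KVWithMetadata,
  key: string,
  value: string,
  metadata: Record<string, unknown>
): Promise<void> {
  if (!fitsInMetadata(metadata)) {
    return Promise.reject(new Error("metadata exceeds " + METADATA_LIMIT_BYTES + " bytes"));
  }
  return kv.put(key, value, { metadata });
}
```

Double-encoding costs bytes as well as an extra parse: `JSON.stringify(JSON.stringify({ a: "b" }))` is longer than `JSON.stringify({ a: "b" })` because every inner quote gets escaped.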

I nominate @albertsp as the first victim errr... tribute gracious volunteer. Can I get a thumbs up if that sounds like a good plan?

A risky move

Are you sure that you dare? 👀 Alright then 👍

Not even 100% sure what I said yes to so I guess you can do whatever to my account 🙃

This is a dedicated namespace yeah? I haven't landed a double-billing fix but I assume you don't have any meaningful amounts of traffic on that namespace, yeah?

It is a dedicated namespace, yeah.

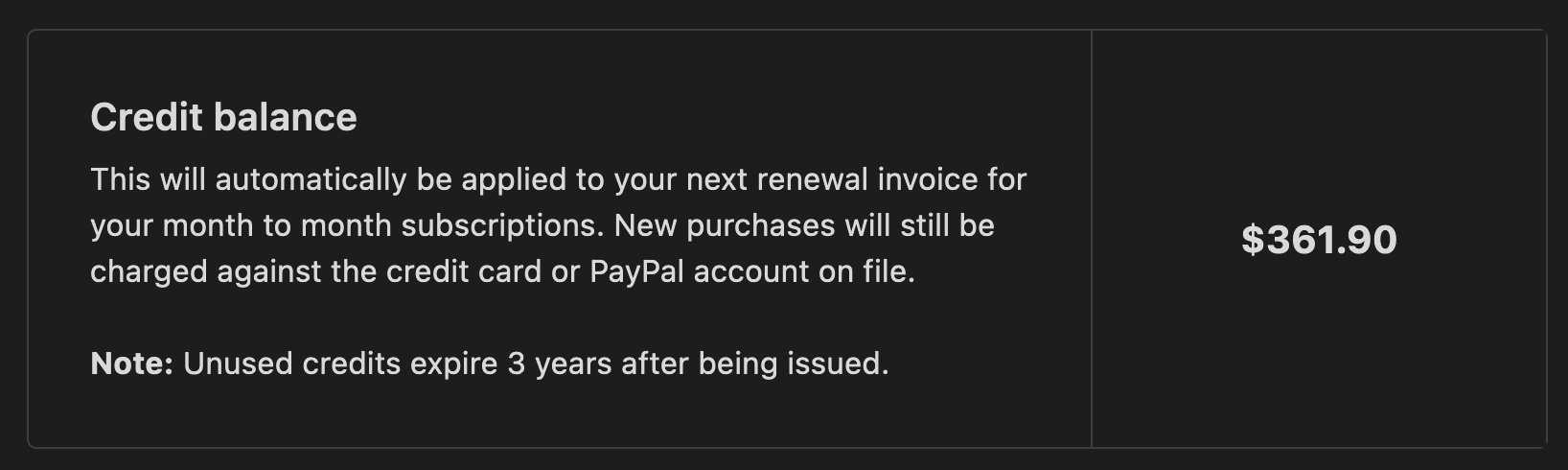

I also have Workers Nocost so billing should not be an issue 😛

That doesn't apply to storage products

Are you sure? The rate plan states the unit price is zero for all KV 🤔

I'm not. I'll trust you more here. I have 0 insight into how KV does billing. We'll be overhauling it in Q2/Q3 and I'll double-check if we want Workers Nocost to continue to also 0-rate KV (product question, not engineering).

Will take me about ~15 minutes on my side. If we've done everything right, you shouldn't really see any functional change on your end.

Workers nocost does indeed give zero rated KV storage right now, and afaik it's used by internal teams so changing it would be a big change and indeed requires wider discussion

Great, will prepare some tests! Credits should catch any over usage 🙂

Taking a bit longer as it turns out we didn't have a way to enroll by namespace only.

Anyone else have 0-rated accounts + dedicated test namespace?

I dooo 😀

522ed7942a6ec29727e101623a48ea76

I'll take one if you're offering, just filled the form

SGT

Me too if you're still offering, I filled out the form, acct id b9e510810e1e12fcd40826d266a1fb12, namespace: a4ca8235e436469b82bf6f2659640c32

My details are on the beta signup form

@chaika.me you listed 3 namespaces in your submission. Did you want me to start with just a4ca8235e436469b82bf6f2659640c32 or all 3?

@walshydev same for you - do you want both namespaces that you provided enrolled?

Yes please

Ah sorry for the confusion, if you can do all 3 it would be great. If it's extra work or makes things more complex, just that one would also be fine

No skin off my back. I'll action it on all 3. I can do it as an % of traffic by account or namespace. Unless told otherwise I'm just starting all the NO_COST people with a test namespace at 100%

I believe I included 2 namespaces in my form submission, specifically newly created ones for testing. Lemme know if that works 🙂

That's fine

The following namespaces are now at 100%:

@cherryjimbo the 2 namespaces you submitted

@chaika.me the 3 namespaces you submitted

@walshydev the 2 namespaces you submitted

@Erisa | Out of office the 1 namespace you submitted.

If anyone could make some sample requests to their namespace, I just want to make sure I hooked everything up properly.

Grrr... it looks down?

Well, at least I hooked it up right. Going to take it down until I figure out what's going on.

Actually, does anyone care if these namespaces aren't working?

Mine is just a general test one + one I made specifically for this so all good my end

Yeah I don’t mind personally - that’s why I created new ones for testing. Do what you gotta do 😀

Yep no worries, made those for testing. Interesting though, one of the namespaces looks dead dead (no uploading or reading) but another one I can read from but can't write to

Working through deploying a fix. Just juggling a few things at once.

Going to take ~3 min for CI to deploy the fix. Will update if it looks like it's working

Looks like it's alive again :blobhaj_ghostie_alive:

Yup. Looks live. What happened was that I thought a bug had been fixed so I'd removed the workaround. But it looks like the bug is still there so I just put the workaround back. Sorry about that

How's it looking from your end? Any interesting comparisons?

Few small tests around timings:

3 writes: 924ms, 862ms, 1396ms

- This feels like more than v1 but I'd need to run against v1

Cold read: 919ms

Hot reads: 119ms, 44ms, 46ms, 18ms, 129ms (all reading the same key)

- and a bunch more, generally sitting ~50ms hitting LHR (https://paste.walshy.dev/PzhHmMfHgm2d)

(all taken within the Worker so not the most accurate, will need to test with more accurate timings tomorrow)

Same performance as V1 is actually a great starting point.

Cold reads in a different in-region colo should be faster provided the key was already fetched. Truly cold reads for the first time are probably going to be slower unless we have a bug (yes - that's right, it's a bug in v2 if truly cold reads aren't slower).

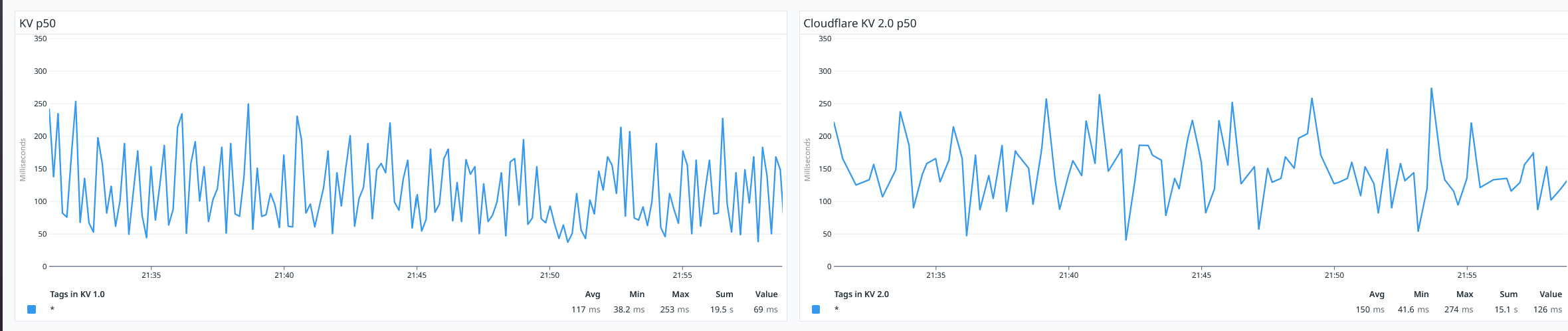

Hot reads are consistently faster on v1: https://paste.walshy.dev/r3dGH9jRprDL

(again timings in Worker and just spamming same key to same MCP colo)

v2 likes to sit ~50ms while v1 likes to sit ~10-20ms

KK. Send me a trace internally

fwiw I'm seeing the same, these tests are from DataDog at all of their locations every 30s, against a simple worker fetching from KV, tests are configured the same and only things using the Namespaces

(Not the most scientific tests but interesting anyway, hopefully will improve)

Let's not speculate on why it's expected to be slower. It's not what you think and it's not something we can share publicly

Oh ok

Ok. So my hunch is that we just don't have enough volume so there's a lot of cold starts for the scripts. Because this is not an eyeball script, we don't know to prewarm the script during SNI which is how cold starts normally are eliminated. As we shift more traffic (in aggregate), performance will improve. From the looks of it, V2 is only about ~8ms slower in practice if you add enough volume.

Looking deeper, P90 is within 1ms of V1. P95 and P99 look worse. We'll need more aggregate traffic overall before we start comparing tails though. It could all be a slow script artifact
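For anyone comparing their own timings, here is a minimal nearest-rank percentile sketch. The `percentile` helper is hypothetical and is not how the internal numbers above were produced; the sample data is the set of hot-read timings posted earlier in this thread.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of
// the samples are less than or equal to it.
function percentile(samplesMs: number[], p: number): number {
  if (samplesMs.length === 0) throw new Error("no samples");
  const sorted = samplesMs.slice().sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Hot-read timings reported earlier in this thread (ms).
const hotReadsMs = [119, 44, 46, 18, 129];
const p50 = percentile(hotReadsMs, 50); // 46
const p90 = percentile(hotReadsMs, 90); // 129
```

With only five samples the P90 is simply the slowest request, which is one reason tail comparisons need far more traffic before they mean anything.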

Oh. Something you can do in KV 2.0 that doesn't work in KV1.0. Set a very large cacheTtl and notice how writes from anywhere in the world are picked up within 1 minute.

And if you ask nicely, we can tweak a knob on your enrollment so that the consistency delay is extremely low. Like writes are noticed within a few seconds. We're not sure yet though how we'll expose that and whether we'll up-charge for lower values and whether we'll change the default of 1 minute. We'll have a better sense once we scale this up

This is very exciting 👀

I'm not a prolific tweeter. First tweet in a few years https://twitter.com/vlovich/status/1634040034084159490

Your account is private 😅

Yeah just realized. Not sure how to change

Is there a way to change the audience for a specific tweet?

Not to my knowledge

How about now?

Looks good!

Oh nice that's super cool

Yeah. I think that's the killer thing that's been hampering KV for a while. KV 1.0 would probabilistically refresh based on the cacheTtl. We could have tweaked it for KV 1.0 to achieve a similar effect, but we decided to focus our energy on KV 2.0 where the things it's doing mean that we can enable this more aggressively without worrying about being one misbehaving customer away from the service going down. In KV 1.0 it was opt-in and we had to approve your use-case / load. In KV 2.0 I think we can make this self-serve eventually (mostly just plumbing things through).

what happens if you set the ttl to like 5? will writes be available globally in 5 sec?

@beelzabub I filled in the form btw

cache TTL minimum is unchanged - it must at least be 60s. However that doesn’t control the refresh rate. We can set that to a small value so that we get availability within seconds. What’s really cool is you can set a really long TTL and lower the refresh rate. That way you get a super fast TTFB always at the eyeball and a write would become available within seconds (you’d get a minor hiccup for now as the asset is refreshed from the origin)

There’s no current mechanism to control the refresh rate but we can override a default for now on your namespace. I am interested in people trying that out. I can’t guarantee they’ll get to keep that superpower but open to experimenting

Awesome. I would not mind paying 10 times more for kv reads and writes if refresh rate would be under 10 seconds...

@albertsp reported a problem with cross-region consistency after a write. Hopefully the scenario just repros in our test suite. Should hopefully only take a few days but that's the blocker for enrolling anyone else for now.

hopefully that problem will be solved soon 🙂

Ok. We managed to land a change to fix the reason we weren't getting debug logs into our logging infra internally. I've done a preliminary spelunking on the logs and there's pretty clear evidence of what's going wrong - now it's just a matter of finding and fixing the issue (& figuring out why 2 independently written e2e tests failed to repro this). Also caught another bug in the integration of the new caching layer within KV where cacheTtl was always 60 regardless of what you asked but that one is obvious as to what the problem is.

I just asked about choosing between KV and DO in the help channel, seems like the solution is going to be cache in front of KV 😬 https://discord.com/channels/595317990191398933/1085543608154853408/1085565538849263636

I mean for now as a cost reduction sure. It will remove the benefits you can get from KV2 in terms of faster consistency

But to be clear. I can't promise we will change the pricing. Just that I am going to take a serious look at it next quarter and see if we can build a compelling case for changing it because it does feel like an unnecessary sharp edge to using the product.

Thank you friend!!! We appreciate your hard work and you advocating for us!

Great job @matt_d8380! We plan on ramping up the rest of the sign up list gradually starting at 1pm PST. Please let us know in this thread if you’re observing any issues.

Just wanted to share an update that everyone that had been signed up is now at 100% as per the signup request.

Restricted by namespaces: @cherryjimbo @albertsp @walshydev @Erisa | Out of office @ziga.zajc007 @willow_ghost @cboskipper @advany

Full account: @kian @zegevlier @skye_31 @Isaac McFadyen | YYZ01 @unsmart @sebastiaanyn @itsmatteomanf svg (doesn't seem to be in this room), @fritexhr @Dani Foldi @sam_the_dev @spongebhav @declan137

Please let me know if you're seeing any issues

seeing significant speed improvement in READ operation!! thanks so much

"supposed to" and "practical to do so" are different things - if you list 1k entries, you can't iterate over them without going through a binding/subrequest

Yeah. It's on my radar to lift the metadata size. I don't have a sense of when we'll get to it. My brain space is occupied with KV2 at the moment and that's eating up the rest of the year's bandwidth. I will probe a little bit to see if it's just a small knob we can tweak.

And to respond to storing the value in the metadata, that's a perfectly valid design choice. I will note that KV2 does nothing for `list` operations. So all the worst case consistency behavior and performance issues for KV1 today will remain.

It's the same as kv1 types and docs wise

At least I'm pretty sure all the changes are just improvements to the backend and no actual breaking changes in any way that need an update. It is faster consistency though so put a ttl over 60s for hot reads that are more consistent than kv1

Just to be careful about naming since I was a little loose. We're not actually going to call it KV2. There's no changes. But I need some way to talk about how we've set up a new foundational layer for KV to take it into the future.

We have an internal name for the transparent caching layer we built that is this foundational layer, but not sure if that's going to stick when we start talking about it externally so I don't want to mention it here (stay tuned to the blog post).

BTW the vast majority of customers should be using this now so enjoy. You should be seeing writes world wide within ~1 minute even with a cacheTTL of 1 day

Would there be any downsides now to setting really high TTLs? If not and it's just free performance, will the default TTL ever be changed?

I had a panic moment and we ramped back down to 0. We'll go back to 100% soon.

The default TTL can't be changed because we have people storing security tokens on us and changing the default would break those applications. I wish the default had been otherwise.

I can't wait for the blog post 😄

will you also mention how and if we can get access to faster refreshes than 1 minute?

filled in the form ❤️

That's for later this year unless you have an ENT contract in which case reach out to @charlieburnett

Currently using Upstash Redis. But good to know it's coming this year to non-ENT

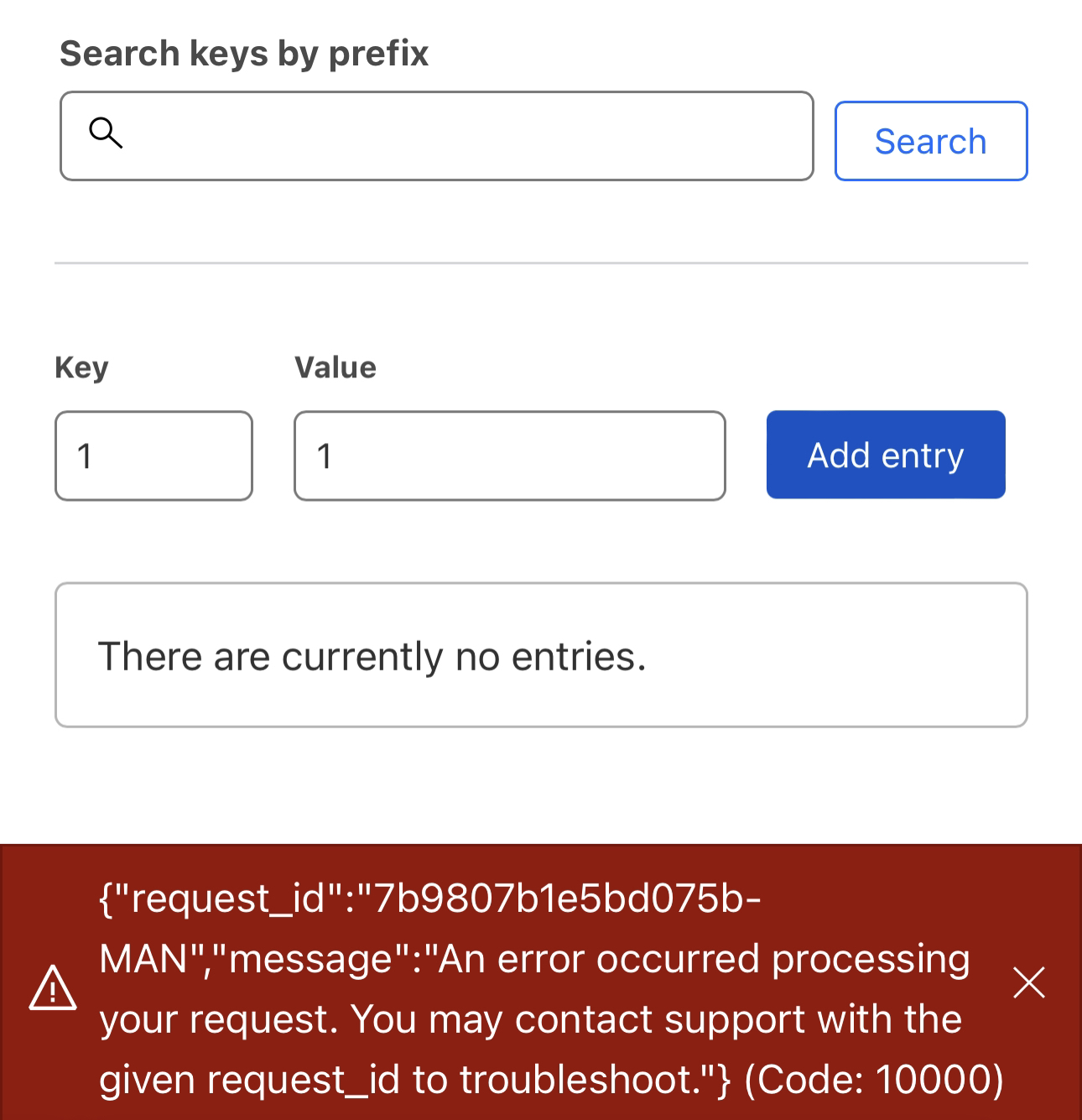

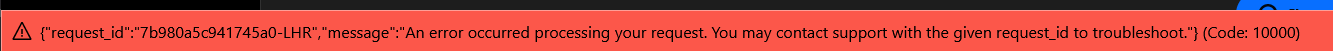

I am part of the 2.0 Beta. Something happened to one of my KV namespaces: its values were wiped out and I'm now getting this error on put: "KV PUT failed: 404 Not Found".

namespace id is correct.

just noticed, i have 6 namespaces, all of them are empty!

4801c8eb665defe9587a6e4376e60f0f

You're getting a 404 on PUT?

@robsutter @beelzabub

yes

"KV PUT failed: 404 Not Found"

Same

Namespace is showing as empty and unable to add entries

This a v2 namespace?

Pinged internally

Not sure if there’s any external way for me to verify but all of my account was opted in by Vitali

the one i put in the form is busted (4f924da20a514c67843c79085c9a8ed0)

but the others seem to be fine

Is it still broken right now?

Still is for me

Looks happy now

Plus my old entry shows up

same for me. no data

4f924da20a514c67843c79085c9a8ed0 isnt erroring on put anymore

What's the namespace ID?

same

What are your namespace IDs?

8471b96a59374cfea7e85506d93feed2

put is good for me now. no data!

ID?

@beelzabub ^ some with data not showing up

It is possible I deleted my data but I dont think I did 🤔

Unlikely to be deleted

I think it got a 404 from an internal layer which it's caching for longer than it should be. 1 sec

Data seems back for me...

Ok, maybe not fully...

no data for me. still

account id: 4801c8eb665defe9587a6e4376e60f0f

Oh List contains my data but a get request is 404 so the dash doesnt show it

how do we opt out of BETA?

If that's even possible, they'd have to do so in the backend.

It's disabled.

Should be working now

Yeah, data is back

The issue seems to have started ~70 minutes ago, @beelzabub 🙂

If you need that info

thanks @beelzabub. I can access the data now.

Btw was KV2 not released to everyone? I got that impression from https://discord.com/channels/595317990191398933/1078758016440082562/1096138513495367740

But I find it really odd to only see 1 report of issues in here if it was released to everyone 😅

I am yes, @cherryjimbo is still getting a 404 on one of his

yeah I'm still seeing a 404 getting a key in namespace ID c9ce0263ffc749bca465c4be042b6be0

I see it in the `keys` results though - it's there, I just can't fetch it

previous

let me check

- https://api.cloudflare.com/client/v4/accounts/522ed7942a6ec29727e101623a48ea76/storage/kv/namespaces/c9ce0263ffc749bca465c4be042b6be0/keys lists it fine

- https://api.cloudflare.com/client/v4/accounts/522ed7942a6ec29727e101623a48ea76/storage/kv/namespaces/c9ce0263ffc749bca465c4be042b6be0/values/results 404s (key name is `results`)
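The check described above (key appears in the listing but the value read 404s) can be sketched as below. The URL shapes are copied from the two links in this message; `keysUrl`, `valueUrl`, and `diagnose` are hypothetical helper names, and the authenticated HTTP requests themselves are left out.

```typescript
const API_BASE = "https://api.cloudflare.com/client/v4";

// URL that lists the keys in a namespace.
function keysUrl(accountId: string, namespaceId: string): string {
  return `${API_BASE}/accounts/${accountId}/storage/kv/namespaces/${namespaceId}/keys`;
}

// URL that reads the value stored under a single key.
function valueUrl(accountId: string, namespaceId: string, key: string): string {
  return `${keysUrl(accountId, namespaceId).replace(/\/keys$/, "/values/")}${encodeURIComponent(key)}`;
}

// Classify the symptom from whether the key was listed and the status of the value read.
function diagnose(keyListed: boolean, valueStatus: number): string {
  if (keyListed && valueStatus === 404) return "listed-but-unreadable";
  if (keyListed && valueStatus === 200) return "consistent";
  if (!keyListed && valueStatus === 404) return "absent";
  return "inconclusive";
}
```

The case reported here corresponds to `diagnose(true, 404)`, i.e. `"listed-but-unreadable"`.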

I can not

Yeah it's populated hourly via a cron worker anyways, so the next write might fix it?

actually looks like it's just kicked back into action - I can GET it now

yep all seems good now on my end, thanks

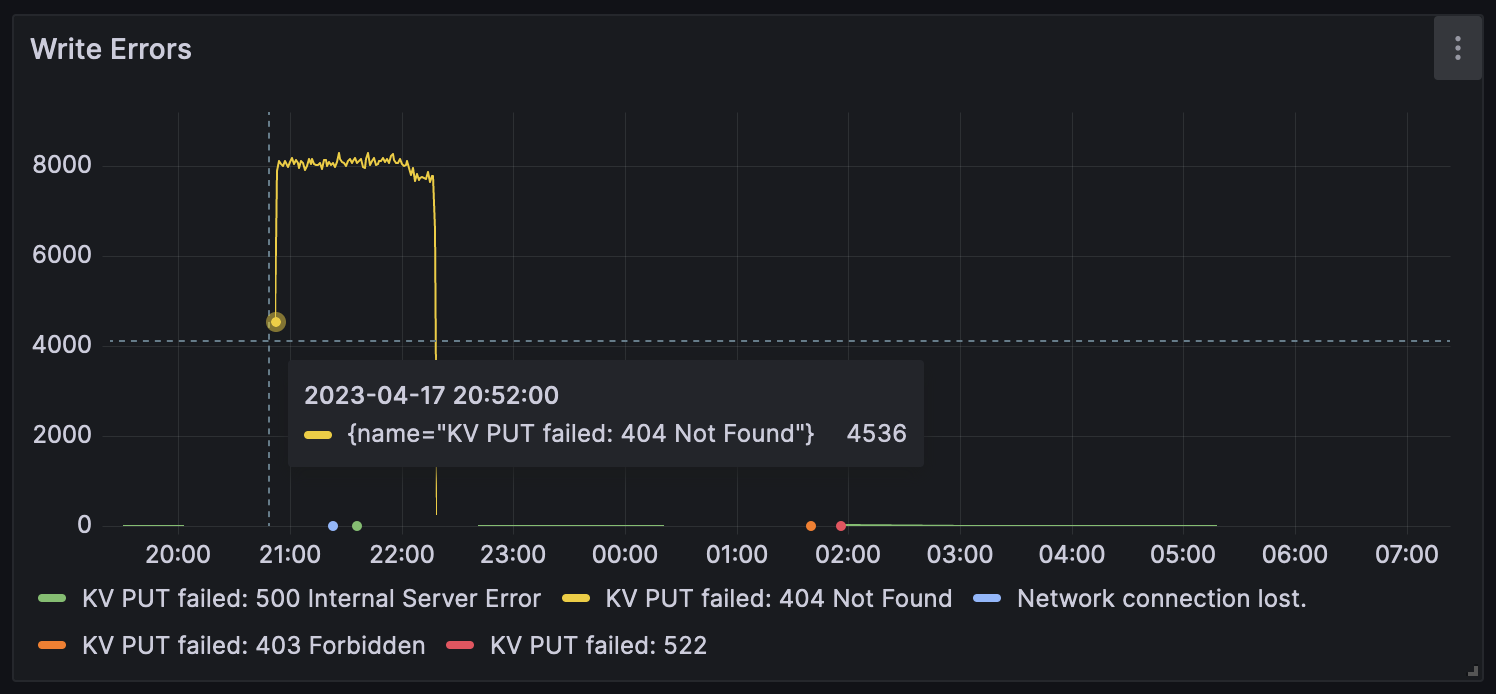

Specifically we ramped down to 0 for almost everyone but that early sign up list due to an issue we identified over the weekend. Then in trying to fix that issue, we did an accidental out-of-order deployment which negatively impacted the remaining customers.

We're exploring what kind of controls we can put in place to prevent this kind of failure mode again.

Sincerest apologies for the disruption. Not where we set the quality bar for ourselves.

Alerts on my Grafana dash would have caught this early. Or not since I was already asleep when this began.

(Timestamps are in UTC)
