SGLang DeepSeek-V3-0324

I have been trying to run Deepseek-V3-0324 using instant clusters with 2 x (8 x H100s) and have so far been unsuccessful. I am trying to get the model to run multi-node + multi-gpu.

I have downloaded the model from Huggingface onto a persistent and attach the persistent volume to my instant cluster before launching. After launching, I then run the Pytorch demo script as presented in https://docs.runpod.io/instant-clusters/pytorch to make sure that the network is working (it does).

I then follow the instructions to get Deepseek-V3-0324 running according to: https://github.com/sgl-project/sglang/tree/main/benchmark/deepseek_v3

Instead of following the absolute default instructions and doing:

In its place, I run the following command on each node:

The issue is that this hangs. I check nvidia-smi to see the model loading and it only ever loads each GPU up to almost 1GB before it goes up no further.

Any help would be greatly appreciated.

Deploy with PyTorch | RunPod Documentation

Learn how to deploy an Instant Cluster and run a multi-node process using PyTorch.

GitHub

sglang/benchmark/deepseek_v3 at main · sgl-project/sglang

SGLang is a fast serving framework for large language models and vision language models. - sgl-project/sglang

133 Replies

Before discussing this problem

Does a 600+ parameter fp16 model fit in 16xH100s?

With reasonable context length?

Or is it fp8?

Idk

Anyways why are you doing tensor parallel over network

They're using the instant cluster so it should work

Let me try and see how to run it on instant cluster, if it works I'll update here

launch_server.py: error: argument --nnodes: invalid int value: '${NUM_NODES}'

hmm i get this for eveery environment variable, including the world size one

im putting the command in the CMD immediately without going into web terminal

im using the lmsysorg/sglang:latest

yeah can you try try the 4bit first, and see ifit works? or try more gpu vram

Its tensor parallel tho

Over network you should pipeline parallel

Wow u r rich lol

Apparently ots fp8 in the official repo

So it should work

Tensor Parallelism - NADDOD Blog

Tensor parallelism alleviates memory issues in large-scale training. RoCE enables efficient communication for GPU tensor parallelism, accelerating computations.

Maybe becuz its cmd

Try bash -c 'command'

Yeah well not for an hour just testing

Yeah, I just want to run this thing. I'm happy to spend on GPUs for a period of time to get it running. But I can't even get the basics to work unfortunately... has anyone seen any example on any infrastructure setup of this working multi-node / pipeline parallelism? If not on RunPod than anywhere else? It seems that no one has got this running anywhere.

i haven't tried running anything via network honestly, and im interested in this too 🙂

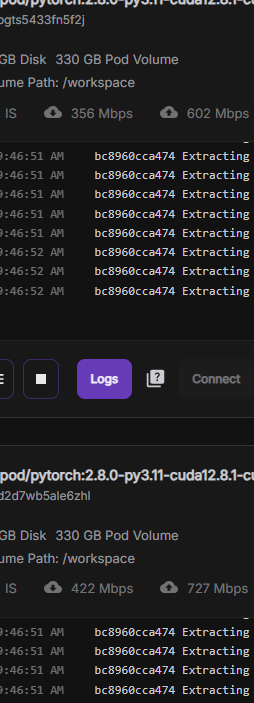

is this a normal expectation for cluster's network speed?

bgts5433fn5f2j

d2d7wb5ale6zhl

i feel like its abit too slow

oh and also riverfog, i've tried your rcommendation, bash -c works! it reads the env correctly.

also the other recommendation tp 8 when using total 8*2 gpus (16 total) will use only 8 gpus i guess, it doesnt load so i cannot know too, but when i try it some gpu vram usage are stuck at 0, some at 2% some 1%

when using tp 16, all gpus are sttuck between 1% and 2%

@frogsbody

Escalated To Zendesk

The thread has been escalated to Zendesk!

maybe try opening this

i think sglang doesnt support pp, only tp

Yes, I have exactly this issue.

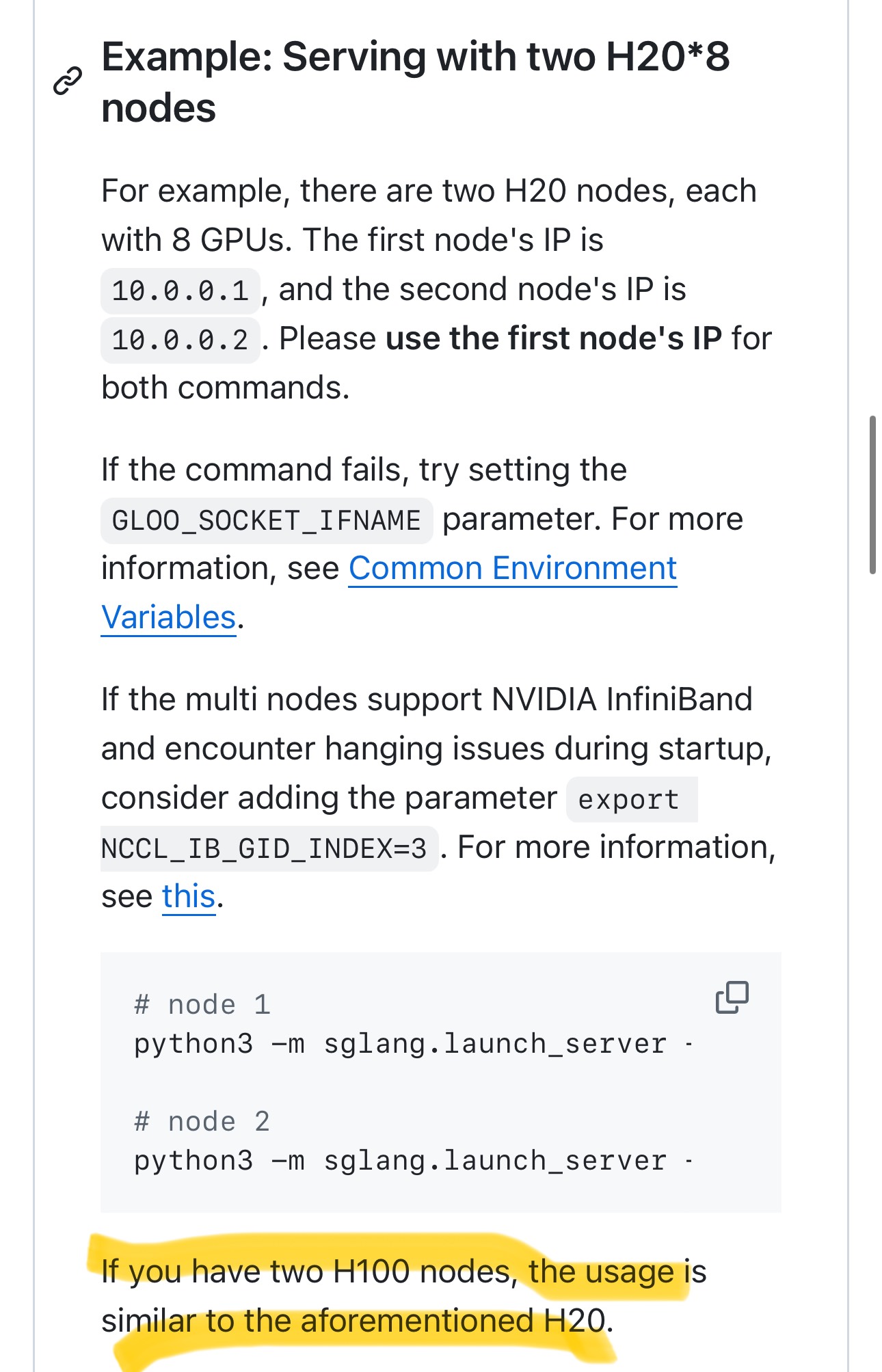

They claim to support pipeline parallelism:

GitHub

sglang/benchmark/deepseek_v3 at main · sgl-project/sglang

SGLang is a fast serving framework for large language models and vision language models. - sgl-project/sglang

I dont see the pipeline pararellism here

isnt it supposed to be a configuration arguments?

nnodes = 2

It’s a torchrun argument that gets passed through to the equivalent in SGLang I believe.i see

yeah then it might use both

did you open a ticket?

i will try vllm in the future maybe its better, id recommend you to try it too

@Jason have you tried vLLM with instant clusters? I believe the communication mechanism under the hood doesn't work with the way that Runpod sets up inter-node communication. I couldn't get it to work (this was a few weeks ago when it was still in beta though).

I wasn't sure where to open a ticket because I'm not sure where the error is really coming from... I think it's an SGLang issue but I wasn't clear.

are you still here?

ive never used sglang but

vllm pipeline parallel works well with multi node

even with not. that good network bandwidth

I'm still trying @riverfog7. Have you tested vLLM with Instant Cluster or do you have another solution where I can test multi-gpu in the cloud to run this?

@frogsbody but do you really need multigpu?

Yeah, I specifically need to test tensor parallelism and pipeline parallelism: 2 nodes of 8 x H100

i mean

you can host the same model in 1 node

you want deepseek v3 at fp8

right?

My requirements are to run Deepseek-V3-0324 over two nodes by whatever means - I just have to see that pipeline and tensor parallelism can work for the model

okay

is sglang required too?

It's less about actually using it - more about showing it can work

No, it can be anything

vLLM would be fine too

vllm should work

ive done it in the past

Have you got that working in Runpod Instant Clusters?

not with 2x8

but that doesnt matter

no in AWS

should work. with runpod tho

I had trouble running the basic torchrun script:

https://docs.runpod.io/instant-clusters/pytorch

Deploy with PyTorch | RunPod Documentation

Learn how to deploy an Instant Cluster and run a multi-node process using PyTorch.

It failed to run it when I tried with VLLM

Yeah, I tried AWS but they wouldn't give me any GPUs so now just trying with runpod

I will try vllm again

can you ping the other pod

Yeah I can ping it

with ip

It's something to do with Ray, which vLLM uses under the hood

do u use vllm docker image

or sth else?

I was using something else - but I can use that docker image

i suceededd with the docker image

soo

Thanks for letting me know, I'll try that out and let you know how it goes

@frogsbody https://docs.vllm.ai/en/latest/serving/distributed_serving.html

here's the multinode docs

GitHub

vllm/examples/online_serving/run_cluster.sh at main · vllm-project...

A high-throughput and memory-efficient inference and serving engine for LLMs - vllm-project/vllm

the cluster making script

docker run \

--entrypoint /bin/bash \

--network host \

--name node \

--shm-size 10.24g \

--gpus all \

-v "${PATH_TO_HF_HOME}:/root/.cache/huggingface" \

"${ADDITIONAL_ARGS[@]}" \

"${DOCKER_IMAGE}" -c "${RAY_START_CMD}"

this is the docker run command so

you can modify this and run it

Where do I run this? When I create the pod?

no so waht the docs says is

you have two physical machines

then you create a ray container on both physical machines and make a cluster.

but in your case you have no access to physical machines

Yeah, the issue I had is that runpod doesn't have that

I have to work within those bounds

I don't have AWS or anything to work with

so you should translate the docker run command to runpod's template

docker run --entrypoint /bin/bash --network host --name node --shm-size 10.24g --gpus all -v /path/to/the/huggingface/home/in/this/node:/root/.cache/huggingface -e VLLM_HOST_IP=ip_of_this_node vllm/vllm-openai -c ray start --block --address=ip_of_head_node:6379

Yeah, this was my next idea - I just have to figure out how to modify runpod to work with this since "docker" can't be run inside of a pod once I start it

It has to be part of a template or something. I'm pretty new to this side of Runpod.

should be

image name: vllm/vllm-openai

CMD: python3 -m vllm.entrypoints.openai.api_server -c ray start --block --address=ip_of_head_node:6379

mount nw volume to ~/.cache/

for the worker

image name: vllm/vllm-openai

CMD: python3 -m vllm.entrypoints.openai.api_server -c ray start --block --head --port=6379

env: VLLM_HOST_IP=ip_of_this_node

for the head

Are you starting these as two separate pods or using Instant Cluster?

In this case it looks like you're using two separate pods with global networking or something

can you apply diff images

Not with instant cluster

for the two pods in clusters?

or diff setting at least

Doesn't look likeit

lol

should we write a script?

its solvable

Yeah I'd love to lol, been trying to run DeepSeek V3 across two nodes for a while now

vllm serve /path/to/the/model/in/the/container \

--tensor-parallel-size 8 \

--pipeline-parallel-size 2

I was thinking this should just work

If I spin up a cluster

And go into each node and run this

And make sure to pass in the right information for the host...

But then it fails because of Ray

yeah but we need a script

to build the ray cluster first

it should run INSIDE a ray cluster

Yeah, I feel like that's outside the default scope of instant clusters. Are you suggesting we set up a ray cluster inside of our non-ray cluster?

that's what i meant

😄

the writing a script part was for that

That would be cool... solve a lot of problems lol

ill try with global networking first

just to see if it works

I'll try form a ray cluster in Instant Cluster again

try

python3 -m vllm.entrypoints.openai.api_server -c ray start --block --address=ip_of_head_node:6379

this inside a vllm container

Sure will try now

so you have seperate ssh access to

the two nodes right?

Yes

good

Will send sc in a sec

api_server.py: error: argument --block-size: expected one argument

isnt it --block?

I copied what you sent and that's what it gave me

In my case:

python3 -m vllm.entrypoints.openai.api_server -c ray start --block --address=ip_of_head_node:6379

this?

Yeah, that's what I did ^

hnm

vllm/vllm-openai

the image is this

Yep

i think il ltest in mine first

wait a sec

oh

it was

just

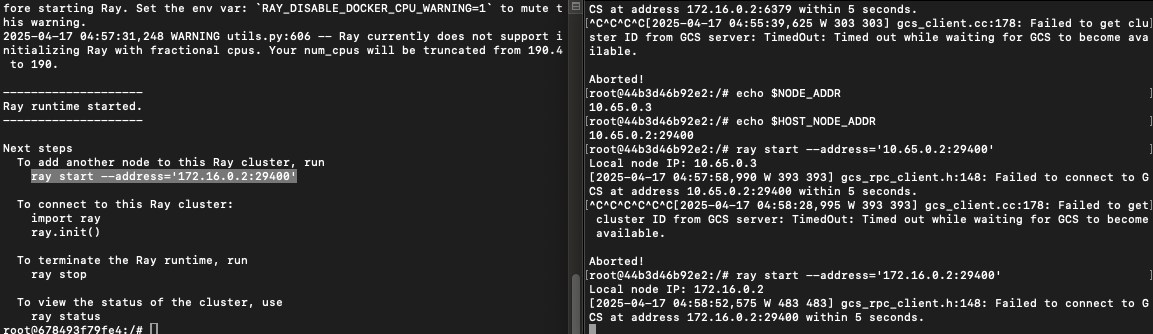

ray start --block --address=10.65.0.2:6379

or bash -c "ray start ...."

Yeah, I'm doing that right now actually

didnt see the --entrypoint /bin/bash

But can't get the worker to connect

xD

no ihavent

wdym?

any errors or logs?

Tried 6379 and couldn't get that to work

thats the port from env?

So tried a port I knew was exposed 29400 since I can ping between the nodes with that

ray start --block --address=10.65.0.2:6379

this?

This works

is adding --block

make a diff?

But connecting from worker doesn't

Let me try with block

ray start --block --head --port=6379

for the head

ray start --block --address=10.65.0.2:6379

for the worker

maybe it doesnt work bc u already started a vllm process in the start command

I actually haven't started anything in this one

I am not using the vLLM image this time. I started a new cluster that doesn't have vLLM. I pip installed it.

Regardless, Ray should work independently

yeah

same thought

but had nothing to blame other than that

check

ufw

just in case

What is UFW?

and other firewalls too

ubuntu firewall

Ah okay let me check

that was the problem in my last attempt

@frogsbody i have one question

Trying to check but have to install packages

@riverfog7 yeah what's up?

why does the first pic say 172.xx

but second pic says10.60.sth

That's the "master address"

master addr?

Overview | RunPod Documentation

Instant Clusters enable high-performance computing across multiple GPUs with high-speed networking capabilities.

NODE_ADDR is the address of the individual node

That's the one that ray uses

vLLM uses Ray under the hood and it isn't playing nicely

That's why I was hoping SGLang would work since it uses pytorch

But then we have that weird bug where it hangs model loading at 1% lol

I suspect that it's actually only loading the pytorch stuff

And never actually loads any of the weights in

maybe it binds to the wrong nic?

We use eth1 I think here

and recieves from the public ip

but not from private ip

Issue is that I'm not sure if that's something we can even fix under the hood with vLLM... I just don't know enough about how vLLM works

if ray works vllm works

And vLLM uses that same ray cluster?

yeah

Hmm

you can just use the ray cluster

as one computer

vllm does the finicky things by itself

I actually can't even ping between each machine now

maybe ray start --block --head --port 6379 --node-ip-address 10.65.0.2

in the head?

wut

can u just ping it

ping 10.sth

ufw status?

Interesting

My environment is messed up now

I can't run the default torch script here

Deploy with PyTorch | RunPod Documentation

Learn how to deploy an Instant Cluster and run a multi-node process using PyTorch.

lol

So I messed something up with whatever we tried

hmm

maybe start with a fresh pytorch image

I'

and install everything (ray and vllm)

I'm going to have to refund this account lol, it won't let me start another pod

Not enough money in the account lol

I may sleep for a bit and get back to this, interseting problem to solve

great

the community says

--node-ip-address providing this should make it bind to the proper address

so maybe try that next time

in the head node

Yeah will do, I'll post any findings here