AWS Lambda + Drizzle ORM help me please

Hi, I'm trying to learn a way to create my API on API Gateway + Lambdas with Drizzle ORM. Currently everything works connected to a Supabase Postgres DB, but I can't manage to deploy my lambdas to AWS :(. I've been trying hard with Serverless Framework, but it doesn't deploy the lambda handler, node_modules, or anything else; it just uploads empty package.json files plus handler.js and handler.js.map with a lot of boilerplate code (10000 lines).


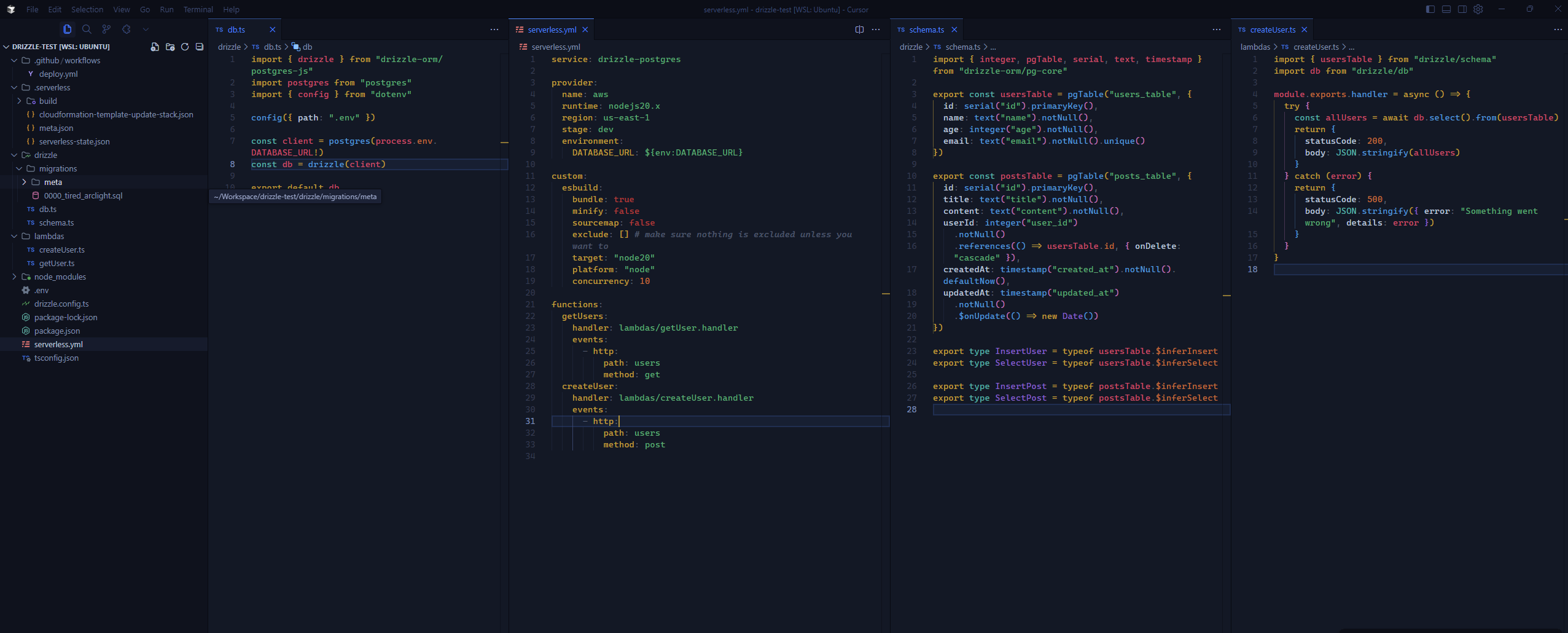

I'm going to provide a little more insight into my project structure:

Everything deploys "without error"

I realized it works, it just uploads those files instead of the actual source code

Did you solve it then?

Btw in case you didn't know you might want to check out sst for a great alternative to serverless framework

"Kind of" solved, as it still doesn't work to the extent I'd like. I'm looking for different ways to do that. Right now I was heading to SST, but I was in the middle of searching for ways to manage an API with API Gateway and lambdas in a repository, and pushing them to update the resources on AWS

So far I've only found videos and such about pushing just one Lambda with GitHub Actions, but I want a full repo where all the lambdas can be updated, and also working with Drizzle ORM

so maybe SST is the path

Sst or aws cdk. Sst is probably simpler if you’re only deploying an api gateway and db but I’m a big cdk fan if you’re getting more complicated

I have a question regarding SST or CDK though: when I deploy my lambdas, does the handler match what is in the source code, or is it a bundle of the functional code?

Like in Serverless Framework, I end up having my source code in the repository, but the handler shown for the lambda in the AWS console is different and can't be understood

Your TypeScript code as written is not actually runnable on Node. It's being compiled and minified. You can disable minification and enable sourcemaps in your SST configuration to make it more readable. I would also recommend developing locally with serverless-offline or another tool instead of deploying to AWS every time.

Thank you for the response! I've only recently started learning about this kind of thing, so my mind is full of misdirected information and I might mix up terms when trying to ask :(, but I'd like to have a mental image of this:

Can I have a repository with all my lambdas (working with Drizzle in this case) and be able to develop them INDIVIDUALLY from their source code on a branch? Or if I push to develop, would it update all my lambdas because the pipeline would run over everything? Also, about developing offline with SST, does that mean, for example, that I'm going to use the "offline" API routes given by SST in my application instead of the "deployed" ones?

there's kinda 2 paths: live lambda or serverless offline, i'll direct you to the docs on the nuanced differences. Since you're just using api gateway, sls offline might make it conceptually a little easier to work with.

https://v2.sst.dev/live-lambda-development#how-live-lambda-is-different

https://www.serverless.com/plugins/serverless-offline

Sls offline will not deploy anything anywhere. it runs in a terminal on your machine. On the other hand running sst dev with live lambda is going to replace all of the lambdas up on aws with stubs which are essentially proxies to your machine. If you use that, you're going to want a different environment on AWS for each developer to use


Thank you so much! So in the case of using Serverless Framework, the development flow would be setting up my lambdas in the repository, deploying them with the serverless CLI command (which would create those lambdas in AWS), and from then on, for each lambda I want to update, I just run the serverless deploy again?

I'm also confused because I've seen ways to do this with GitHub Actions or CodeBuild, where I keep my lambda in the repo and on push it updates the lambda in my AWS console. But I don't know if that can be done in a repository with a lot of lambdas (all the tutorials have used just one lambda), with each lambda sharing a common package (Drizzle in this case). Also, for that last scenario, would that mean I have to create my API Gateway by hand in the console, or use another IaC tool such as Terraform? As far as I can tell, serverless (or GH Actions) is just lambda-deployment oriented

Really on a production situation you'd want to only be deploying from a pipeline. Pushing it up from your machine directly is liable to get yourself into trouble. You can just set it to run the deploy when you push to develop or master/main, then make PRs to those branches (get into the habit of working your changes in a branch off of develop, merging in that branch to develop once you've got your feature working, then merging develop into master/main)

Serverless Framework handles deploying multiple lambdas (and so does SST). You just add them to your serverless.yml file as different endpoints under functions, like you screenshotted above.

The development flow with sls offline is this: you start the server in your CLI and it spins up all of your "lambdas" on some localhost port (i.e. it's running only on your computer, just mimicking what it will be like on AWS). Then you can run your webapp or whatever with that URL as your base API URL (or use Postman, or just run curl against your localhost endpoints). In production that API URL should be set to your API Gateway URL and it will work the same, except it's running on AWS Lambda instead of your PC. It automatically watches your changes, so you can make updates and call the endpoints to test them out.
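A rough sketch of that base-URL switch, in case it helps. The helper names, the `API_URL` variable, and port 3000 are all assumptions for illustration; use whatever address sls offline prints in your terminal.

```typescript
// Hypothetical helper: pick the API base URL for the web app.
// Locally it points at the sls offline server, once deployed at API Gateway.
type Env = Record<string, string | undefined>;

// Assumed local address; sls offline prints the real one on startup.
const LOCAL_API_URL = "http://localhost:3000";

function apiBaseUrl(env: Env): string {
  // API_URL is a made-up variable name; set it to your API Gateway URL in production.
  const deployed = env.API_URL;
  return deployed && deployed.length > 0 ? deployed : LOCAL_API_URL;
}

// Build a full endpoint URL without doubling or dropping slashes.
function endpoint(env: Env, path: string): string {
  const base = apiBaseUrl(env).replace(/\/+$/, "");
  const suffix = path.startsWith("/") ? path : `/${path}`;
  return `${base}${suffix}`;
}
```

The app code only ever calls `endpoint(...)`, so switching between your machine and AWS is a matter of one environment variable.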

I haven't used live lambda so I can't help you there. Presumably it keeps the same aws gateway endpoints and just proxies your requests down to your machine.

The key benefit of these tools is that your code can be shared. you can make a single module (like your drizzle client) and import it into each of your lambdas to use it. It's not really a separate package (package has a special connotation in nodejs land, would refer to something you can npm install etc with a package.json file). Each lambda is essentially just a bundled up version of your whole application with the entry function set to the function you specify in your serverless.yml file. SST just handles that packaging and the cloudformation updates when you deploy to set up the api gateway, lambdas, and other aws resources

I feel like I'm mixing terms. In this case, when you refer to SST handling deploying multiple lambdas and the development flow with sls offline, you're referring to Serverless Framework and SST as separate things, right? sls as an "alternative" to SST?

I managed to make sls work the way I showed at the start of the topic. The way I got there was to create my lambdas as files such as createUser.ts, and then when I add them to serverless.yml and run "serverless deploy" it creates the lambda on AWS like in the picture. That way the resource is already uploaded to AWS and ready to use (I believe) if needed in development/production. The part I'm not understanding is the actual flow of "things-to-do".

1. I create my lambdas with serverless framework, deploying them and deploying the API route

2. I can develop with sls offline, that gives me a localhost endpoint to test my lambdas on my application

3. I can deploy (and update the resource) of my lambdas with serverless framework? or do i need to implement github actions (and the way of zipping the lambda and pushing it with the arn) for this case so the lambdas are updated?

And about the last statement on the key benefit of these tools: it seems fairly straightforward, but I really feel like I'm missing a piece of information to wrap my head around it all, sorry for that. So I'd like to ask how that "bundling" and deployment works. With SST / serverless, is it done for each lambda in the repository? Every video and tutorial I've watched works with only one lambda, zipping the src

I'm sorry if I'm not being clear, I'm not a native English speaker and there's a lot of info in my mind, so it's kind of confusing 😦

I can see how serverless vs SST might be confusing, they don't make it easy.

sls offline isn't an alternative to SST, it's a tool for developing locally to mimic/emulate what your code will do once deployed to AWS. This is important because, imagine you were working with a few other people: you can't all be deploying changes to the same AWS resources willy-nilly at the same time, they'd overwrite each other and you'd have a bad time.

Prerequisites to the following example (i'm writing this part after i wrote the list below):

Your GitHub Actions are set up to run the deploy command on new commits to develop (serverless deploy if you stay on Serverless Framework, sst deploy if you move to SST)

You followed the instructions to install serverless-offline and add it as a plugin to your serverless.yml file here: www.serverless.com/plugins/serverless-offline#installation

You don't actually need anything deployed to AWS to use sls offline. Let's say you wanted to add a new lambda for DeleteUser

1: Add the new function to serverless.yml under your existing functions

2: Make a new file at lambdas/deleteUser.ts

3: Add a function named handler in that file which calls your drizzle client to delete the user

4: run sls offline in your terminal, it should have a new endpoint for DELETE /users

5: iterate on your function definition, call your handler to test it

6: once you're satisfied with the updates, make a git branch named something like feature/delete-user and commit your changes with a message like 'added user delete endpoint'

7: merge your branch into develop, github action will run and deploy to AWS

8: test your new endpoint using the API gateway url once the aws deploy has completed, your changes should be reflected
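Steps 2 and 3 could look roughly like this. It's only a sketch: the `UserStore` interface and the in-memory store are hypothetical stand-ins for your shared Drizzle client so the example runs without a database, the event type is trimmed down, and reading the user id from a path parameter is my assumption about how the route is defined.

```typescript
// lambdas/deleteUser.ts (sketch)
// Trimmed-down shapes for the API Gateway proxy event and response.
interface ApiEvent {
  pathParameters?: Record<string, string | undefined> | null;
}
interface ApiResult {
  statusCode: number;
  body: string;
}

// Hypothetical stand-in for the shared Drizzle client module.
interface UserStore {
  deleteUser(id: number): Promise<number>; // resolves to the number of rows deleted
}

// In-memory implementation so the sketch runs without a database.
function createMemoryStore(ids: number[]): UserStore {
  const users = new Set(ids);
  return {
    async deleteUser(id) {
      return users.delete(id) ? 1 : 0;
    },
  };
}

// Factory so the same handler logic works with the real client or the stand-in.
function makeHandler(store: UserStore) {
  return async (event: ApiEvent): Promise<ApiResult> => {
    const id = Number(event.pathParameters?.id);
    if (!Number.isInteger(id)) {
      return { statusCode: 400, body: JSON.stringify({ error: "invalid id" }) };
    }
    const deleted = await store.deleteUser(id);
    return deleted === 0
      ? { statusCode: 404, body: JSON.stringify({ error: "user not found" }) }
      : { statusCode: 200, body: JSON.stringify({ deleted: id }) };
  };
}

// In the real file this would be exported so serverless.yml can point at it.
const handler = makeHandler(createMemoryStore([1, 2]));
```

In the real lambda you'd pass in a store backed by your Drizzle client instead of `createMemoryStore`, and every other lambda can import that same client module.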

When your action runs sst deploy, it's going to create a CloudFormation deployment. It will bundle each lambda's code into a zip, create 'assets' in AWS S3, and tell AWS/CloudFormation "Create/update or delete each of these lambdas within this app to match this configuration I'm giving you, and here's the location of the files in S3 that I want you to use for each one. Also, please create/update an API Gateway with these endpoints, each backed by one of those lambdas."

Sorry if that's not a good explanation. It's all backed by CloudFormation, so I'd recommend looking into how that works if you want to understand what's going on behind the scenes a little deeper. I'd also be happy to give your code a look this week if you publish it publicly, but I have a day job so it won't be until tomorrow evening US time at the earliest.

Thank you so much again! All that info is so valuable to me, so I really appreciate it, and don't worry, it's a very good explanation!

I think I might need to start a new SST project and set everything up there. From what I understood, this would work with SST and also with developing offline with Serverless Framework. I'll try to create a repo for this and give you the link in case you want to see it.

The way it works, then, is that for each file I create, say lambdas/deleteUser.ts in this case, I create a lambda with its handler, and if it's pushed to develop/main, it triggers GH Actions to deploy using SST and create the resources on AWS (the lambda and also the route in API Gateway)?

Also, about GH Actions: for my use case, where I manage all my lambdas in one repository, it has to work with sst deploy, right? Isn't it enough to provide a workflow such as this one (taken from a video; the issue I find is that it only covers one lambda, and I need to provide its ARN)?

I was looking at the Drizzle ORM examples for AWS Lambda, and the way they handle it is with Serverless Framework:

https://github.com/drizzle-team/drizzle-orm/tree/main/examples/aws-lambda

The flow I see there is: we create the resources, deploy them, and then whenever I want to update the lambdas, I run the deploy command again (all using Serverless Framework). That can come in handy and seems simple, but I guess SST provides a better way to do this, integrating it more robustly into a repository?

That’s where sls offline comes in, you wouldn’t need to deploy every time. You’d be running sls offline and hitting the endpoints that are running directly on your machine.

Regarding the gh action you posted: that’s a bad way to do it. Sst deploy will basically do that zip and upload, and do the updates to your lambdas to use that uploaded asset. It will handle everything in your functions section of the yml. You won’t have to call the aws cli yourself at all

When I get out of work I'll keep looking into SST and creating that repo, in case you'd like to take a look at it, though I have one question:

With SST, whenever I create a lambda (in this case, say I just create the handler in my repository and add it to the yml file to be pushed and deployed), I then create a branch to push the lambda, say feature/delete-user. Would that trigger the pipeline and go over all the lambdas in the repository, updating them even if their source code hasn't been touched? Or does it pick up just the lambdas I've changed and deploy/update accordingly?

There is a lot of stuff I'm just starting to learn about CI/CD and pipelines, so I really appreciate your patience when explaining, thank you for that!

Not unless you set up your action to trigger on push to branches with that name.

This piece here in your screenshot above is what determines that.

So this will trigger on push to the branch named "main" if any files were altered that match "src/**" (any file under the src directory), and then it will run the jobs
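To make that rule concrete, here's a tiny model of the decision in TypeScript. It's not how GitHub implements it, just the logic as described above; `PushEvent` and `shouldRunDeploy` are made-up names, and the path check only covers the simple "anything under src/" case.

```typescript
// Simplified model of the workflow trigger: a push to "main" that touches files under src/.
interface PushEvent {
  branch: string;
  changedFiles: string[];
}

function shouldRunDeploy(push: PushEvent): boolean {
  const onMain = push.branch === "main";
  // "src/**" is treated here as "any file somewhere under src/".
  const touchesSrc = push.changedFiles.some((file) => file.startsWith("src/"));
  return onMain && touchesSrc;
}
```

So a push to feature/delete-user does nothing on its own; the jobs only run once those changes land on main.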

So once you merge your feature branch into main, then it would run

That's the advisable way to do things. You don't really want to be deploying to aws every time. If you were working with other people, you'd set up a development environment and an action to deploy to it when branches get merged into the "develop" branch, and then your production environment would get deployed to when you merge the develop branch into main

That way, everyone works on their changes individually on a local environment, then pushes a branch for others to review. When everyone agrees to merge it into develop, you do that. When you're ready to ship to production, you just need to merge develop into main.
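The branch-to-environment idea can be sketched like this. The stage names and helper names are my own assumptions, and in practice this mapping usually lives in the workflow file rather than in code; `--stage` is the flag both sst and serverless use to pick an environment.

```typescript
// Assumed environments for this sketch.
type Stage = "dev" | "production";

// develop deploys to the development environment, main to production;
// any other branch (feature branches) deploys nowhere.
function stageForBranch(branch: string): Stage | null {
  switch (branch) {
    case "develop":
      return "dev";
    case "main":
      return "production";
    default:
      return null;
  }
}

// Hypothetical: the command a pipeline step would run for a given branch.
function deployCommand(branch: string): string | null {
  const stage = stageForBranch(branch);
  return stage === null ? null : `npx sst deploy --stage ${stage}`;
}
```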

It's good practice to do this even on your solo projects. Do a self review on your feature branches. It builds a lot of skill and you can show it off to people during interviews etc. It goes a long way to have those habits and that manner of working, because any good development team will be using those practices. Your code isn't just what the code is, it's the infrastructure and working practices around how you write software.

Yeah, I understand that flow. My question was more about this: "If a feature updates three lambdas in a repository of 10, will the action update the three lambdas or all 10?", if I had to put it in one sentence hahaha

Talking to you has been really educational, so thank you so much for that, it gives me a lot of insight

As I said, I'm going to work on a repository to set up my lambdas and their deployment with SST and GitHub Actions. In that scenario, do I need to create my resources with SST as IaC? Or does SST not provide IaC, so it's better to create my stuff in the AWS console / Terraform / any other tool? Considering it's going to be a project that only uses API Gateway and Lambda (with Drizzle) on the backend, I was more inclined to just use something that lets me create those resources and then update them, so I guess SST is the candidate?

Hmm, I think it will still deploy the other ones due to how sst bundles them, but i'd have to check. If you don't materially change the other 7 lambdas I wouldn't worry about it.

I would recommend just leveraging SST to create the api gateway and lambdas. It should do all of what you need.

Where are you hosting the DB? RDS? I haven't used SST for much other than the api gateway, but i know it can configure other services.

As of right now I'm using Supabase to host my DB. I've found tutorials on connecting to Postgres, RDS, or Aurora but haven't done much with them. I assume that instead of creating my resources in a VPC/RDS with SST, I can just link it to my Supabase DB and everything should work fine

I'm happy to help! It's nice to help someone out that is genuinely interested in learning and making something. Oftentimes people just ask questions for the answer and don't care too much about figuring out how to get to the answer themselves, so when I get the opportunity to help someone that cares it makes it really rewarding!

Supabase should work fine for your purposes here!

I'm so glad to hear that, really! As I said before, it has given me a lot of insight and it really pointed me towards what I want to achieve with my project, so many thanks for your time and patience explaining, it's super clear

Now comes the practical part, so I'll play around till it works, and if it doesn't somewhere along the road I hope I can ask again for a bit of insight about whatever comes up

:popcat:

Best of luck! If you run into any walls drop another question and ping me so I see!

I managed to make it work with sst and everything! yikes

Besides that, I was thinking about something and wanted to share the question with you in case you could help me with an answer. It's related to the way this flow works in real scenarios at tech companies

What would the real workflow be at a tech company with senior developers, DevOps, and people working on cloud infrastructure, in terms of deployment and development of lambda functions / API Gateway? I'm a junior and I've been working with AWS for a while, but at my job there isn't really a senior I can ask what the real-world flow should look like, so on one project we ended up creating all the resources by hand in the AWS console and modifying the lambdas' source code in the console itself (a really bad practice, as far as I know) D:

For now I understand the way to do it is managing the lambdas in a repository, testing them on localhost, and then deploying them with CI/CD and GitHub Actions. But is it strictly necessary to use a framework in particular to do so, like SST or Serverless Framework? Or do we just create the resources with Terraform/CDK and then manage the lambdas' source code on GitHub, deploying it with GitHub Actions in a custom pipeline and no framework?

It might be a little redundant considering everything we've been discussing here, but it's a way to wrap up the discussion in my mind and bring some clarity

Yea almost no one does this

The modifying it on their console part

yeah, i understand its like the worst way of doing things hahaha

the project where we did that was sadly like the guinea pig

I have a friend that tests locally and uses Terraform and Jenkins to deploy

So I wrote out a flow to check whether I got the idea correctly:

1. One repository to store my infra (Lambdas, API Gateway, or any resource that was created with my IaC tool)

2. Ideally another repository to manage my lambda source code? (Or can it be done in a specific folder inside the infrastructure repo? Could that bring some pipeline problems on push?)

3. I can test my lambdas locally, generating them with serverless or something else, or try pushing to develop and testing inside AWS

4. Whenever I change a lambda, I create a branch for its feature, and whenever it's pushed to dev/main it runs a pipeline to update the resource on AWS

5. Whenever I create a lambda, on the other hand, I create a branch for its feature in the infrastructure repo, push it to create the resource, and THEN I need to repeat step 4 for its source code?

That would work, yeah. But i personally would keep them all in a single monorepo

Yeah, that comes down to preference, I'd also like to do that inside a single repo

I modified the list, as this version represents my doubts better:

1. One repository to store my infra (Lambdas, API Gateway, or any resource that was created with my IaC tool)

2. Ideally another repository to manage my lambda source code? (Or can it be done in a specific folder inside the infrastructure repo? Could that bring some pipeline problems on push?)

3. I can test my lambdas locally, generating them with serverless or something else, or try pushing to develop and testing inside AWS

4. Whenever I change a lambda, I create a branch for its feature, and whenever it's pushed to dev/main it runs a pipeline to update the resource on AWS -> Does the pipeline that deploys the changes use the IaC tool I used (Terraform/CDK/SST), or is it a "vanilla" pipeline that just zips and uploads everything in, say, src/*?

5. Whenever I create a lambda, on the other hand, I create a branch for its feature in the infrastructure repo, push it to create the resource, and THEN I need to repeat step 4 for its source code? -> Does the pipeline that creates the resources use the IaC tool I used (Terraform/CDK/SST)?

Nah keep your infra and source in the same repo, it’s like half the reason. Plus you want to keep them in sync

I’ve shared code between my cdk infra and my lambdas before

I prefer strictly using cdk over Sst, but I can see why Sst makes things simpler. Defining everything in typescript just works better in the long run imo. Right now at work we use a mixture of them and I don’t really like it. The types for api gateway on cdk are pretty mid but you could write a higher level construct to make it easier

Ahh, I understand, thanks for your answer! So in the end the CI/CD GitHub Action depends on which tool you're using to generate your infra? Say I'm using Terraform, then the pipeline will use Terraform for creating/updating lambdas (in my case), and if I'm using CDK or SST it would use that?

If you’re using sst you don’t need terraform at all. You just call sst deploy and it will deploy to aws, creating or deleting resources if necessary.

It basically just creates a cloudformation changeset

That also answers my question, thanks!

I was referring to the fact that the pipeline that deploys changes is directly linked to the tool I used to create those resources

In my case, on one project I have lambdas that weren't created by any IaC tool but in the AWS console, so I'm thinking of a way to manage them in a repository without needing to re-create them using IaC

But for a case where I start all over again, IaC + deploys from GitHub Actions is something I finally understand

You should just recreate them tbh, there are ways to import them by arn but it’s unnecessary complexity.

Deploying to aws especially once you’re using a tool like sst is essentially free

If you want to treat those lambdas like a 3rd party dependency to your sst app then you can go about it that way, but as far as sst is concerned updating/creating are the same thing. It’s got a set of resources it controls (in a cloudformation stack)