Most optimized download system

Hi, I need to download a file that is split into chunks (between 100 and 400 MB each) from a server, and I want it to be as fast as possible. I'm a bit confused, though: there seem to be many different methods for downloading files, some of which can download several files at the same time. Do you have any advice on how to go about it?

The code I'm currently using:

DownloadChunk:


i would handle this quite differently.

first of all, use HttpClient instead of WebClient.

then, i would try making use of Parallel.For instead of the for loop, or just straight up starting 4 DownloadChunk tasks at once and using Task.WhenAll to await all 4 of them

so potentially something like this
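The snippet posted at this point is not preserved. A minimal sketch of the approach described (HttpClient plus Task.WhenAll) might look like the following. The names `ChunkDownloader`, `DownloadChunkAsync` and `DownloadAllAsync`, and the idea of passing matching arrays of URLs and file paths, are assumptions made for illustration, not the code from the thread.

```csharp
using System;
using System.IO;
using System.Linq;
using System.Net.Http;
using System.Threading.Tasks;

static class ChunkDownloader
{
    // One HttpClient shared by all downloads; creating one per request is discouraged.
    private static readonly HttpClient Client = new HttpClient();

    // Streams a single chunk to disk. ResponseHeadersRead makes GetAsync return as soon
    // as the headers arrive, so the body is not buffered in memory first.
    public static async Task DownloadChunkAsync(Uri source, string destinationPath)
    {
        using (HttpResponseMessage response =
            await Client.GetAsync(source, HttpCompletionOption.ResponseHeadersRead))
        {
            response.EnsureSuccessStatusCode();

            using (Stream body = await response.Content.ReadAsStreamAsync())
            using (var file = new FileStream(destinationPath, FileMode.Create,
                FileAccess.Write, FileShare.None, 81920, useAsync: true))
            {
                await body.CopyToAsync(file);
            }
        }
    }

    // Starts one download per (source, destination) pair and completes when all have finished.
    // Hypothetical helper: sources[i] is written to destinationPaths[i].
    public static Task DownloadAllAsync(Uri[] sources, string[] destinationPaths)
    {
        Task[] downloads = sources
            .Select((source, i) => DownloadChunkAsync(source, destinationPaths[i]))
            .ToArray();
        return Task.WhenAll(downloads);
    }
}
```

This version starts every chunk at once. With many chunks it may be worth capping the number of simultaneous requests, which this sketch does not do.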

Thank you very much, it may be because I am in .net 4.7 but ReadAsync and WriteAsync also require offset and count arguments

sure, you can use WriteAsync(buffer, 0, bytesRead)

Thanks
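For context, here is a sketch of how the offset-and-count overloads fit into a manual copy loop on .NET Framework 4.7. The `StreamCopy` class and the 81920-byte default are assumptions for illustration; the original loop from the thread is not preserved.

```csharp
using System.IO;
using System.Threading.Tasks;

static class StreamCopy
{
    // Copies source to destination through a fixed-size buffer and returns the number of bytes copied.
    public static async Task<long> CopyAsync(Stream source, Stream destination, int bufferSize = 81920)
    {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        int bytesRead;

        // ReadAsync returns 0 once the end of the stream is reached.
        while ((bytesRead = await source.ReadAsync(buffer, 0, buffer.Length)) > 0)
        {
            // Write only the bytes that were actually read, not the whole buffer.
            await destination.WriteAsync(buffer, 0, bytesRead);
            total += bytesRead;
        }

        return total;
    }
}
```

Passing `bytesRead` rather than `buffer.Length` to WriteAsync matters because a read can return fewer bytes than the buffer holds.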

After testing, it works really well on small files, but for larger ones it throws a System.OutOfMemoryException. Do you know how to fix this?

DownloadChunk code after adjustments:

without knowing what causes the exception, no idea.

also you should not remove using on the variables which had it

those need to be disposed

why are you on .net 4.7 anyway if i may ask?

Because it is one of the last versions included with Windows. If it improves the program, I can always update.

It is only available from .net 8

this has been a ridiculous reason for years. you can embed the .net runtime in your app.

not correct, i'm not sure where you read this

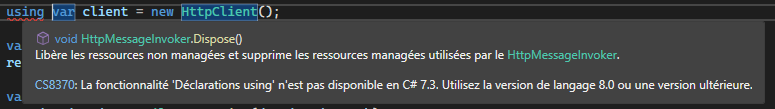

Directly in Visual Studio (sorry, it's in French). It says: The "using declarations" feature is not available in C# 7.3. Use language version 8.0 or higher. (That's not my version, so I don't understand why it says that.)

language version, not .net version
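To make the distinction concrete, the sketch below shows both forms side by side. The `UsingForms` class and its methods are hypothetical names used only for this comparison. The first form compiles under C# 7.3; the second is the "using declaration" the error message refers to and needs C# 8.0 or later, whichever .NET version the project targets.

```csharp
using System.IO;

static class UsingForms
{
    // Full using statement: available in every C# version, including 7.3.
    public static long LengthWithStatement(string path)
    {
        using (FileStream file = File.OpenRead(path))
        {
            return file.Length;
        } // file is disposed when this block exits
    }

    // Using declaration: requires C# 8.0 or later.
    public static long LengthWithDeclaration(string path)
    {
        using FileStream file = File.OpenRead(path);
        return file.Length;
    } // file is disposed when the method returns
}
```

Both methods dispose the stream; only the syntax and the scope of the variable differ.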

Will I have problems if I change now?

you can also use full using statements. you were doing it here.

or bump your language version to latest

or 8

whatever

How can I do it?

migrating from .net framework to .net might be a small hassle, sure. if you have version control set up, it's obviously not going to be an issue.

there might be a setting for it in the vs project properties, i don't know

i don't use vs

i edit project files by hand. which is not gonna be a good idea for netfx project files

"version control"? Like git ?

like git

if something messes up while migrating (i want to say vs has a button for that too), you can just revert

Which version of .net do you recommend?

.net 9, the latest

@ero The migration is complete, so what would be the best method to download now?

what do you need to download?

Chunks

oh, you don't really need to change anything?

make sure you dispose of all the things that are disposable and you're good, really

you could maybe reduce allocations a bit more by renting an array

But you told me to update my .net, wasn't it to improve the download?

not really, no

i asked why you're on .net 4.7. i didn't tell you to update

Wow

I'm really stupid

Well, it will always be useful

What array?

the buffer

Increase DefaultCopyBufferSize ?

not really? you can keep it as big or small as you want, really. the number i chose is just the default Stream uses in general

but you can probably do
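The snippet that followed is not preserved. A minimal sketch of renting the buffer from the shared array pool, assuming modern .NET (the Memory-based stream overloads are not available on .NET Framework 4.7), could look like this. `PooledCopy` and the 81920-byte default are assumptions for illustration.

```csharp
using System;
using System.Buffers;
using System.IO;
using System.Threading.Tasks;

static class PooledCopy
{
    // Copies source to destination using a buffer rented from the shared pool
    // instead of allocating a new array for every chunk.
    public static async Task<long> CopyAsync(Stream source, Stream destination, int bufferSize = 81920)
    {
        // Rent may hand back an array that is larger than requested.
        byte[] buffer = ArrayPool<byte>.Shared.Rent(bufferSize);
        try
        {
            long total = 0;
            int bytesRead;
            while ((bytesRead = await source.ReadAsync(buffer.AsMemory())) > 0)
            {
                // Write only the portion of the buffer that was filled by the last read.
                await destination.WriteAsync(buffer.AsMemory(0, bytesRead));
                total += bytesRead;
            }
            return total;
        }
        finally
        {
            // Always give the array back, even if the copy throws.
            ArrayPool<byte>.Shared.Return(buffer);
        }
    }
}
```

Renting mainly saves allocations when many chunks are downloaded. It is unlikely to change raw download speed much, which is usually limited by the network.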

Thank you, but it's like last time: if the chunks are too big, it reports exceptions and does not download (I added the using statements correctly):

without the exception message or stack trace, this is not useful

it doesn't crash my program so I don't get a message and when I display call stack there is nothing in it

What will this number change on the download?

it definitely should, but i don't know the rest of your code.

Isn't there a log file or something similar that would indicate that?

if you don't log anything, where should such a log file be created?
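One possible way to capture the missing message and stack trace is to wrap the download in a try/catch and append the exception to a file. This is only a sketch: `DownloadLogging`, `RunLoggedAsync` and the log path are hypothetical, and it assumes the download is exposed as a Task that can be awaited. An exception inside a Task that is never awaited does not crash the program, which may be why nothing was visible.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

static class DownloadLogging
{
    // Awaits the given work. On failure, appends the exception to logPath and returns false.
    public static async Task<bool> RunLoggedAsync(Func<Task> work, string logPath)
    {
        try
        {
            await work();
            return true;
        }
        catch (Exception ex)
        {
            // ex.ToString() includes the exception type, message, and stack trace.
            File.AppendAllText(logPath, DateTime.Now.ToString("o") + " " + ex + Environment.NewLine);
            return false;
        }
    }
}
```

Usage would be along the lines of `await DownloadLogging.RunLoggedAsync(() => DownloadChunk(...), "download.log")`, with the arguments adapted to the actual method.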

@ero It's okay, I managed to solve the problem, thank you very much

And I was wondering why don't you make requests to download the chunk in parallel?

Hm?

This code downloads end by end, wouldn't it be possible to ensure that it downloads several ends at the same time?

What do you mean "end by end"?

bit by bit

Well that's what the default buffer size is for

The buffer is simply large enough to fit the whole chunk

I assume anyway

Thanks, I don't know much about it 😅

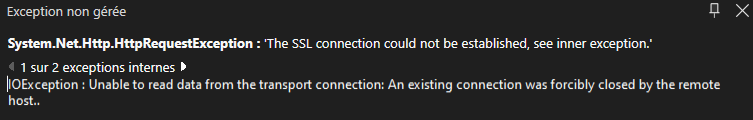

And sometimes I get this error, is it coming from the server?