❔ Need help to optimize this simulation

For context, I'm trying to build a simulation where you start with X "nodes" and every "round", one node is replaced by one of X predetermined possibilities (

.NextNodes, which may be empty) and so on until Xth round is reached.

For example, we could be working with integers and on each round one of the integers we have gets +1 or +2 so if we start with 1 and 3 and play 2 rounds some of the results could be

- 3 (1 + 1 + 1) and 3

- 4 (1 + 1 + 2) and 3

- 3 (1 + 2) and 4 (3 + 1)

...

The aim of the simulation is to compute every possible outcome and so far it works fine with little amount of data or start nodes but if I scale up to a point where I have a few million possibilities I start feeling the slowness and if I go even further it doesn't even finish.

I'm pretty sure the most important thing to do is instantiating far less lists, but I can't figure out how. I also thought about creating one task per initial node and that task would only focus on cases where its initial node is replaced in the first round to parallelize but that wouldn't fix the memory eaten by those many lists.

Here's my actual method :

108 Replies

so if we start with 1 and 3how the hell does that translate into

3(1 + 1 + 1) and 3

well round 1 the 1 gets +1 and round 2 the 2 (that comes from 1 + 1) gets + 1, in the end we've got 3 and 3

okay

what's this for?

It's just in general, I've ended up doing similar algos several times and always met that issue where it works but only with little amount of data

Most of the time bots on CodinGame games

like

point being

Gimme a sec

is this a homework assignment of some kind?

Nah no homework involved

School is far away now 🙂

well, you're definitely right that instantiating fewer lists will help

this is also a prime case for parallelization, as your work is all CPU-bound

As I said I think I should focus on eating less RAM before making it more complicated because when we reach several million possible outcomes it looks like it just stops at some point (around 1.5-2Gb)

you can accomplish both by focusing on building a stateless solve method

for solving a single case

what does a "solution" look like, here?

like

you want to compute how MANY possible outputs there are for a particular set of input nodes?

or you want to compute WHAT all the possibilities are, and list them?

List them

list only the unique ones?

like, there's multiple ways a simulation could play out to produce the same outputs, right?

like a

[1,1] could become a [2,2] by having a +1 on each one, but two different ways

it could go [1,1] => [1,2] => [2,2] or [1,1] => [2,1] => [2,2]

are those different outputs?In the end both lists will be the same (I mean

.SequenceEqual would return true) but I'd keep both in the results yesokay, well

do you WANT those in the results?

if you don't, that's a potential improvement right there

Yes I want those in the results

okay

Obviously not keeping them would remove some lists 🙂

there's still a potential for improvement

cause you can store the results as....

I.E. don't store duplicates, store each possible outcome and a count of the number of times it occurred

so, after computing a PossibleOutcome that you already have, you just increment the counter, and then either let the PossibleOutcome get GC'd, or put it back in a pool to get reused

object/array pooling is the other big point here, I think, for reducing memory usage

beyond that, I'd need to see code

This would be an improvement in theory but since (at least with my current method) all the results are known when you reach depth = 0 and I'd still have the plenty lists issue

uhh..

kinda

but it would be an improvement

like I said

the other obvious improvement to be made is to stop using lists

yeah that's the one I'm looking for

all the lists are the same size, which you know ahead-of-time, use arrays instead

Right, that'll just make me spam arrays instead of lists but at least that's "better" spam ^^

this, but unironically

lists are heavier than arrays

A) unless you're constructing your lists with the

capacity parameter, the list is allocating multiple arrays over its lifetime, and the end result is a list that is extremely lilely to be over-allocated, I.E. allocated larger than what you need

B) each list instance includes more allocations for state variables needed to manage the internal arrayYeah ik the differences or at least the most common ones

Just a bad habit of using lists everywhere

it's not a bad habit, not really

develop for straightforward functionality first

then optimize

generally

Yeah but I mean I don't even think about the "optimize" part because most of the time it doesn't matter in the environments I work on

which is normal and fine

So here's the array version

next big optimization: get rid of that recursion

And go to iterative form instead?

yup

Depth isn't really high in general

true, but it still lets you control memory a little better

and it's going to promote the next optimization, to parallelize this

Arrays made it ~25% faster (may not be accurate at all since I only put a break point on the method call and looked at elapsed time after pressing F10 instead of a proper benchmark)

Iterative looks like that (and is more or less same speed)

there's no way you shouldbbe allocating new lists anywhere in there

also, why do you have a list as an input?

You talking about

currentList right?yes

Hmmm most likely because the recursive had this 🤔 Removing it without just having an other local variable list doesn't seem as easy as I thought it would

okay, I'm actually at my computer now

so

what is the actual goal here?

like, what are the true inputs?

you want to find all ways that this simulation can play out, right?

what are the input parameters for this simulation?

N number of "nodes"

what else?

the number of rounds?

is that the same as the number of nodes?

how about the amount added to a node during a around?

your example was either +1 or +2

is that all?

Understanding how to fix my various simulation algorithms being bad when the number of possible outcomes gets big (millions is where I start feeling the slowness)

No, they aren't related at all

Well it's not directly part of the parameters but ahead of the simulation each nodes knows what it can turn into (cf

.NextNodes)

The simulation method is agnostic, it only knows through .NextNodes what the node may turn into but it isnt aware if (keeping the example) the current node is 1 or 73 or today's date or whatever and if the possible transofrmations are +1, ×19, turn into a random string that contains 37 characters or whateverI meant with regard to the simulation

what are the inputs

Well depth and the initial nodes 🤔 Not sure if I understand your question 😅

okay

what is "depth"?

I mean the number of "rounds"

k

and how do we define node transformations?

You mean how do we compute

.NextNodes?sure

It's computed before, doesn't take long enough to be worth optimizing

so

point being

it's arbitrary

you want to be able to run this simulation for any arbitrary type of node that you want to define

If you mean that it doesn't respect a pattern or whatever, yes

you gave an example where it's integers

you could, say, have each node be a chess board

or pieces on a chess board

And the

.NextNdoes for a board be the board after every possible move yeahk

cracks knuckles

also, you're only interested in the possible final state of each simulation

not how you get there

yes and no, given I want to list all the possible outcomes (possibly dealing with the duplicate outcomes with a dictionnary as you suggested yesterday) I'm not sure I can get rid of intermediate states completely

....

so, yes

I want to list all the possible outcomesyou don't want to list all possible paths to all possible outcomes

Yeah I don't care about the paths themselves, as long as I know I have X paths leading to given outcome I'm fine

k

is

.NextNodes deterministic?

from the current node?You mean like if I have two nodes that are 1 (keeping the integer example), will both have the same

.NextNodes?yes

then yes

crap

okay

moment of truth

nope

alright, I think we're done

that seems to be correct for the "+1 or +2" simulation

for depth 3

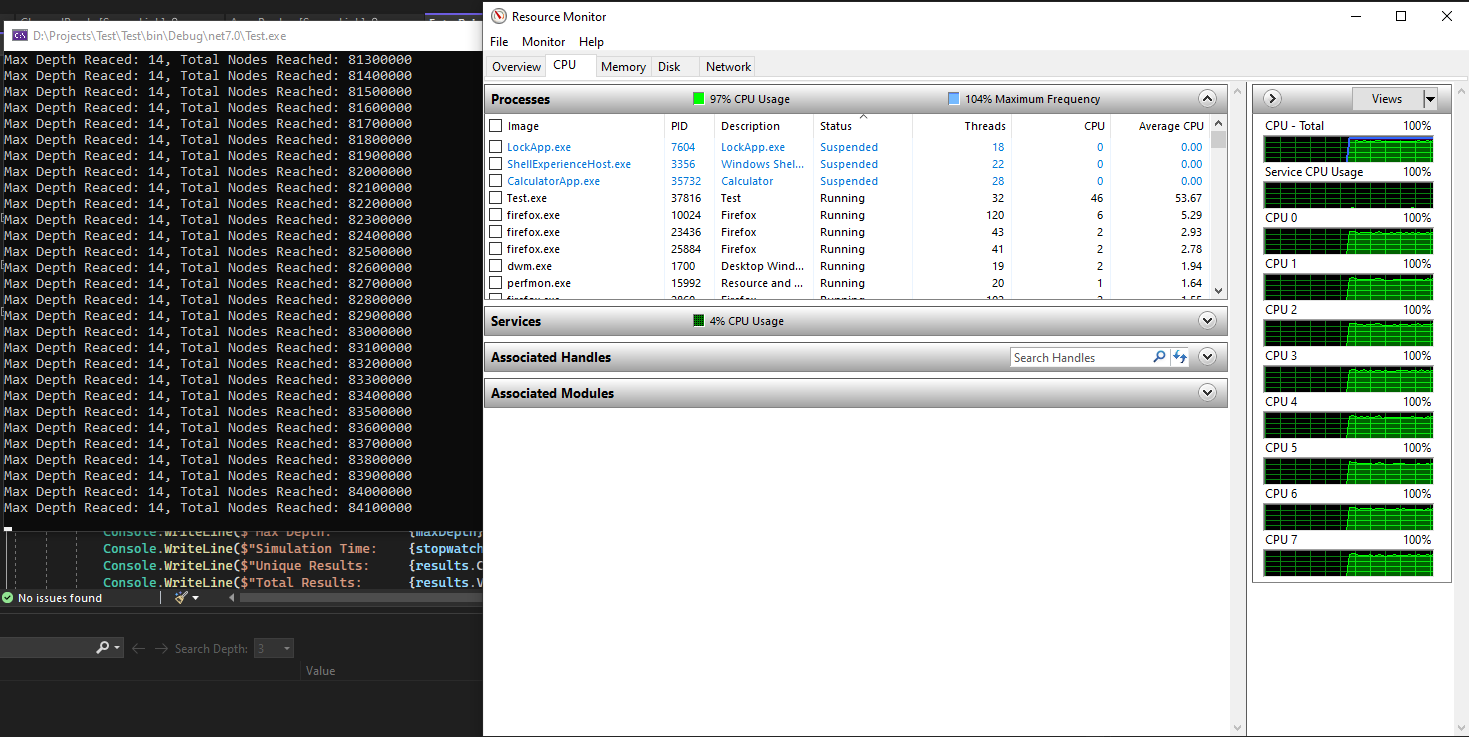

good CPU utilization

1 billion nodes processed...

loooool, GC collections are like a 5 second affair

yeah, so this particular implementation is HEAVILY memory-bound

CPU utilization drops below 50% if I put a limit on how many node sets can be queued up for processing, at a time

that is, this particular implementation of a simulation

where calculating next nodes is just a couple of int additions

so long as the average node number of forward nodes that any node has is greater than one, memory use will grow exponentially

might not actually be worth all the parallelism, then

I'll have to run the next test with memory throttling, both with and without parallelism

much better with release build and no debugger

okay, that is noooooooooooooot finishing, with the memory throttling in place

might've even deadlocked, I dunno

yeah, parallelism is doing more harm than good here

Hmmm you reached a higher number of results than I do, I tend to be stuck (because of the memory issues) at around 50m results (should probably try in release and outside of vs to see if it helps going furether)

the memory is definitely an issue here

I max out my 32GB

Oh ok that explains 😄

I think I know how to approach it better, but I also think that approach will eliminate any possibility of parallelism

Those damn lists 😬

which I was rather going for in the first place

no lists used

all arrays

with pooling

oh my

blazebin seems to be busted on Firefox

Well l guess we can start from here and see afterwards 🤷♂️

BlazeBin - wojbxyvhasvo

A tool for sharing your source code with the world!

Ok that looks too complicated to look at on phone 😅 Brb when my pc is ready

heh

doesn't help it's all shoved into one file

Yeah but on pc I can copy paste on different files and make it easier to browse 😁

If I understand correctly, your version computes transformation results on the fly and always applies the same transformations (+1 or +2)?

that was the example you proposed

but yes, that's definitely the way you want to do it

A) you avoid needing an allocation to store the next nodes for each node, because it gets deferred to the simulator

you can generate the nodes directly into the simulator's already-allocated queue for processing

B) you don't und up running the next-nodes calculation an extra billion times, for all the max-depth nodes that won't use them

I'm not sure if I understand this, I mean using my initial implementation you don't compute next nodes billion times (well if we use the integer example and keep it unbounded, you have to process every integer so still a lot), if say there are million or even billion possible outcomes but only X possible nodes, you'll just compute next nodes X times. While if your implementation meets (back to the integer example) a 3 two times, it'll compute the 3's next nodes twice right?

I'm referring to your implementation of .NextNodes

which, admittedly, I haven't look at, but you said it's pre-calculated

if the final result of 15 rounds has 1 billion result nodes, then you are pre-calculating the .NextNodes property of each of those 1 billion nodes, even though you're not going to navigate those .NextNodes valued

is that not accurate?

I don't think so

As I said, the integers example is not really good for this because they can go as far as you like but say we're sure we'll never haves node that go above 10 and below 0

Pre-calculation will determine what the possible NextNodes are for 0, 1, 2, 3... up to but 10. Say every node's NextNodes are either the node with +1 or the node with +2 (to keep the same transformations as before) and if that'd make you go above 10, start from 0 (so 10's NextNodes are the nodes that contain 0 and 1)

Here you'd only compute .NextNodes 11 times

so, .NextNodes is pre-calculated, and also pulled from a shared cache?

Hmmm yeah

mm-kay

Not really related but I've tried a Stack<T> based approach to try fixing memory issues by not computing every outcome simultaneously but it's not completely working atm, what do you think?

a

Stack<T> would definitely be appropriate for tracking iteration progressYeah that's kind of what I do atm, even if I think I do it pretty bad cause the Stack actually stores the index of the transformed node, the index of the next nodes and the node array representing the current state

well, that runs significantly better, but it's also wrong...

there we go

betcha I can do better

You used Stack<T>? Or just improved you previous alg?

rewrote it a fair bit

it's now essentially allocation-free

whole program peaked at 11MB

BlazeBin - sxuwtfmplhcm

A tool for sharing your source code with the world!

Played around with your impl a little, it crashes when it meets a dead-end (node with no next nodes)

On the other hand, cool thing is it can be used with pre-calculated .NextNodes (even if I guess your caching system probably becomes unneeded since the cache is already in the pre-calc) pretty easily since I just have to implement like that

yeah

looks like adding

Before the line it crashes on fixes it, but given I don't have a full understanding of your code yet, not sure if it's enough/doesn't break other stuff

Was this issue resolved? If so, run

/close - otherwise I will mark this as stale and this post will be archived until there is new activity.Looks like it's still bugged when actual depth is lower than

maxDepth, at that point it returns 0 resultsso, the algorithm goes straight from retrieving the set of next nodes, into doing the replacement for the first one, and checking it for either being a solution, or being a new depth to explore

you just need a bounds check there

if the set of next nodes is empty, skip to the next loop iteration

I.E. wrap the whole logic for the nextNodes sub-loop in a bounds check

although, doing that breaks the goto

maybe we can get rid of the goto actually

meh, even simpler

more gotos!

Was this issue resolved? If so, run

/close - otherwise I will mark this as stale and this post will be archived until there is new activity.Don't see how it fixes the actual depth lower than max depth problem given results are still built only when maxDepth is reached 🤔

ahhhhh, another good point

Normally you would probably apply Alpha–beta pruning to this problem but because you don't really have a goal you can't really do that.

Still a fun problem though.

yeah, I had a problem a while back kinda like this that I solved REALLY efficiently, with parallelization and everything

but the solution there only worked because the whole problem was much more, uh.... predictable

like, I can know ahead of time exactly how many possible solutions there are

so, what I was able to do was literally for loop over all possible solutions from 0 to N

and mathematically derive which possible solution is to be tested from that index value

the early-termination angle for this problem makes it much tougher

I mean, I could definitely approach this problem the same way, but it would be absurd

it would be "loop over all possible permutations of nodes that could be a final result, and then walk backwards to see if it's reachable from the starting point, and how many times"

you'd end up doing WAY more work

Yeah, this "simulation" is kinda a worst case scenario lol.

By "WAY more work", do you mean we'd back to the point where I was before asking? aka spamming lists so much that I run out of memory?

just more redundant work

recalculating and re-traversing chunks of the graph that you've already done before

Yeah or maybe try storing potential results for depth X and then trying if depth X +1 exists until either depth X + 1 doesn't exist or maxDepth is reached 🤔 Though that sounds like the starting approach that was traversing a whole depth before going to the next one

Was this issue resolved? If so, run

/close - otherwise I will mark this as stale and this post will be archived until there is new activity.Kind of workarounded it by restarting the sim with depth -1 when it returns no results

Was this issue resolved? If so, run

/close - otherwise I will mark this as stale and this post will be archived until there is new activity.