Garbage Collection is running, but it’s running extremely slowly

Hey everyone! Quick question: I’m still struggling with garbage collection on my server. Every so often, RAM usage on my server randomly drops by several GB, which is great! But 9 times out of 10, that only happens after the server has reached a completely untenable amount of RAM usage.

I made a post recently about RAM issues, and decided to fork over the extra $5 to increase the RAM on my server by 2 GB, for a total of 8 GB. I’m also limiting the server to 7 GB of usage overall to leave room for overhead.

However, RAM usage still runs extremely high until GC kicks in. I’ve also noticed that running a spark heap dump seems to clear out a ton of RAM.

Am I going nuts, or have I done something wrong in my startup flag configuration?

Flags are as follows (side note: my host won’t let me change Xms):


Thanks for asking your question!

Make sure to provide as much helpful information as possible, such as logs, what you tried, and what your exact issue is.

Make sure to mark the thread as solved once the issue is solved!


I would rather have lag spikes caused by GC than OOM crashes caused by, well, no GC. Ideally, though, I’d have neither.

Memory usage on the panel isn’t fully accurate: it shows reserved RAM, not actual usage. spark or Essentials can give you a more accurate number.
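The gap described above is roughly the JVM’s *committed* heap (memory it has taken from the OS and keeps reserved) versus its *used* heap (memory occupied by objects). The class below is a minimal sketch, not code from spark or Essentials, that prints both using only the standard `Runtime` and `MemoryMXBean` APIs. It assumes a panel like Pterodactyl reports something closer to the whole process’s reserved memory; that is taken from this thread, not verified against the panel. Run as a standalone program it only describes its own JVM; to see the server’s numbers, the same calls would have to run inside the server process (which is what in-game tools do).

```java
import java.lang.management.ManagementFactory;

// Minimal sketch: compare heap memory the JVM has committed (reserved from
// the OS) with heap memory actually occupied by objects.
class MemoryReport {
    static final long MB = 1024L * 1024L;

    /** Heap bytes occupied by objects, including garbage not yet collected. */
    static long usedHeapBytes() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    /** Heap bytes the JVM has committed from the OS, whether used or not. */
    static long committedHeapBytes() {
        return Runtime.getRuntime().totalMemory();
    }

    /** Upper bound the heap may grow to (roughly the -Xmx value). */
    static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    public static void main(String[] args) {
        System.out.printf("heap used:      %d MB%n", usedHeapBytes() / MB);
        System.out.printf("heap committed: %d MB%n", committedHeapBytes() / MB);
        System.out.printf("heap max:       %d MB%n", maxHeapBytes() / MB);
        // Non-heap memory (metaspace, code cache, ...) also adds to the process
        // size an external panel sees, but is not part of the heap numbers above.
        long nonHeap = ManagementFactory.getMemoryMXBean()
                .getNonHeapMemoryUsage().getCommitted();
        System.out.printf("non-heap committed: %d MB%n", nonHeap / MB);
    }
}
```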

Okay, but what about the huge drop in RAM usage that the panel also shows? What’s going on there, and why does it coincide with running /spark heapdump? I just want to learn what I can to avoid OOM crashes 🙂

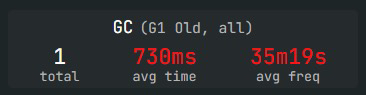

If your server crashes due to OOM, the startup flags are usually the cause. Otherwise, I wouldn’t worry too much: while it’s not great to see old-gen GC, the lag spike isn’t even a second long, and it only happens every 35 minutes.
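To see how long each collection (including old-generation ones) actually takes, the JVM can report every GC as it finishes. The sketch below uses HotSpot’s `GarbageCollectionNotificationInfo` notifications; the class name `GcLogger` is hypothetical, and the listener only observes the JVM it is installed in, so for a server it would have to run in-process (for example from a plugin). For concurrent collectors such as ZGC, the reported duration may cover a whole concurrent cycle rather than the pause the server actually feels.

```java
import com.sun.management.GarbageCollectionNotificationInfo;
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import javax.management.NotificationEmitter;
import javax.management.openmbean.CompositeData;

// Minimal sketch: print every collection the JVM reports, with its name,
// action, cause and duration, so long old-generation collections stand out.
class GcLogger {
    /** Registers a listener on every collector; counts down `seen` on each GC. */
    static void install(CountDownLatch seen) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            if (!(gc instanceof NotificationEmitter)) {
                continue; // this collector does not publish notifications
            }
            ((NotificationEmitter) gc).addNotificationListener((notification, handback) -> {
                if (!GarbageCollectionNotificationInfo.GARBAGE_COLLECTION_NOTIFICATION
                        .equals(notification.getType())) {
                    return;
                }
                GarbageCollectionNotificationInfo info = GarbageCollectionNotificationInfo
                        .from((CompositeData) notification.getUserData());
                System.out.printf("%s (%s, cause: %s) took %d ms%n",
                        info.getGcName(), info.getGcAction(), info.getGcCause(),
                        info.getGcInfo().getDuration());
                seen.countDown();
            }, null, null);
        }
    }

    public static void main(String[] args) throws InterruptedException {
        CountDownLatch seen = new CountDownLatch(1);
        install(seen);
        System.gc(); // explicit request; ignored if -XX:+DisableExplicitGC is set
        // Notifications are delivered asynchronously, so wait briefly for one.
        boolean observed = seen.await(5, TimeUnit.SECONDS);
        System.out.println(observed ? "collection observed" : "no collection reported");
    }
}
```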

Sure, makes sense. Any idea about the question in my previous message?

Well, GC might clear out some of the reserved RAM, which is why the drop coincides.

So, /spark heapdump also triggers garbage collection?

Yes, that’s kind of what it has to do, since it dumps everything in memory.
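A more precise reason: in HotSpot, a heap dump restricted to *live* (reachable) objects makes the JVM run a full collection before writing the file, so unreachable objects are freed as a side effect. The sketch below shows this with the standard `HotSpotDiagnosticMXBean.dumpHeap` call; that spark requests a live-only dump is an assumption that would explain the drop, not something confirmed here. The class name and the 64 MB of throwaway arrays are hypothetical, chosen only to create some garbage.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Minimal sketch: a live-only heap dump collects unreachable objects first,
// so the used heap usually drops right after the dump.
class HeapDumpDemo {
    static long usedHeapBytes() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    /** Writes a live-only heap dump into a fresh temp directory; returns its path. */
    static Path dumpLiveHeap() throws IOException {
        HotSpotDiagnosticMXBean diag =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        // dumpHeap will not overwrite an existing file and expects a ".hprof" suffix.
        Path out = Files.createTempDirectory("heapdump-demo").resolve("demo.hprof");
        diag.dumpHeap(out.toString(), true); // true = dump only reachable objects
        return out;
    }

    public static void main(String[] args) throws IOException {
        // Allocate ~64 MB of arrays, then drop every reference to them.
        List<byte[]> junk = new ArrayList<>();
        for (int i = 0; i < 64; i++) {
            junk.add(new byte[1024 * 1024]);
        }
        junk = null; // the arrays are now garbage, but not yet collected

        long before = usedHeapBytes();
        Path dump = dumpLiveHeap();
        long after = usedHeapBytes();
        System.out.printf("used before dump: %d MB, after: %d MB%n", before >> 20, after >> 20);

        // Dump files can be as large as the live heap, so clean up.
        Files.deleteIfExists(dump);
        Files.deleteIfExists(dump.getParent());
    }
}
```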

Ahh, interesting. Okay, I’ll keep an eye on my server, but if Pterodactyl is going to report reserved memory instead of actual usage, I’ll just rely on Essentials’ /mem or Spark’s /spark healthreport. Thanks!

Indeed, those two methods show actual usage ;P

> If your server crashes due to OOM, the startup flags are usually the cause.

Do keep this in mind, though, so if it happens, feel free to come back.

Thank you so much! I’m hoping it doesn’t crash anymore lol

np!

As an update to this: I ended up ditching Aikar’s flags. I tried my best, but I just couldn’t justify using them with all the crashing. Instead, I switched to the ZGC flags recommended by my panel (it has presets for Aikar’s, ZGC, and default). My startup flags are now:

Yes, this runs garbage collection every 30 seconds. It was originally running every 5 seconds, which was noticeable in CPU usage but not in TPS: I was still getting a solid 20.0 TPS according to Spark, but I didn’t like seeing what’s in the attached screenshot every 5 seconds. Either way, it seems great now. I’ll keep an eye on it.
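One way to check how often collections really happen, independent of the panel graph, is to sample each collector’s cumulative counter twice and compare. The sketch below does that with `GarbageCollectorMXBean.getCollectionCount()`. A fixed periodic cycle with ZGC is typically configured with HotSpot’s `-XX:ZCollectionInterval=<seconds>`, but since the flags aren’t shown here, whether the panel’s preset uses it is only a guess. The class name `GcRate` and the 60-second default window are hypothetical, and like the other sketches it measures only the JVM it runs in.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.HashMap;
import java.util.Map;

// Minimal sketch: sample every collector's cumulative collection count twice
// and report how many collections happened in between.
class GcRate {
    /** Current collection count per collector name (collectors reporting -1 are skipped). */
    static Map<String, Long> snapshot() {
        Map<String, Long> counts = new HashMap<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long n = gc.getCollectionCount();
            if (n >= 0) { // -1 means the count is undefined for this collector
                counts.put(gc.getName(), n);
            }
        }
        return counts;
    }

    /** Collections per collector between two snapshots. */
    static Map<String, Long> delta(Map<String, Long> before, Map<String, Long> after) {
        Map<String, Long> diff = new HashMap<>();
        for (Map.Entry<String, Long> e : after.entrySet()) {
            diff.put(e.getKey(), e.getValue() - before.getOrDefault(e.getKey(), 0L));
        }
        return diff;
    }

    public static void main(String[] args) throws InterruptedException {
        // Hypothetical default window of 60 s; pass milliseconds as the first argument.
        long windowMs = args.length > 0 ? Long.parseLong(args[0]) : 60_000L;
        Map<String, Long> before = snapshot();
        Thread.sleep(windowMs);
        Map<String, Long> diff = delta(before, snapshot());
        diff.forEach((name, n) ->
                System.out.printf("%s: %d collections in %d s%n", name, n, windowMs / 1000));
    }
}
```

With a 30-second interval and an otherwise quiet server, a 60-second window would be expected to show about two cycles; allocation-driven collections would add to that.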