Won't tag missing images

I stopped all docker containers when 4k images were still missing.

After restarting, none are being analyzed, and pressing the missing button won't analyze the 4k missing images.

Which logs should I look at?

I also deleted the redis container and did docker up

Try to run tags for all images

that's what I did before stopping all containers. I wanted to avoid that because I have +10k photos and 6k took about 12h

(I'm using 2 CPU in the docker compose file because if not, my raspberry pi crashes)

Is there no way to delete all the saved tags so next time I stop the containers, it knows where it left off?

It should know unless all are tagged

Please check the server and microservices logs when you click the option to run missing tags

No logs appear

^when clicking missing

just the logs when it started

server

micro

something wrong with the redis container on your end

so it cannot queue the asset with missing tag

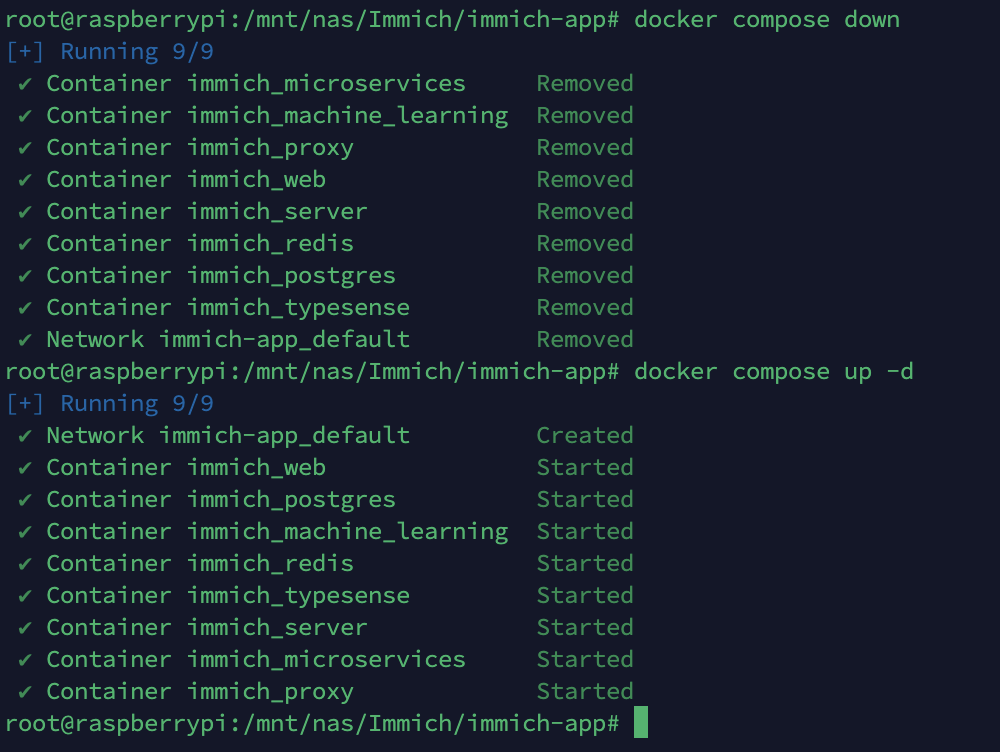

bring down all containers and remove all containers then bring them back up

I did that before, I'm going to do it again

Still not analyzing missing tags

Is the redis error still in the logs?

redis

server

micro

As long as you have that redis error jobs are not working

Can you post your docker compose file and env?

The only changes I've made have been to the docker compose ML

Does your system take a while to start up?

No, everything is pretty fast

Then why does immich-microservices fail to connect to redis?

If you just restart immich-microservices does it still have a connection error?

You know what

Based on this it looks like it took 10 minutes for reverse geocoding to initialize

I think that is two separate instances

Oh, wait. No, there is a 10 minute gap between reverse geocoding initializing (finished) and the redis error

The "Reverse Geocoding Initialized" message marked the end of the init process

Did it try to wait for something for 10 minutes before throwing those connection errors and then moving on with the startup sequence?

I was re-analyzing all images. In the images you can see how I restarted the redis container

so it was working, but only when I started analyzing all images

wrong container

Oh, it was working, and then you restarted redis, and then you got the logs in microservices for redis unreachable?

yes

What is the status now? On a stack restart does redis come up successfully and do you see redis connection errors in the microservices container?

no more logs are appearing ^ so that's all I get

You just restarted it or what?

restarted redis @ 7:44:35 (approx)

restarted micro @ 7:48:00

Looks like micro is still starting

But the next lines in the logs are probably going to be "unable to connect to redis"

You can run docker logs immich-microservices --follow btw
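For reference, a minimal sketch of watching the logs while clicking the missing button. It assumes the stack was started with Docker Compose from the directory holding docker-compose.yml, and that the services are named immich-server, immich-microservices and redis as they are called in this thread; adjust the names if your compose file differs.

```shell
# Run from the directory that contains docker-compose.yml.
# Service names are assumptions taken from this thread.

# Show only recent microservices log lines that mention redis.
docker compose logs --tail 500 immich-microservices | grep -i redis

# Follow new log lines from the containers involved in job queueing.
# This keeps running until interrupted with Ctrl+C.
docker compose logs --follow --tail 50 immich-server immich-microservices redis
```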

yeap, probably in 10 mins, looking at the old logs

Pretty weird

If you connect to the container you should be able to ping the redis container

how do I enter the container again? I forgot
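A short sketch of one way to get into the container and check Redis, assuming the compose service names used in this thread (immich-microservices and redis). Whether ping is installed inside the image is also an assumption.

```shell
# Ask Redis itself whether it is alive; a healthy server replies PONG.
docker compose exec redis redis-cli ping

# Open an interactive shell inside the running microservices container.
docker compose exec immich-microservices sh

# Inside that shell, check that the Redis hostname resolves and answers.
# Use whatever hostname your .env sets for Redis; "redis" is assumed here.
#   ping -c 3 redis
```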

This is pretty weird. The microservices container should use docker networking to resolve the redis hostname

And if redis is up and running things should just work, don't know why there are these weird connection errors.

Have you done anything unusual?

You are running docker compose up to bring up the stack?

Also, immich-server also connects to redis and that seems to be working fine.

docker compose up -d

also a weird thing I've seen is that it analyzes +10k images, while I only have +3k. Is it also analyzing the thumbnails?

There are some tags, but not all images have been analyzed.

uhmmm actually any search shows those 3 images. no matter what I type

re: "it analyzes +10k images" - there are actually two object tagging processes so it makes sense that it would be x2 the total number of assets.

If you restart and re-queue them all a second time, you might have also requeued them all again.

no more error messages since then

Any messages at all?

nothing, same as before

I think what you need to do is:

1. Get everything up and running (no redis errors)

2. Re-queue object detection and clip for missing

3. Wait for them to finish and then test out explore/search

Can you run

docker-compose up -d --force-recreate redis

I'm going to reboot my rpi, ram usage is almost full (4gb)

4GB of ram might be hitting the limit when we add in the facial recognition

can I opt-out of facial recognition but still have the current ml?

Maybe, we can put in a flag for that

for search to work nicely you only need CLIP encoding

the object and image classification isn't what makes search unique in Immich

In general, we'll need to re-visit the memory/job structure for all this ML stuff. It should be possible to make it work on the pi, albeit slowly. Currently it just uses a bunch of resources and multiple ML jobs run in parallel.

After rebooting I'm still getting the error

can you remove all the Immich related container?

then bring it up with

docker-compose up

remove all these?

correct

docker-compose down would do it.

make sure to remove the containers, don't remove the volumes
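The sequence described here could look roughly like this, assuming it is run from the directory containing docker-compose.yml:

```shell
# Stop and remove the stack's containers and network.
# Without -v / --volumes, named volumes (database, uploads) are kept.
docker compose down

# Recreate everything in the background.
docker compose up -d

# Confirm the containers are running.
docker compose ps
```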

Actually, I'm looking at my microservices logs

Reverse Geocoding Initialized is the last thing I see. Maybe your microservices container is up and running fine.

down and up again

there seem to be no errors 👀

Looks great

Do you want to try to queue the object detection job for missing?

but pressing on the missing still doesn't analyze all

The 1 job is to queue all the rest, so that's expected.

Give it a few

after about 2 seconds I see this

Are there no images missing tags?

How would I check that?

You could do a database query

How big is your library?

3k images

Just checked if there were clips missing, and I think all were missing

do I have to run clips before tags?

They are separate

Tags populates some stuff in explore, clip is purely for better quality search results.

how?

Connect to the database and run a query 🙂

You can run it on the container itself or expose the port and use an independent db client
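A hedged sketch of connecting to the database from the container itself. The container name, user and database name below are assumptions; take the real values from your compose file and .env. The table that holds tag data is not named in this thread, so the sketch only lists tables.

```shell
# Open psql inside the Postgres container.
# immich_postgres, postgres and immich are assumed names.
docker exec -it immich_postgres psql -U postgres -d immich

# Inside psql, list the tables to find the one holding tag / CLIP data:
#   \dt
```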

rpi crashed 😔

I ran the clips job completely. But when I click on missing, it starts all over

I feel like it's not saving the state of each image

I'm actually not really sure what happened.

When I search for things, it now shows the correct images. If I search for sea I see sea photos. So it must have done something right

3003 is machine learning. Your cpu might be maxed out

I'm limiting it to 2/4 cpu cores. So cpu usage is about 50%

Otherwise it was crashing my pi too often

Honestly running ml on the pi is a challenge. We have an item in our backlog to improve the usability of ML on the pi and other lower end devices. Basically improve how many jobs run concurrently. I think it is too high for your hardware.

We also have an issue with high memory usage for ML, which is also a WIP

The ML models are pretty large and are never unloaded so memory is always high

You can definitely keep trying to run missing clip over and over, but it might be easier to either wait for a future release or run ML on another machine

yeah, I can imagine. I think that for now I'm just going to run Immich without ML. It is a really neat feature, but more powerful hardware is definitely needed

You can offload ML to another machine and just periodically run missing when it is turned on.

interesting... I might try it out

I have a desktop workstation that I use when running a job on all assets, especially the initial load.

So my more powerful pc would have the ML container, with ports available to my local network?

just the immich_machine_learning container?

You can set the Immich machine learning URL env variable to the IP and port of your other PC, that PC just needs the one port open, probably 3003

And then just docker run command for the ML container. The only caveat is you do need to mount upload location to the container still. Hopefully it's a Nas that's available on the other pc
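A minimal sketch of the two pieces described above. Every value is a placeholder: the IP address, the NAS path and the image name are hypothetical, and the variable name IMMICH_MACHINE_LEARNING_URL is assumed from Immich's example .env, so check it against your own file. The docker run command is printed for review rather than executed.

```shell
# All values here are placeholders for illustration.
ML_HOST="192.168.1.50"            # hypothetical IP of the more powerful PC
ML_PORT="3003"                    # ML port mentioned in this thread
NAS_PATH="/path/to/nas/immich"    # host path where the NAS share is mounted
ML_IMAGE="your-immich-ml-image"   # placeholder: use the image from your compose file

# Line for the .env on the machine running the rest of Immich
# (variable name assumed; check your own .env).
ML_URL="http://${ML_HOST}:${ML_PORT}"
echo "IMMICH_MACHINE_LEARNING_URL=${ML_URL}"

# Command for the other PC, printed for review rather than executed.
# Left of the colon is the host path, right is the fixed container path.
ML_RUN_CMD="docker run -d -p ${ML_PORT}:${ML_PORT} -v ${NAS_PATH}:/usr/src/app/upload ${ML_IMAGE}"
echo "${ML_RUN_CMD}"
```

Printing the command first makes it easy to confirm that the host path sits on the left of the colon before anything is started.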

yeah, it's a nas

So if I understood it correctly, change the ML url from the .env here:

then on my windows machine, start the ml container, with open ports

Oh but how do I tell the windows machine where the location is?

I'll use docker desktop, I'll have to set the env vars there, right?

@jrasm91 does this look right to you?

I also don't really know how I'd add the user and password to the nas

UPLOAD_LOCATION=//192.168.1.49/Max/Immich/immich-app/immich-data

and the docker compose like this:

I'm now opening port 3003 on my windows firewall. Is ML TCP or UDP?

Getting this error on the ML container

I'm stuck here. I'm actually surprised how far I made it hahaha

does this mean I didn't mount the nas correctly?

upload folder shows nothing

Looks like the mount is missing

It is TCP 3003
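One way to confirm the port is reachable from the Pi, assuming a netcat variant that supports -z and -v is installed there. The IP address is a hypothetical stand-in for the ML machine.

```shell
# Run from the Pi; 192.168.1.50 is a hypothetical address for the ML machine.
# -z: only probe the port without sending data, -v: report the result.
# nc uses TCP unless told otherwise.
nc -zv 192.168.1.50 3003
```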

So it's able to receive a job and the file is just missing?

That's like 95% there.

What command did you use to mount it?

Oh the volume mount is backwards

You don't need the env file here

Left side is your Nas path then colon then the right side needs to be untouched (/usr/src/app/upload)

it's like that I believe

- ${UPLOAD_LOCATION}:/usr/src/app/upload:cifs,username=${NAS_USERNAME},password=${NAS_PASSWORD},sec=ntlm,vers=2.0,0,0

What you sent was backwards

I've been writing extra things because I was testing

Left of the colon is Nas path accessible from the host

yeah,

UPLOAD_LOCATION=//192.168.1.49/Max/Immich/immich-app/immich-data

so technically it's on the left, correct?

Oh ok, yes

maybe I'm not understanding what you mean 😅

Idk if you can use username in a volume mount

I think I had to add an fstab entry

ok, I'm more familiar with that :)

The right side needs to be /usr/src/app/upload exactly though

do I delete that line if I mount the nas inside the container?

Not sure what you mean

Left side is host, right side is container

But if I'm mounting the nas inside the container. I shouldn't have to specify it in the docker compose

How are you mounting it?

You usually mount it in the compose via the line in compose

I was saying fstab on the host and use that on the left side in the compose file

inside docker

mount -t cifs //192.168.1.49/Max/Immich/immich-app/immich-data /usr/src/app/upload -o username=***,password=***,vers=2.0,0,0

With 0 luck

Nah I would just focus on volume mount in docker compose

pfff I'm losing my mind.

shouldn't this be working?

Nooooooo lol

Add an fstab mount on the host at the location /media/nas

Then in compose put /media/nas/immich:/usr/src/app/upload

No cifs shares with ip

the gist is to do the mount operation separately, handled by the OS via fstab. Once you've confirmed it is mounted correctly, as a directory you can access from the Pi, use that directory for Immich
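A rough sketch of that order of operations on a Linux host. The mount point, the credentials file path and the directory layout under the share are assumptions for illustration; the share address is the one posted earlier in this thread.

```shell
# Mount point and credentials file are assumptions for illustration.
sudo mkdir -p /media/nas

# Mount the SMB share on the host (requires cifs-utils).
# The credentials file holds username=... and password=... lines.
sudo mount -t cifs //192.168.1.49/Max /media/nas -o credentials=/etc/nas-credentials,vers=2.0

# Confirm the files are visible on the host before involving Docker.
ls /media/nas/Immich/immich-app/immich-data

# Only then point the compose volume at the mounted directory, e.g.
#   - /media/nas/Immich/immich-app/immich-data:/usr/src/app/upload
```

To make the mount persistent, the same options go in the fourth field of an /etc/fstab entry, followed by the separate dump and pass fields (typically 0 0).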

aaaaah

I'm on windows, so I'm not sure if that's possible 💀

Then you use samba to mount first

I'm mounting it on drive N

UPLOAD_LOCATION=N:/Immich/immich-app/immich-data

Still no success

Am I writing the path correctly?

go to the folder in explorer

copy the address from the address bar

I'm not sure what type of slashes I have to use in the .env

/ or \ or \\ or capital letter drive

Medium

How to bind to a Windows Share using CIFS in Docker

Docker is great for building stateless, ephemeral services but sometimes these services need to access data outside the container. This…

I think cifs on docker on windows is a bit tricky

Stack Overflow

SMB/CIFS volume in Docker-compose on Windows

I have a NAS with a shared CIFS/SMB share that I would like to mount as a volume using docker-compose on Windows.

I have read through multiple suggestions (for instance using plugins, mounting it in

It looks like it's possible

I'm just going to use my mac. I'm so done with windows...

maybe use wsl2?

bruh, mac was just plug and play. already working

which container is in charge of transcoding video? I also want to offload that one

microservices

That one is a little more tricky. It subscribes to the redis queue, so you will need to run the microservices container with the Redis variables set to the IP and port of the service running on your Nas. The Redis port isn't exposed by default so you will need to expose it
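A hedged sketch of checking that setup from the second machine. It assumes the Redis port has already been published on the machine running the stack (for example by adding a 6379:6379 mapping under the redis service's ports in the compose file), that redis-cli is installed on the second machine, and that the variable names REDIS_HOSTNAME and REDIS_PORT match your Immich version. The IP address is a placeholder.

```shell
# REDIS_HOST is a placeholder for the machine running the Redis container;
# 6379 is the default Redis port.
REDIS_HOST="192.168.1.20"

# From the second machine, verify the published port answers (expects PONG).
redis-cli -h "$REDIS_HOST" -p 6379 ping

# Variables to pass to the remote microservices container
# (names assumed from Immich's example .env; confirm against your version).
echo "REDIS_HOSTNAME=${REDIS_HOST}"
echo "REDIS_PORT=6379"
```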