Unable to perform multi-part upload for files

Hi all,

I'm currently trying to use a Worker to perform a multipart upload of a file from my front-end. Since my website is hosted on Vercel, I have no choice but to tunnel my request through their APIs in order to reach my Worker. As a result, I have a hard 8 MB limit on the size of my function body.

However, when I try to perform a multi-part upload, I get the following error

It seems to me like the individual part uploads succeed, but when it comes to the final step of completing the multipart upload, I get a 400 error because of the error that completeMultiPartUpload throws.

I'm a bit confused as to how I might be able to fix this. I've tried to modify my partition function so that the last element is always at least 5 MB. I originally started slicing from the front (e.g. a 12 MB file becomes 5 5 2, whereas the new algorithm would slice it as 2 5 5).

Any help would be much appreciated. I am using Next.js with TypeScript and am happy to share source code if needed.

For context, the object I am testing this on is ~60 MB. I generated the chunks manually with the .slice function on the File object, using the following code

This yields around 13 chunks which are each 5242880 bytes in size and a last one which is 101232 bytes in size.

"I originally started slicing from the front (Eg. 12mb file becomes 5 5 2 and the new algorithm would slice it as 2 5 5 )."

You'd want the original slicing
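To make the 5 5 2 versus 2 5 5 point concrete, here is a rough sketch of a size check you could run on a part layout before uploading. It assumes the multipart rules are that every part except the last must be at least 5 MiB and that all non-final parts share one size; please confirm both against the R2 docs. `checkPartSizes` and `MIN_PART_BYTES` are made-up names for illustration, not part of any API.

```typescript
// Assumed minimum size for every part except the last (5 MiB).
const MIN_PART_BYTES = 5 * 1024 * 1024;

// Returns a list of human-readable problems; an empty list means the layout looks fine.
// Hypothetical helper, not part of the R2 or S3 APIs.
function checkPartSizes(sizes: number[], minPartBytes: number = MIN_PART_BYTES): string[] {
  const problems: string[] = [];
  // Only parts before the last one are checked; the last part may be any size.
  for (let i = 0; i < sizes.length - 1; i++) {
    if (sizes[i] < minPartBytes) {
      problems.push(`part ${i + 1} is ${sizes[i]} bytes, below the ${minPartBytes}-byte minimum`);
    }
    if (sizes[i] !== sizes[0]) {
      problems.push(`part ${i + 1} differs in size from part 1`);
    }
  }
  return problems;
}

const MiB = 1024 * 1024;
const frontFirst = checkPartSizes([5 * MiB, 5 * MiB, 2 * MiB]); // sliced as 5 5 2
const backFirst = checkPartSizes([2 * MiB, 5 * MiB, 5 * MiB]); // sliced as 2 5 5
console.log(frontFirst, backFirst);
```

Under those assumptions the front-first layout reports no problems, while the 2 5 5 layout is flagged because its first part is too small and the non-final parts are uneven.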

So I reverted back to the original algorithm I used

But it still throws the same error that

I'm using the same worker provided in the docs too haha

How big was that file overall?

It's around 60mb

Have been using the same file to test

But I'm getting the same error on my local wrangler server and my deployed worker

File gets broken up into 13 chunks of 5.24 MB and one last chunk of about 0.10 MB (101232 bytes).
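As a sanity check on those numbers, here is a minimal sketch of front-first slicing using the byte counts reported earlier in the thread (5242880-byte parts and a 101232-byte remainder). This is not the code from the original post; `partRanges`, `PartRange`, `PART_BYTES`, and `TOTAL_BYTES` are illustrative names.

```typescript
interface PartRange {
  partNumber: number; // 1-based part number
  start: number; // inclusive byte offset
  end: number; // exclusive byte offset
}

// Walks the file from the front in fixed-size steps; only the last range can be shorter.
function partRanges(totalBytes: number, partBytes: number): PartRange[] {
  if (partBytes <= 0) throw new Error("partBytes must be positive");
  const ranges: PartRange[] = [];
  for (let start = 0, n = 1; start < totalBytes; start += partBytes, n++) {
    ranges.push({ partNumber: n, start, end: Math.min(start + partBytes, totalBytes) });
  }
  return ranges;
}

const PART_BYTES = 5 * 1024 * 1024; // 5242880
const TOTAL_BYTES = 13 * PART_BYTES + 101232; // sizes reported in the thread
const ranges = partRanges(TOTAL_BYTES, PART_BYTES);
const last = ranges[ranges.length - 1];
console.log(ranges.length, last.end - last.start, (TOTAL_BYTES / 1e6).toFixed(1));
```

In the browser, each range would map to `file.slice(range.start, range.end)` on the File object, with `range.partNumber` sent along as the part number.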

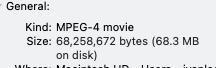

Seems like it's around 68.3 MB

No idea - my only possible guess would be there's an issue with your actual UploadPart operations and the multipart upload on R2 isn't right

Hmm, ok nvm, I will try working with the S3 API first and see if that works out

Thanks so much @kiannh 🙂