what do you mean by that

Let me try to explain better 🙂 Example, starting from https://civitai.com/gallery/59757?modelId=5657&modelVersionId=6582&infinite=false&returnUrl=%2Fmodels%2F5657%2Fvinteprotogenmix-v10

using my merged checkpoint (that model -> my checkpoint -> sd15)

keep the exact prompt and seed, and it's fairly close:

wow very nice

BUT

but getting your face is hard

yes! 🙂

that is what's up with those models

they get generic things

it always wants to come back w/ basically that kind of face, the style is lost etc

i still see decent image though

have you tried generating hundreds of images to see?

have you tested multiple values of cfg (prompt strength)?

Hmm. No, i always forget that! darn

let me do so 🙂

i was trying to recreate something like:

originally

same model start

let me do x/y

also I couldn't figure this out from your video,

now it's x/y/z

and

you get one image across all the grids and strength

i get one image per vertical axis

so it's very hard to compare

i have shown it

in multiple videos

x/y

same x/y/z

i set x to S/R, y to cfg

and it's one seed per column it seems, even if i set a seed. I don't know, it's not a huge deal anyway 🙂

starting a grid run now, let us see. oh I joined Patreon, not sure how to map it here but my initials are R.G. 🙂

awesome ty so much

do you want me to give you patron role?

i can help you x/y plot via anydesk if you want

sadly i can't share this computer, work stuff. But I will certainly take various questions answers in the future 🙂 Role, sure, is up to you. Happy to support

also am running these on M1 Max Mac which works fine, not sure if it matters much if the computations complete successfully ? 🙂

it shouldn't matter

as long as you are not using incorrect xformers

i suppose you can't use it

on mac

i can enable, but i sometimes forget to enable/disable

very good

this is the test you need

before merging checkpoint

did you test best checkpoint

ah well this is from merged already 🙂 that being me

my face

ye i know

but before merging

you should check

which checkpoint works best

oh you mean best steps checkpoint

?

yes

messing around with the checkpoints it felt like my 3000 steps one was better than 2700 but let me check my memory against actual results 🙂

afk for a while

If i somewhat understand things, if my ckpt is too strong, it would be overtaking the style of the other model

you can reduce its combine strength

or use a lower-step checkpoint

this is my 2700 steps at .9 merge strength

i don't think good enough, so perhaps I will do my 3000 steps at .75 instead of .9
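A minimal sketch of what the merge strength does, assuming the three-model merge described above ("that model -> my checkpoint -> sd15") is an add-difference merge, A + (B - C) * M. The chat never names the merge mode, so that is an assumption, and the dicts below are toy stand-ins for checkpoints, not real weights.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def add_difference(a: Weights, b: Weights, c: Weights, m: float) -> Weights:
    """Return a + (b - c) * m for each tensor name present in all three models."""
    merged: Weights = {}
    for name, a_vals in a.items():
        if name not in b or name not in c:
            # Keep A unchanged where B or C has no matching tensor.
            merged[name] = list(a_vals)
            continue
        merged[name] = [
            av + (bv - cv) * m
            for av, bv, cv in zip(a_vals, b[name], c[name])
        ]
    return merged


# Toy values only (hypothetical): A = style model, B = trained face checkpoint, C = base model.
style_model = {"layer.weight": [1.0, 2.0]}
face_model = {"layer.weight": [0.5, 0.25]}
base_model = {"layer.weight": [0.25, 0.25]}

strong = add_difference(style_model, face_model, base_model, 0.9)
softer = add_difference(style_model, face_model, base_model, 0.75)
print(strong["layer.weight"])
print(softer["layer.weight"])
```

Under this reading, lowering M from .9 to .75 shrinks how much of the face checkpoint's learned difference is added on top of the style model.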

really the "right" way to do this is just train against the actual model right? 🙂

ie. train against protogen or whatever

your settings are good except the vae

there is better vae

on that model

you can try training textual inversion

on custom models it works better than dreambooth

but maybe dreambooth is better than before on custom models, needs testing :d

oh woah i didn't notice that was 56000 , i had 84000 also but didn't pick it

84k better

this is custom-> my 3000 step -> sd 15 strength .75 w/ the 56000 vae accidentally baked

i think the ckpt is sort of good, 7.0 1.0/1.1 maybe 1.2

but i am open to whatever you recommend as best current way to train 🙂

20 images, you know my end goal, put my face into fancy styles like you and (i forget his name but I pasted the screen earlier)

looks like cfg 7 best

yeah

i think you can already generate good ones with this merged

to me that means probably it's "good", i.e. i don't have to push weights or cfg high?

prompts making huge difference

👍

Portrait of a handsome confident young (rgdw person:weight_val), rimlight, geometric pattern on the clothes, colorful background

let me test your prompt on my model

can you type negative too

I am doing this one now, sorry i accidentally lost the previous:

prompt:

centered detailed portrait of a (rgdw person:weight_val), vibrant peacock feathers, intricate, elegant, highly detailed, digital painting, artstation, smooth, sharp focus, illustration, illuminated lines, outrun, vaporware, intricate venetian patterns, cyberpunk darksynth, by audrey kawasaki and ilya kuvshinov and alphonse mucha, award winning, ultra realistic, octane render

negative: canvas frame, cartoon, 3d, ((disfigured)), ((bad art)), ((deformed)),((extra limbs)), ((extra barrel)),((close up)),((b&w)), weird colors, blurry, (((duplicate))), ((morbid)), ((mutilated)), [out of frame], extra fingers, mutated hands, ((poorly drawn hands)), ((poorly drawn face)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck))), (((tripod))), (((tube))), Photoshop, video game, ugly, tiling, poorly drawn hands, poorly drawn feet, poorly drawn face, out of frame, mutation, mutated, extra limbs, extra legs, extra arms, disfigured, deformed, cross-eye, body out of frame, blurry, bad art, bad anatomy, 3d render, spots on neck, cropped, out of frame

seed 2346951780

DDIM (b/c that's what I saw, but I don't have a real knowledge of which is right when)

weight_val,1.0,1.1,1.2,1.3,1.4

7,8,9,10,11

20 steps

my results with same seed
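A rough sketch of the grid those settings describe: every weight value crossed with every cfg value, all on one fixed seed. This is plain Python for illustration, not the web UI's own code. The prompt is shortened, and `build_grid` and its parameter names are made up for this example.

```python
from itertools import product

# Shortened version of the prompt above; "weight_val" is the placeholder to swap out.
PROMPT = "centered detailed portrait of a (rgdw person:weight_val), vibrant peacock feathers"
SEED = 2346951780
STEPS = 20


def build_grid(prompt, search, replacements, cfg_scales, seed, steps):
    """Return one job dict per (replacement, cfg) cell, all sharing the same seed."""
    jobs = []
    for value, cfg in product(replacements, cfg_scales):
        jobs.append({
            "prompt": prompt.replace(search, value),
            "cfg_scale": cfg,
            "seed": seed,
            "steps": steps,
        })
    return jobs


jobs = build_grid(
    PROMPT,
    "weight_val",
    ["1.0", "1.1", "1.2", "1.3", "1.4"],
    [7, 8, 9, 10, 11],
    SEED,
    STEPS,
)
print(len(jobs))
print(jobs[0]["prompt"])
```

Keeping the seed identical in every cell is what makes the cells comparable: only the weight and cfg change between them.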

i use Euler a

and i don't remember what vae i baked in this one 😦

woah

random seed

which model were you merging? i will try it

those are much ... i feel it "understands" you better

your training

by the way this is 50 epoch training

from my new video

VinteProtogenMix V1.0 | Stable Diffusion Checkpoint | Civitai

this model makes colorful pictures. crisp sharp colors, character also looks very nice. was merged 22h vintedois-diffusion-v0-1 + 1(protogenV22AnimeOffi_22 - v1-5-pruned-emaonly) + vintedois-diffusion-v0-1 + Protogen v2.2 (Anime) Official Release. VAE not required.

wow

i get confused on the epoch calculation but i think mine is more at any rate

explained it in new video

20 images * 3000 steps (i think...200 class)

clearly several times :d

how perfect! 🙂

i wonder if you might look https://gist.github.com/rtgoodwin/2b833f58ecac12c72f82963314c4145d

Gist

rgdw man 50x classification

i already kicked off training but if you see something awful i would stop it maybe

i might have to stop after class image generation anyway to drive to work 🙂

differences from past: 50x, bf16, set vae in main UI (not specified in training, not sure if matters)

don't know if it's the vae but the class images look really good for a change! 🙂

I might write a script to capture as many details as one can about training env and model bc I’m always forgetting and would like to be able to just drop some file in and go. Unless something exists already. Like model yaml and json, package versions, SD settings probably hmm
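One possible shape for such a script, as a sketch only: it records the Python version, platform, installed versions of a few packages, and a hash of each config file, so a run can be matched to its settings later. The package and file names in the example call are placeholders, not taken from the chat.

```python
import hashlib
import json
import platform
import sys
from importlib import metadata
from pathlib import Path


def capture_environment(packages, config_files=()):
    """Collect a JSON-serializable snapshot of the training environment."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # package is not installed

    files = {}
    for path in map(Path, config_files):
        if path.is_file():
            # Hash the file so later changes to the config are detectable.
            files[str(path)] = hashlib.sha256(path.read_bytes()).hexdigest()
        else:
            files[str(path)] = None  # file is missing

    return {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "packages": versions,
        "config_files": files,
    }


if __name__ == "__main__":
    # Placeholder names; swap in whatever the training setup actually uses.
    snapshot = capture_environment(
        ["torch", "diffusers", "transformers"],
        ["model.yaml", "db_config.json"],
    )
    print(json.dumps(snapshot, indent=2))
```

Saving that JSON next to each checkpoint would be one way to stop forgetting which vae or precision a run used; web UI settings would need to be added separately.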

i think you can generate class images on txt2img tab

it is faster

then put them into a folder

and give that folder path

i couldn't figure how to do all of them at once...there is batch (count? the bottom one) but honestly i wasn't sure if I could just put 1700 there 🙂

i wonder if it's possible to make a docker image for all this to pull into runpod... something to play with later 🙂

i don't have experience with docker :d but i can connect to your computer and show you

no worries i have docker experience! 🙂 maybe can help everyone out. but that's just for getting the environment all set up, maybe inject default settings

I love runpod, just keeps on chugging while i was off doing a work presentation!

picking a higher-RAM machine helps a lot

that's good yeah?

yep

best one

well, it sorta went ok. 🙂 permission to dm?

actually trying shivam trainer now again, high RAM premium GPU colab. 6000 steps (why not 🙂 ) 50x. bf16 seems to work, no 8bit adam. Pruned my training images to reduce similar. 🤞

errr fp16

what is the model you used?

if that branch doesn't exist you need to change it

it was runway 1-5. Again very odd results. at 1000 steps even (28 instance, 1652 class, 1 size batch....58 steps per epoch? that would be 17 epoch?)

some beautiful images then just regular photo of me. Or maybe that was 1400. At 1000 actually i think it's all images but it learned me as having big pink lips which maybe came from doing "person" not "man" 🙂

or is it just steps / instance, so 1000 / 28 = 35 epoch
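The two guesses above work out as follows. This only reproduces the arithmetic from the chat. Trainers differ in what they count as an epoch, so neither function is claimed to match a particular trainer, and the function names are made up for this sketch.

```python
def epochs_per_instance_pass(total_steps, instance_images, batch_size=1):
    """Epochs if one epoch means each instance image is seen once."""
    steps_per_epoch = instance_images / batch_size
    return total_steps / steps_per_epoch


def epochs_per_class_ratio(total_steps, instance_images, class_images):
    """Epochs if steps per epoch is class images / instance images (the first guess)."""
    steps_per_epoch = class_images / instance_images
    return total_steps / steps_per_epoch


# Numbers from the chat: 1000 steps, 28 instance images, 1652 class images, batch size 1.
first_guess = epochs_per_class_ratio(1000, 28, 1652)
second_guess = epochs_per_instance_pass(1000, 28)
print(round(first_guess, 1))
print(round(second_guess, 1))
```

With these inputs 1652 / 28 is exactly 59 steps per epoch, which gives roughly 17 epochs for the first guess, while the second guess gives roughly 35.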

so i think I will go back to 800 model and remove the 1 or two photos that imply i had lip surgery 🙂 or maybe just start over w/o them. It's not bad, just 2 'photos' break through in that batch

i think this new incoming video will help you

i have compared 10 different settings

oh wow that all sounds very exciting!

btw unless it's not recommended highly, I think it's a wonderful hack to use output pics from overtrained model (where it is just a perfect made-up pic of me) for inference 🙂

yep some people prefer this too

Stable Diffusion 🎨 AI Art (@DiffusionPics)

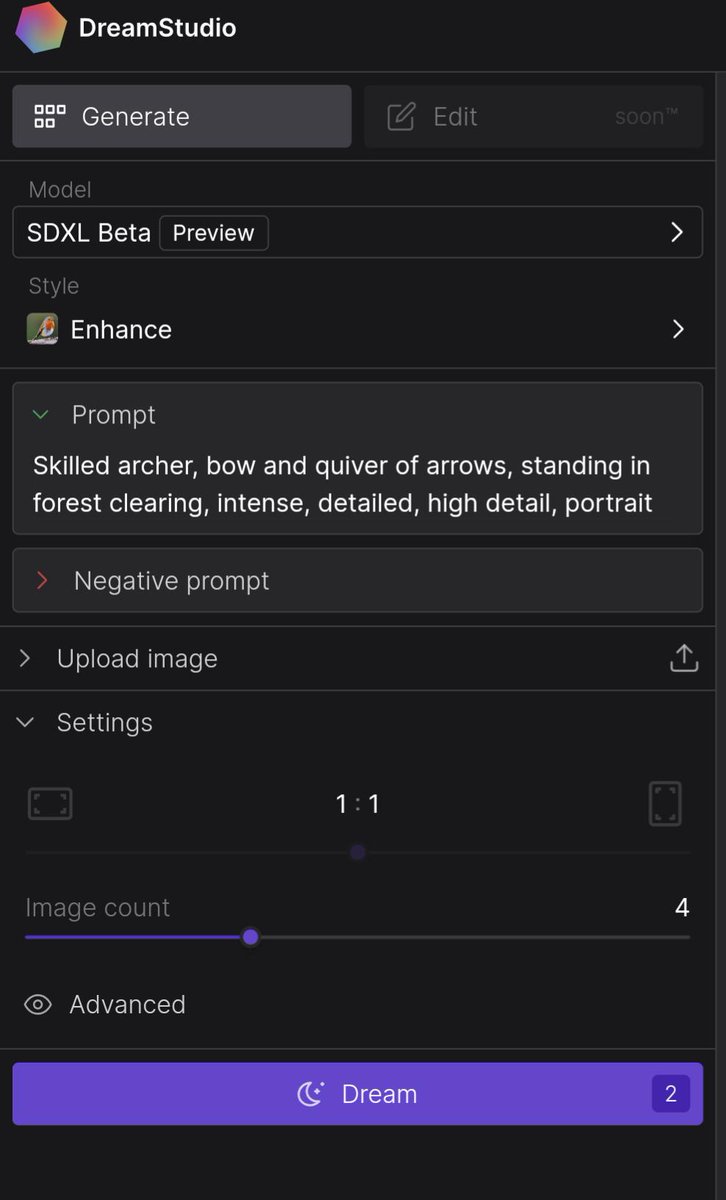

Stable Diffusion XL preview is now on DreamStudio! Will be released after training is complete. #AIArt

#StableDiffusion2 / #StableDiffusion

looks like Stable Diffusion XL is coming soon

wow nice

Joe Penna (@MysteryGuitarM)

@EMostaque all straight out of the model

no tricks, no inits

SD XL images

it's able to do different aspect ratios

some of these are super wide aspect

in the thread

looks like it may be resolution independent for training, which would be incredible

from the thread: super wide aspect

amazing

Hi! I'm working on a project and need an HD 16:9 aspect ratio. I'm working at 640 x 384 resolution and upscaling by a factor of 3 in the output settings, but the result is very bad: very cartoonish and ultra stylized, and I'm looking for a more photorealistic vibe. Is there a way to add upscale models to Deforum? By default there are just 3 models. THANKS!
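For reference, the arithmetic behind those numbers as a small sketch: 640 x 384 upscaled 3x is not quite 16:9, so a crop is needed to reach an HD frame. `upscale_and_crop` is a made-up helper for this illustration and has nothing to do with Deforum's own settings.

```python
def upscale_and_crop(width, height, factor, target_ratio=(16, 9)):
    """Return the upscaled size and the largest target-ratio size that fits inside it."""
    up_w, up_h = width * factor, height * factor
    ratio_w, ratio_h = target_ratio
    if up_w * ratio_h <= up_h * ratio_w:
        # Frame is too tall for the target ratio: keep the width, trim the height.
        crop_w, crop_h = up_w, up_w * ratio_h // ratio_w
    else:
        # Frame is too wide for the target ratio: keep the height, trim the width.
        crop_h, crop_w = up_h, up_h * ratio_w // ratio_h
    return (up_w, up_h), (crop_w, crop_h)


upscaled, cropped = upscale_and_crop(640, 384, 3)
print(upscaled, cropped)
```

So the 3x output comes out at 1920 x 1152, and trimming 72 rows in total leaves a 1920 x 1080 frame.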

use ultra sharp upscaler

it is best

is your 640 x 384 good?

Hi Dr. Thanks for answering! No, 640x384 is good for testing prompts and values but not for final export. I don't see the Ultra Sharp option in the upscale model list in Output; only these 3 options appear: realesr-animevideov3, realesr x4plus, realesr x4plus-anime

you have to download it

i explained in multiple videos :d

Ho! Thanks!

I'll look for it

Is there a specific video? I have seen almost all of them and don't remember learning about ultra sharp upscaler

this is a technique that you can use

WOW! Awesome! Thank you

💯

It appears that Ultrashar only works for still images and not for video.

UltraSharp*

yes it works for images