file.json.gz - SyntaxError unexpected end of data at line 1 column 1 of the JSON data

Hi guys, first I want to apologize if this question is extremely stupid, but I have no clue how I would even begin to solve this issue.

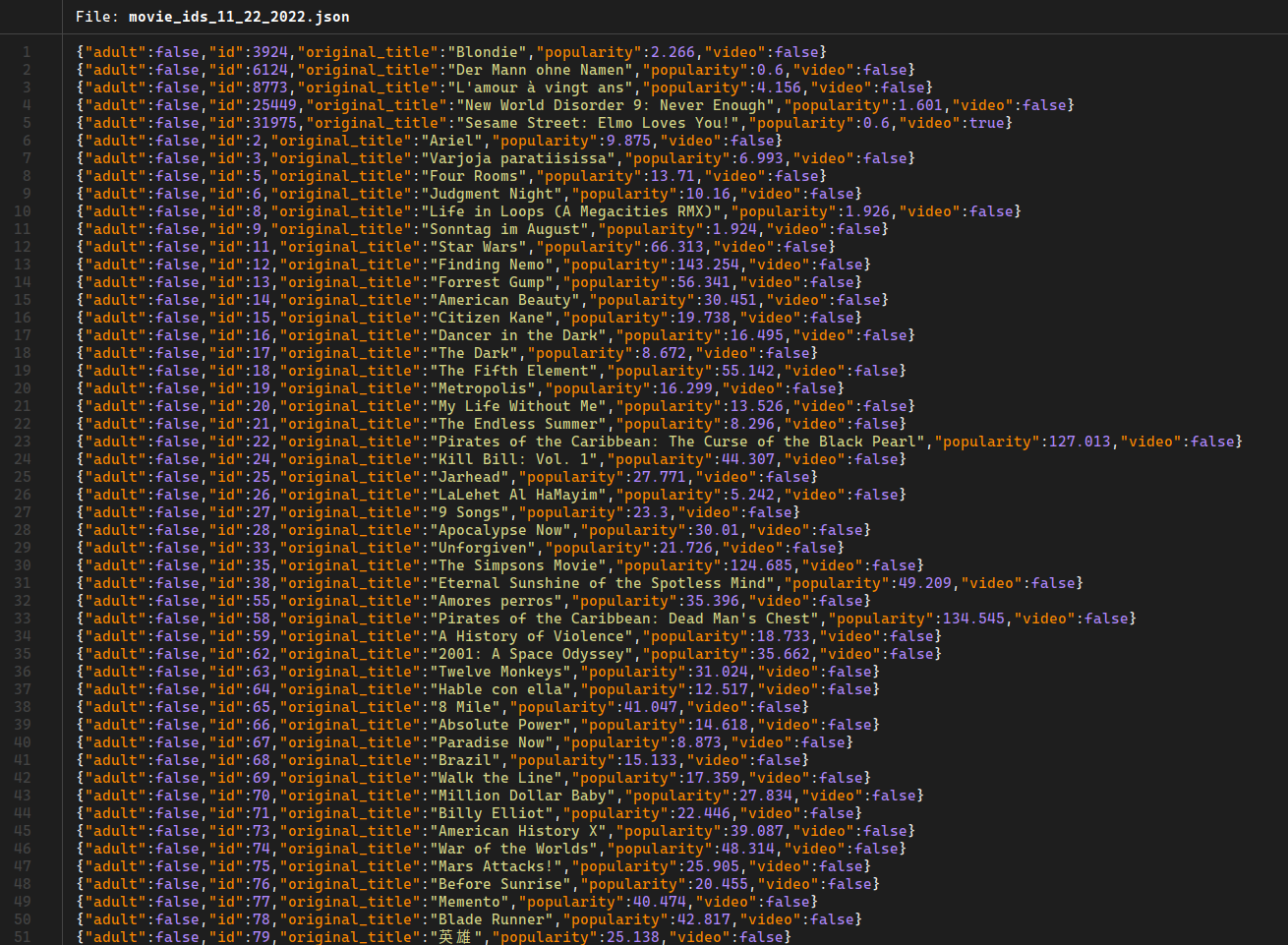

Anyway, I am fetching a file export from The Movie DB. http://files.tmdb.org/p/exports/movie_ids_11_22_2022.json.gz

If you open this file you will see that it's JSON with objects that are not separated by commas, therefore I cannot "extract" stuff out of them.

I am doing all of this in React with a package called Pako, which essentially handles the gzip decompression.

code:

Do you have any idea how to separate all of those objects with commas so I can read stuff out of them?

It's my first question here, so if there is a code formatter, sorry for not figuring out where it is 😄

And thanks!!

20 Replies

oh and yes, this is a urls const:

const urls = [

'http://files.tmdb.org/p/exports/movie_ids_11_22_2022.json.gz',

]

please use markdown code block for code

```ts

code here

```

It's hard to read code without proper highlighting and monospace font

I think you're using the wrong decompression algorithm, try

pako.ungzip

I edited it @Ronan, thank you, since I didn't know how to markdown the code 😄

Ok Imma try this

I tried pako.ungzip but still get the same SyntaxError

I think I am accessing the file, and it's decompressed, but it can't read the JSON properly because there are no commas

i unzipped it manually

it does not seem to be valid json on its own but i am going to double check

yeah, I don't think it's a valid JSON export
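For anyone reading along: the decompressed file appears to hold one JSON object per line rather than a single JSON array, which would explain why `JSON.parse` on the whole text fails. A minimal sketch of parsing it line by line, assuming every non-empty line is a complete object. `parseJsonLines` and the sample fields are made up for illustration, not taken from the thread:

```ts
// Hypothetical helper: parse text that holds one JSON object per line.
function parseJsonLines<T = unknown>(text: string): T[] {
  const results: T[] = [];
  for (const line of text.split(/\r?\n/)) {
    const trimmed = line.trim();
    if (trimmed === "") continue; // skip blank lines, e.g. a trailing newline
    results.push(JSON.parse(trimmed) as T);
  }
  return results;
}

// Made-up sample in the same shape: objects separated by newlines, no commas.
const sample = '{"id":1,"popularity":2.5}\n{"id":2,"popularity":7.1}\n';
const movies = parseJsonLines<{ id: number; popularity: number }>(sample);
console.log(movies.length, movies[1].popularity);
```

In the real app, `text` would be the string you get after decompressing the download. No commas need to be added, since each line is parsed on its own.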

yes, looks like you need

it's also like a 75MB JSON, so you may need to test what's optimal
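One thing that might help with the size: instead of building one big array of every object, walk the text one line at a time and keep only the fields you need. A rough sketch, assuming one JSON object per line with `id` and `popularity` fields. `forEachJsonLine` and the sample data are hypothetical:

```ts
// Hypothetical helper: call `onEntry` for each non-empty line, parsed as JSON.
// Returns how many lines were parsed.
function forEachJsonLine(text: string, onEntry: (entry: unknown) => void): number {
  let count = 0;
  let start = 0;
  while (start < text.length) {
    let end = text.indexOf("\n", start);
    if (end === -1) end = text.length; // last line has no trailing newline
    const line = text.slice(start, end).trim();
    start = end + 1;
    if (line === "") continue; // ignore blank lines
    onEntry(JSON.parse(line));
    count++;
  }
  return count;
}

// Made-up sample: keep only id -> popularity and drop everything else.
const sampleText =
  '{"id":10,"popularity":1.5,"video":false}\n\n{"id":20,"popularity":3.25,"video":false}';
const popularityById: Record<number, number> = {};
const parsedCount = forEachJsonLine(sampleText, (entry) => {
  const { id, popularity } = entry as { id: number; popularity: number };
  popularityById[id] = popularity;
});
console.log(parsedCount, popularityById[20]);
```

This still needs the whole decompressed string in memory, so it only avoids the extra array of lines and the full parsed objects. Whether that is enough for a file this size is something you would have to measure.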

if you can get it in a different format like CSV it might help out

or just use their JSON API if that's applicable to your use case

Guys, thank you so much. I am not home currently but I will try this once I get my hands on code.

I really appreciate your time investment!!

And no.. I need these files because there is a popularity value on each movie that changes every day

And I need to have files from 5 days in the past so I can have 5 different popularity values to use in a chart
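For the five-days part, the export URL in this thread looks like it follows a `movie_ids_MM_DD_YYYY.json.gz` pattern. A small sketch of building the URLs for the last few days, assuming that pattern holds for other dates too (not verified here). `exportUrlsForPastDays` is a made-up helper name:

```ts
// Zero-pad a number to two digits, e.g. 2 -> "02".
function pad2(n: number): string {
  return n < 10 ? "0" + n : String(n);
}

// Hypothetical helper: URLs for `latest` and the days before it, newest first.
// Assumes the naming pattern seen in the thread applies to every date.
function exportUrlsForPastDays(latest: Date, days: number): string[] {
  const urls: string[] = [];
  for (let i = 0; i < days; i++) {
    // Date.UTC rolls over month and year boundaries when the day goes below 1.
    const d = new Date(
      Date.UTC(latest.getUTCFullYear(), latest.getUTCMonth(), latest.getUTCDate() - i)
    );
    const name =
      "movie_ids_" + pad2(d.getUTCMonth() + 1) + "_" + pad2(d.getUTCDate()) + "_" + d.getUTCFullYear();
    urls.push("http://files.tmdb.org/p/exports/" + name + ".json.gz");
  }
  return urls;
}

const lastFive = exportUrlsForPastDays(new Date(Date.UTC(2022, 10, 22)), 5);
console.log(lastFive[0]);
// → http://files.tmdb.org/p/exports/movie_ids_11_22_2022.json.gz
```

The dates are computed in UTC on purpose so the result does not depend on the browser's time zone. Each URL would then be fetched and parsed separately to get one popularity value per day.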

nice

Sorry I am fat fingering my phone keyboard

thats a nice idea for a project

ill add it to my never ending list of things i havent done

Hahahaha

Yeah thanks, it's really nice.. you can check https://marko-movie-app.netlify.app

Its cool haha

ah yes i've seen these demos

https://movies.nuxt.space/

and the solid one is pretty insane

https://solid-movies.app/ i need to dig into this one on why it feels so good

Mm these look cool yeah

Yeah this project is for my personal education

Its fun to run into problems like this one for instance

Yea for sure the ones listed are showcases for frameworks to sort of be like "look what I can do"

Seems like you know your stuff, which is why I'm telling you that this gives the same result (SyntaxError) 😦

Ehhhhh this is a tough one

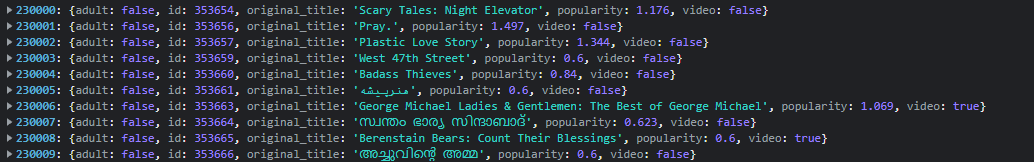

Hey Cro, I've got a weird solution for you

tested working in chrome

As of right now, I've got it to get parsed and show as such

Woah, yeah man!!! It works!! I managed to do it too yesterday but not 100%; I got output, but it was weird. This is much better, thank you dude